Currently, the maximum file size with chunking disabled is 50MB. One workaround is to use Azure Functions to transfer the files from one container to another.

Below is the sample Python code that worked for me when transferring files from one container to another:

```python
from datetime import datetime, timedelta, timezone

from azure.storage.blob import (
    AccountSasPermissions,
    BlobClient,
    BlobServiceClient,
    ResourceTypes,
    generate_account_sas,
)

connection_string = '<Your Connection String>'
account_key = '<Your Account Key>'
source_container_name = 'container1'
blob_name = 'samplepdf.pdf'
destination_container_name = 'container2'

# Create the service client from the storage account connection string
client = BlobServiceClient.from_connection_string(connection_string)

# Create an account SAS token with read access to objects (blobs),
# valid for 4 hours, so the service can read the copy source
sas_token = generate_account_sas(
    account_name=client.account_name,
    account_key=account_key,
    resource_types=ResourceTypes(object=True),
    permission=AccountSasPermissions(read=True),
    expiry=datetime.now(timezone.utc) + timedelta(hours=4),
)

# Create a blob client for the source blob; its .url includes the SAS token
source_blob = BlobClient(
    client.url,
    container_name=source_container_name,
    blob_name=blob_name,
    credential=sas_token,
)

# Create the destination blob and start the server-side copy operation
new_blob = client.get_blob_client(destination_container_name, blob_name)
new_blob.start_copy_from_url(source_blob.url)
```
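`start_copy_from_url` returns as soon as the copy has been started: its result reports `copy_status` as `'success'` if the copy finished synchronously, or `'pending'` if it is still running on the service side. If you need to know when a large file has actually landed in the destination container, a minimal polling sketch could look like the one below. `wait_for_copy` and its `timeout`/`interval` parameters are hypothetical names, and the status strings assume the values the SDK reports in `BlobProperties.copy.status` (`pending`, `success`, `aborted`, `failed`). The status lookup is passed in as a callable so the helper does not depend on the SDK itself.

```python
import time
from typing import Callable


def wait_for_copy(get_status: Callable[[], str],
                  timeout: float = 300.0,
                  interval: float = 2.0,
                  sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status() until the copy is no longer 'pending'.

    Hypothetical helper: returns the final status string (assumed to be
    'success', 'aborted' or 'failed') and raises TimeoutError if the copy
    is still pending once `timeout` seconds have elapsed.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status != "pending":
            # The copy has finished, one way or another
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"copy still pending after {timeout} seconds")
        # Wait before asking the service again
        sleep(interval)
```

With the script above, it could be called as `wait_for_copy(lambda: new_blob.get_blob_properties().copy.status)`. The `sleep` parameter exists only so the loop can be exercised without real waiting.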

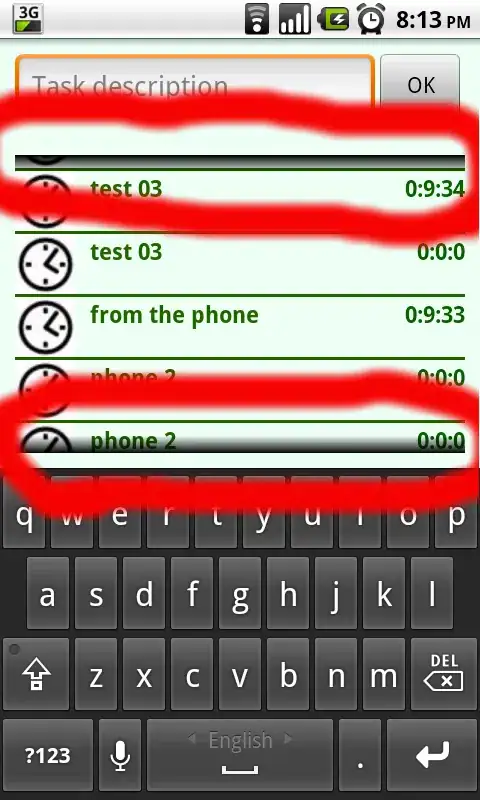

RESULT:

REFERENCES:

- General Limits

- How to copy a blob from one container to another container using Azure Blob storage SDK