I need to consolidate data from 30 databases and 20 tables. My first attempt was to create one data flow for each table. Each data flow would have 30 db sources which takes data from 1 table from each db and then inserts in 1 destination. This doesn't work because there is no enough memory. I tried to filter data to ignore data that's not going to be used - it didn't help. These tasks should perform on daily basis. Whole Consolidation db is deleted and then new data is inserted, which means that huge amounts of data are inserted daily. Anyone has a suggestion of how to handle memory problem?

The only solution for now I see is to create 20 packages. Each package would have 30 data flows. Each data flow would take data from only one database. But then again, I don't know what is the order of execution? Will data flows of one package start to execute at the same time or they go one by one?

EDIT:

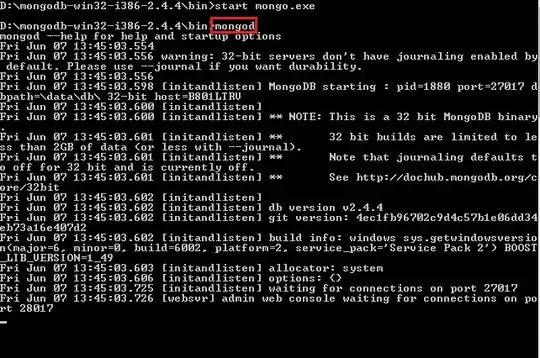

This is how I did it... here's screenshot of Control flow:

Screenshot of Data Flow:

I created one package for one table from all databases. In this case, each dataflow takes 4-year old data from a table and imports to a source database. The problem is that I have one table in one database which has too much data and it returns error:

The buffer manager failed a memory allocation call for 10484608 bytes, but was unable to swap out any buffers to relieve memory pressure. 20 buffers were considered and 20 were locked. Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Any suggestions?