there are many ways to do this ... the approach I used is to iterate across each pixel in the input image and assign each pixel, in order, a unique frequency ... the range of frequencies is arbitrary, so let's spread them across part of the human audible range, from 200 to 8,000 Hertz ... divide the width of this range (7,800 Hertz) by the number of pixels, which gives you a frequency increment value ... give the first pixel 200 Hertz and, as you iterate across the remaining pixels, give each pixel the previous pixel's frequency plus this increment
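a minimal Go sketch of this frequency assignment, under my own assumptions: the name `pixelFrequencies` and its parameters are hypothetical, and each frequency is computed as start plus index times increment instead of a running sum, which gives the same values while avoiding accumulated rounding error

```go
package main

import "fmt"

// pixelFrequencies gives each pixel its own frequency, evenly spaced
// starting at minHz. The increment is the width of the range divided by
// the pixel count, so the last pixel lands one increment below maxHz.
// (Hypothetical helper, not the author's code.)
func pixelFrequencies(numPixels int, minHz, maxHz float64) []float64 {
	if numPixels <= 0 {
		return nil
	}
	incr := (maxHz - minHz) / float64(numPixels)
	freqs := make([]float64, numPixels)
	for i := range freqs {
		freqs[i] = minHz + float64(i)*incr
	}
	return freqs
}

func main() {
	// A toy 4-pixel "image" spread across 200 to 8,000 Hertz.
	fmt.Println(pixelFrequencies(4, 200, 8000)) // prints [200 2150 4100 6050]
}
```

for a real image, `numPixels` would be width times height, so the increment gets very small as the resolution grows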

while you perform the above iteration across all pixels, read the light intensity of the current pixel and normalize it to a value from zero to one ... this value will be the amplification factor for the frequency of that pixel

now you have a new array where each element records a light intensity value and a frequency ... walk across this array and, for each element, create an oscillator that outputs a sine curve at the element's frequency with an amplitude driven by its amplification factor ... now combine all such oscillator outputs and normalize the sum into a single aggregate audio signal

this aggregate synthesized audio is the time domain representation of the input image, which was your frequency domain starting point

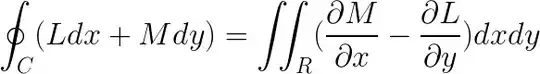

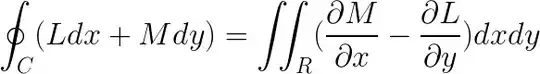

the beautiful thing is that this output audio is the inverse Fourier Transform of the image ... anyone fluent in the Fourier Transform will predict what comes next, namely that this audio can then be sent into an FFT call, which will output a new image that, if you implement all this correctly, will more or less match your original input image

I used golang not python however this challenge is language agnostic ... good luck and have fun

there are several refinements to this ... a naive way to parse the input image is to simply zig zag left to right, top to bottom, which will work ... however, if you use a Hilbert Curve to determine which pixel comes next, pixels that are close together in the image stay close together in frequency, so your output audio will be better suited to people listening, especially when and if you change the resolution of your original input image ... ignore this embellishment until you have it working
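for when you do get to the Hilbert Curve, here is a sketch of the well-known iterative conversion from a position along the curve to pixel coordinates ... it assumes a square image whose side is a power of two, and the name `hilbertD2XY` is my own

```go
package main

import "fmt"

// hilbertD2XY converts a distance d along the Hilbert curve into (x, y)
// coordinates on an n-by-n grid. n must be a power of two and d must be
// in the range 0 to n*n-1.
func hilbertD2XY(n, d int) (x, y int) {
	t := d
	for s := 1; s < n; s *= 2 {
		rx := 1 & (t / 2)
		ry := 1 & (t ^ rx)
		// Rotate or flip the quadrant so the sub-curves join end to end.
		if ry == 0 {
			if rx == 1 {
				x = s - 1 - x
				y = s - 1 - y
			}
			x, y = y, x
		}
		x += s * rx
		y += s * ry
		t /= 4
	}
	return x, y
}

func main() {
	// Walk a 2x2 grid in curve order.
	for d := 0; d < 4; d++ {
		x, y := hilbertD2XY(2, d)
		fmt.Println(d, x, y)
	}
}
```

on the 2x2 grid this visits (0,0), (0,1), (1,1), (1,0) ... to use it, replace the pixel loop's left to right, top to bottom order with `d` running from 0 to n*n-1, and give the pixel at `hilbertD2XY(n, d)` the d-th frequency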

far more valuable than the code which implements this is the voyage of discovery endured in writing the code ... here is the video which inspired me to embark on this voyage: "Hilbert's Curve: Is infinite math useful?" https://www.youtube.com/watch?v=3s7h2MHQtxc

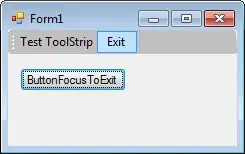

here is a sample input photo

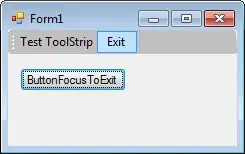

here is the output photo after converting above image into audio and then back into an image

once you get this up and running and are able to toggle from the frequency domain into the time domain and back again, you are free to choose whether you start from audio or from an image