I have a mixed data that is taken mainly from Wikidata, it contains Arabic fields, English fields, and the data in Arabic and English is mixed with other languages such as Greek characters, Hindi numbers, Russian maybe, emojis, Cyrillic letter:

Example of possible data:

ڱ

١٩٣٣

Белгородский государственный университет

The sample above shows like ? in my database, I tried to use different collation choices like

ARABIC_CI_AS

Arabic_CI_AI_KS

Arabic_100_CI_AI_KS_WS

My table schema:

CREATE TABLE [dbo].[NED_Corpus_True_Expanded]

(

[id] [int] IDENTITY(1,1) NOT NULL,

[Wikidata_IQ] [nvarchar](50) NULL,

[WikidataType] [nvarchar](500) NULL,

[WikidataArabicLabel] [nvarchar](max) NULL,

[WikidataEnglishLabel] [nvarchar](max) NULL,

[WikidataAraliases] [nvarchar](max) NULL,

[WikidataEnaliases] [nvarchar](max) NULL,

[WikidataArabicDescrption] [nvarchar](max) NULL,

[WikidataEnglishDescrption] [nvarchar](max) NULL,

[WikidataMainCategory] [nvarchar](max) NULL,

[WikipediaArabicLabel] [nvarchar](max) NULL,

[WikipediaArabicDescrption] [nvarchar](max) NULL,

[Label] [float] NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

GO

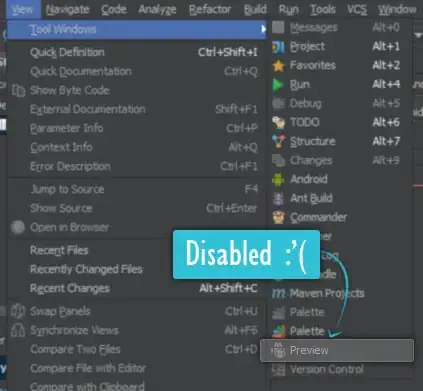

But non-works for the mixed data, here is a screenshot of what the data looks like:

I inserted the data from CSV into SQL table using the following script:

import pyodbc

import pandas as pd

# insert data from csv file into dataframe.

# working directory for csv file: type "pwd" in Azure Data Studio or Linux

# working directory in Windows c:\users\username

df = pd.read_csv("ned_true_new.csv")

df.fillna("", inplace=True)

df.info()

# Some other example server values are

# server = 'localhost\sqlexpress' # for a named instance

# server = 'myserver,port' # to specify an alternate port

#password = 'yourpassword'

#cnxn = pyodbc.connect('DRIVER={SQL Server};SERVER='+server+';DATABASE='+database+';UID='+username)

cnxn = pyodbc.connect("Driver={SQL Server Native Client 11.0};"

"Server=srvr;"

"Database=db;"

"Trusted_Connection=yes;")

cursor = cnxn.cursor()

# Insert Dataframe into SQL Server:

for index, row in df.iterrows():

#print(row)

cursor.execute('''INSERT INTO [dbo].[NED_Corpus_True_Expanded]

([Wikidata_IQ]

,[WikidataType]

,[WikidataArabicLabel]

,[WikidataEnglishLabel]

,WikidataAraliases

,WikidataEnaliases

,[WikidataArabicDescrption]

,[WikidataEnglishDescrption]

,[WikidataMainCategory]

,[WikipediaArabicLabel]

,[WikipediaArabicDescrption]

,[Label])

VALUES (?,?,?,?,?,?,?,?,?,?,?,?)''', row.id, row.type, row.arlabel,row.enlabel,row.araliases, row.enaliases,row.ardescription, row.endescription, row.maincategory, row.arwiki,row.wikiDescrption,row.Label )

cnxn.commit()

cursor.close()