I am currently working on the MNIST dataset. My model overfits the training data, and I want to reduce the overfitting with weight_decay. I am currently using weight_decay = 0.1, which gives bad results: neither my validation loss nor my training loss decreases. I want to experiment with different values of weight_decay so that I can plot weight_decay on the x-axis and validation-set performance on the y-axis. How do I do that? Should I store the values in a list and iterate over them with a for loop? Below is the code I have tried so far.

    import torch
    import torch.nn as nn

    class NN(nn.Module):
        def __init__(self):
            super().__init__()
            # Fully connected classifier: 784 inputs (28x28 image) -> 10 class logits.
            self.layers = nn.Sequential(
                nn.Flatten(),
                nn.Linear(784, 4096),
                nn.ReLU(),
                nn.Linear(4096, 2048),
                nn.ReLU(),
                nn.Linear(2048, 1024),
                nn.ReLU(),
                nn.Linear(1024, 512),
                nn.ReLU(),
                nn.Linear(512, 256),
                nn.ReLU(),
                nn.Linear(256, 128),
                nn.ReLU(),
                nn.Linear(128, 64),
                nn.ReLU(),
                nn.Linear(64, 32),
                nn.ReLU(),
                nn.Linear(32, 16),
                nn.ReLU(),
                nn.Linear(16, 10),
            )

        def forward(self, x):
            return self.layers(x)

    def accuracy_and_loss(model, loss_function, dataloader):
        """Return (accuracy, mean per-batch loss) of `model` over `dataloader`."""
        total_correct = 0
        total_loss = 0
        total_examples = 0
        n_batches = 0
        with torch.no_grad():
            # Iterate over the loader passed in, not a global `testloader`.
            for data in dataloader:
                images, labels = data
                outputs = model(images)
                batch_loss = loss_function(outputs, labels)
                n_batches += 1
                total_loss += batch_loss.item()
                # Predicted class = index of the largest logit.
                _, predicted = torch.max(outputs, dim=1)
                total_examples += labels.size(0)
                total_correct += (predicted == labels).sum().item()
        accuracy = total_correct / total_examples
        mean_loss = total_loss / n_batches
        return (accuracy, mean_loss)

    def define_and_train(model, dataset_training, dataset_test):
        # NOTE: the `model` argument is ignored; a fresh NN is trained for each
        # weight_decay value so that the runs are comparable.
        trainloader = torch.utils.data.DataLoader(dataset_training, batch_size=500, shuffle=True)
        testloader = torch.utils.data.DataLoader(dataset_test, batch_size=500, shuffle=False)
        # Assumption: cross-entropy loss (loss_function was not defined in the original snippet).
        loss_function = nn.CrossEntropyLoss()
        values = [1e-8, 1e-7, 1e-6, 1e-5]
        histories = {}  # weight_decay value -> per-epoch history of that run
        for params in values:
            # Re-create model and optimizer so each run starts from scratch
            # instead of continuing from the previous run's weights.
            model = NN()
            optimizer = torch.optim.Adam(model.parameters(), lr=0.001, weight_decay=params)
            train_acc, val_acc, train_loss, val_loss = [], [], [], []
            for epoch in range(100):
                total_loss = 0
                total_correct = 0
                total_examples = 0
                n_mini_batches = 0
                for i, mini_batch in enumerate(trainloader, 0):
                    images, labels = mini_batch
                    optimizer.zero_grad()
                    outputs = model(images)
                    loss = loss_function(outputs, labels)
                    loss.backward()
                    optimizer.step()
                    n_mini_batches += 1
                    total_loss += loss.item()
                    _, predicted = torch.max(outputs, dim=1)
                    total_examples += labels.size(0)
                    total_correct += (predicted == labels).sum().item()
                epoch_training_accuracy = total_correct / total_examples
                epoch_training_loss = total_loss / n_mini_batches
                epoch_val_accuracy, epoch_val_loss = accuracy_and_loss(model, loss_function, testloader)
                # %g instead of %f, otherwise values such as 1e-8 print as 0.000000.
                print('Params %g Epoch %d loss: %.3f acc: %.3f val_loss: %.3f val_acc: %.3f'
                      % (params, epoch + 1, epoch_training_loss, epoch_training_accuracy,
                         epoch_val_loss, epoch_val_accuracy))
                train_loss.append(epoch_training_loss)
                train_acc.append(epoch_training_accuracy)
                val_loss.append(epoch_val_loss)
                val_acc.append(epoch_val_accuracy)
            histories[params] = {'train_loss': train_loss,
                                 'train_acc': train_acc,
                                 'val_loss': val_loss,
                                 'val_acc': val_acc}
        # Histories of every run, plus the model from the last weight_decay value.
        return (histories, model)
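
A minimal sketch of the plot described at the top (weight_decay on the x-axis, validation performance on the y-axis). It assumes one history dict per weight_decay value, collected into a dict keyed by that value; the names `histories`, `summarize` and `plot_summary` are hypothetical, and taking the final-epoch value as the score for a run is just one possible choice (the best epoch would be another).

```python
def summarize(histories, metric="val_acc"):
    """Return (weight_decays, scores), sorted by weight decay.

    `histories` maps each weight_decay value to a dict of per-epoch lists,
    e.g. {1e-6: {"val_acc": [...], "val_loss": [...]}, ...}.
    The score of a run is the final-epoch value of `metric`.
    """
    decays = sorted(histories)
    scores = [histories[wd][metric][-1] for wd in decays]
    return decays, scores


def plot_summary(histories, metric="val_acc"):
    """Plot final-epoch `metric` against weight_decay on a log-scaled x-axis."""
    # Imported here so that summarize() can be used without matplotlib installed.
    import matplotlib.pyplot as plt

    decays, scores = summarize(histories, metric)
    fig, ax = plt.subplots()
    ax.plot(decays, scores, marker="o")
    # Weight decay values usually span orders of magnitude, hence the log axis.
    ax.set_xscale("log")
    ax.set_xlabel("weight_decay")
    ax.set_ylabel(f"final {metric}")
    return fig
```

Calling `plot_summary(histories).savefig("sweep.png")` would then write the figure to disk. Note that a log-scaled axis cannot show `weight_decay = 0`, so a no-decay baseline would have to be drawn separately, for example as a horizontal line.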

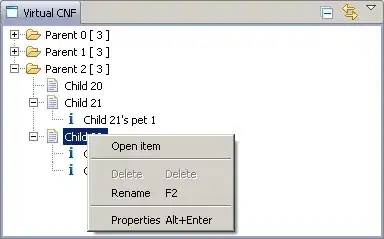

This is the plot that I am getting. Where am I going wrong?