I have two DAGs in my Airflow scheduler that were working in the past. After I had to rebuild the Docker containers running Airflow, they are now stuck in the queued state. In my case the DAGs are triggered via the REST API, so no actual scheduling is involved.
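For reference, a REST trigger of this kind looks roughly like the sketch below. It assumes the Airflow 2.x stable REST API (`POST /api/v1/dags/{dag_id}/dagRuns`) with the basic-auth API backend enabled; the host, credentials and `conf` payload are hypothetical placeholders, and `build_trigger_request` is an illustrative helper, not part of Airflow.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, user, password):
    """Build (but do not send) a POST that creates a new DAG run.

    Assumption: Airflow 2.x stable REST API with basic auth enabled.
    """
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    # The run's configuration is passed in the "conf" field of the JSON body.
    body = json.dumps({"conf": conf}).encode("utf-8")
    req = urllib.request.Request(url, data=body, method="POST")
    req.add_header("Content-Type", "application/json")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    return req


if __name__ == "__main__":
    # Hypothetical host, credentials and conf payload.
    req = build_trigger_request(
        "http://localhost:8080",
        "image_object_detection_dag",
        {"image_path": "/data/example.jpg"},
        "admin",
        "admin",
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to print and compare against what the web UI's trigger button does.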

Since there are quite a few similar posts, I ran through the checklist from this answer to a similar question:

- Do you have the airflow scheduler running?

Yes!

- Do you have the airflow webserver running?

Yes!

- Have you checked that all DAGs you want to run are set to On in the web UI?

Yes, both DAGs are shown in the web UI and no errors are displayed.

- Do all the DAGs you want to run have a start date which is in the past?

Yes, the constructor of both DAGs looks as follows:

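```python
from airflow import DAG
from airflow.utils.dates import days_ago

# `args` (the default_args dict) is defined elsewhere in the DAG file.
dag = DAG(
    dag_id='image_object_detection_dag',
    default_args=args,
    schedule_interval=None,  # no schedule: runs are only created by explicit triggers
    start_date=days_ago(2),
    tags=['helloworld'],
)
```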

- Do all the DAGs you want to run have a proper schedule which is shown in the web UI?

No, I trigger my DAGs manually via the REST API.

- If nothing else works, you can use the web UI to click on the DAG, then on Graph View. Now select the first task and click on Task Instance. In the paragraph Task Instance Details you will see why a DAG is waiting or not running.

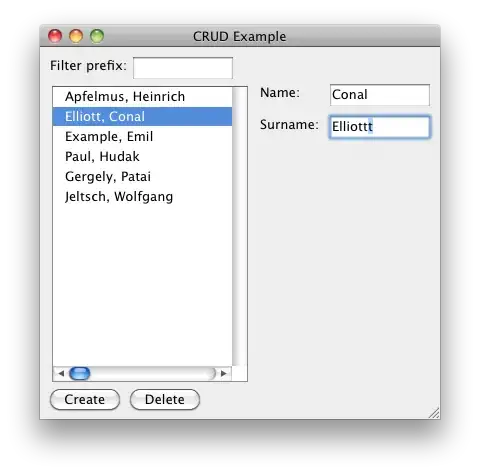

Here is the output of what this paragraph is showing me:
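The per-task state that the last checklist step reads in the web UI can also be pulled for a whole run at once. The sketch below assumes the Airflow 2.x stable REST API endpoint `GET /api/v1/dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances` with basic auth enabled; the helper names are hypothetical, and only the `task_id` and `state` fields of each returned task instance are used.

```python
import base64
import json
import urllib.parse
import urllib.request


def task_instances_url(base_url, dag_id, dag_run_id):
    # Run IDs such as "manual__2021-05-01T10:00:00+00:00" contain ':' and '+',
    # so the run ID is percent-encoded as a single path segment.
    run = urllib.parse.quote(dag_run_id, safe="")
    return f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns/{run}/taskInstances"


def fetch_task_instances(base_url, dag_id, dag_run_id, user, password):
    """Fetch the parsed JSON task-instance list (assumes basic auth)."""
    req = urllib.request.Request(task_instances_url(base_url, dag_id, dag_run_id))
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def summarize_task_states(payload):
    """Group task IDs by state, e.g. to see which tasks are still 'queued'."""
    by_state = {}
    for ti in payload.get("task_instances", []):
        # A task instance that has not been scheduled yet can have state null.
        state = ti.get("state") or "none"
        by_state.setdefault(state, []).append(ti["task_id"])
    return by_state
```

Keeping the summary as a pure function over the parsed response means it can be checked against a saved API response without a running Airflow instance.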

What is the best way to find out why the tasks won't leave the queued state and run?

EDIT:

Out of curiosity, I tried triggering the DAG from the web UI, and now both runs executed (the one triggered from the web UI failed, but that was expected, since no config was set).