I want to get the new points on the new scale for PC1 and PC2.

I calculated the Eigenvalues, Eigenvectors and Contribution.

Now I want to calculate the points on the new scale (scores) to apply the K-Means cluster algorithm on them.

Whenever I try to calculate it by saying z_new = np.dot(v, vectors) (with v = np.cov(x)) I get a wrong score, which is [[14. -2. -2. -1. -0. 0. 0. -0. -0. 0. 0. -0. 0. 0.] for PC1 and [-3. -1. -2. -1. -0. -0. 0. 0. 0. -0. -0. 0. -0. -0.] for PC2. The right score scores (Calculated using SciKit's PCA() function) should be PC1: [ 4 4 -6 3 1 -5] and PC2: [ 0 -3 1 -1 5 -4]

Here is my code:

dataset = pd.read_csv("cands_dataset.csv")

x = dataset.iloc[:, 1:].values

m = x.mean(axis=1);

for i in range(len(x)):

x[i] = x[i] - m[i]

z = x / np.std(x)

v = np.cov(x)

values, vectors = np.linalg.eig(v)

d = np.diag(values)

p = vectors

z_new = np.dot(v, p) # <--- Here is where I get the new scores

z_new = np.round(z_new,0).real

print(z_new)

The result I get:

[[14. -2. -2. -1. -0. 0. 0. -0. -0. 0. 0. -0. 0. 0.]

[-3. -1. -2. -1. -0. -0. 0. 0. 0. -0. -0. 0. -0. -0.]

[-4. -0. 3. 3. 0. 0. 0. 0. 0. -0. -0. 0. -0. -0.]

[ 2. -1. -2. -1. 0. -0. 0. -0. -0. 0. 0. -0. 0. 0.]

[-2. -1. 8. -3. -0. -0. -0. 0. 0. -0. -0. -0. 0. 0.]

[-3. 2. -1. 2. -0. 0. 0. 0. 0. -0. -0. 0. -0. -0.]

[ 3. -1. -3. -1. 0. -0. 0. -0. -0. 0. 0. -0. 0. 0.]

[11. 6. 4. 4. -0. 0. -0. -0. -0. 0. 0. -0. 0. 0.]

[ 5. -8. 6. -1. 0. 0. -0. 0. 0. 0. 0. -0. 0. 0.]

[-1. -1. -1. 1. 0. -0. 0. 0. 0. 0. 0. 0. -0. -0.]

[ 5. 7. 1. -1. 0. -0. -0. -0. -0. 0. 0. -0. -0. -0.]

[12. -6. -1. 2. 0. 0. 0. -0. -0. 0. 0. -0. 0. 0.]

[ 3. 6. 0. 0. 0. -0. -0. -0. -0. 0. 0. 0. -0. -0.]

[ 5. 5. -0. -4. -0. -0. -0. -0. -0. 0. 0. -0. 0. 0.]]

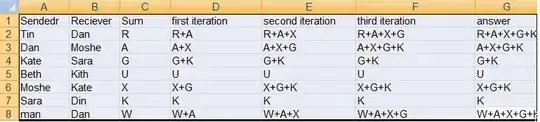

Dataset(Requested by a comment):