I was wondering if I could ask something about running Slurm jobs in parallel. (Please note that I am new to Slurm and Linux and only started using them 2 days ago.)

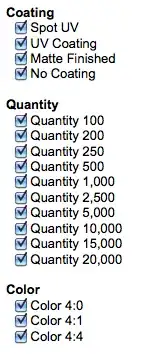

Following the instructions in the picture above (source: https://hpc.nmsu.edu/discovery/slurm/serial-parallel-jobs/), I wrote the following bash script:

```bash
#!/bin/bash
#SBATCH --job-name fmriGLM # to give the job a distinct name
#SBATCH --nodes=1
#SBATCH -t 16:00:00 # Time for running job
#SBATCH -o /scratch/connectome/dyhan316/fmri_preprocessing/FINAL_loop_over_all/output_fmri_glm.o%j # %j: job ID ...
#SBATCH -e /scratch/connectome/dyhan316/fmri_preprocessing/FINAL_loop_over_all/error_fmri_glm.e%j

pwd; hostname; date

#SBATCH --ntasks=30
#SBATCH --mem-per-cpu=3000MB
#SBATCH --cpus-per-task=1

for num in {0..29}
do
    srun --ntasks=1 python FINAL_ARGPARSE_RUN.py --n_division 30 --start_num ${num} &
done

wait
```
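For reference, the loop in the script relies on the plain bash pattern of starting each command in the background with `&` and then calling `wait`, which blocks until every background job has exited. The sketch below runs that same pattern without Slurm so the concurrency can be seen in isolation; `fake_step` is a hypothetical stand-in for the `srun ... python FINAL_ARGPARSE_RUN.py` line, and the temporary directory exists only so the results can be counted.

```shell
#!/bin/bash
# Sketch of the fan-out pattern used in the job script, with no Slurm involved.
# fake_step is a hypothetical stand-in for:
#   srun --ntasks=1 python FINAL_ARGPARSE_RUN.py --n_division 30 --start_num ${num}
set -euo pipefail

outdir=$(mktemp -d)

fake_step() {
    local num=$1
    sleep 1                                           # pretend to do one chunk of work
    echo "chunk ${num} done" > "${outdir}/chunk_${num}.txt"
}

start=$SECONDS
for num in {0..29}; do
    fake_step "${num}" &                              # launch each chunk in the background
done
wait                                                  # block until all 30 background jobs exit
elapsed=$(( SECONDS - start ))

count=$(ls "${outdir}" | wc -l | tr -d ' ')
echo "finished ${count} chunks in ${elapsed}s"        # about 1s if concurrent, about 30s if serial
rm -rf "${outdir}"
```

If the 30 calls run concurrently, the elapsed time is about one second. Under Slurm, each `srun` additionally has to obtain resources for its step, so steps can still end up queued one after another even though bash launched them all at once.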

Then I submitted it with `sbatch test_bash`.

However, when I look at the outputs, it is apparent that only one of the `srun` calls in the script is being executed. Could anyone tell me where I went wrong and how I can fix it?
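One possible cause, offered tentatively: according to the sbatch documentation, sbatch stops processing `#SBATCH` directives once it reaches the first non-comment, non-whitespace line of the script. Here that line is `pwd; hostname; date`, so `--ntasks=30`, `--mem-per-cpu` and `--cpus-per-task` would be silently ignored, and the job would likely fall back to the default of one task with one CPU, which lets only one `srun` step run at a time. The helper below (`late_sbatch_directives` is a hypothetical name made up for this sketch) lists any `#SBATCH` lines that appear after the first command. It approximates sbatch's parsing rather than reproducing it exactly, and the example header omits the `-o`/`-e` lines for brevity.

```shell
#!/bin/bash
# Hypothetical helper: print #SBATCH directives that come after the first
# command in a job script. sbatch ignores such directives, so they never
# reach the allocation. This only approximates sbatch's own parsing.
late_sbatch_directives() {
    local script=$1
    awk '
        /^[ \t]*$/ { next }                  # blank lines do not count as commands
        /^[ \t]*#/ {                         # shebang, plain comment or #SBATCH line
            if (seen_cmd && $0 ~ /^#SBATCH/) print
            next
        }
        { seen_cmd = 1 }                     # first real command has been seen
    ' "$script"
}

# Example: the header of the job script above (-o/-e lines omitted).
job=$(mktemp)
cat > "$job" <<'EOF'
#!/bin/bash
#SBATCH --job-name fmriGLM
#SBATCH --nodes=1
#SBATCH -t 16:00:00
pwd; hostname; date
#SBATCH --ntasks=30
#SBATCH --mem-per-cpu=3000MB
#SBATCH --cpus-per-task=1
EOF

late=$(late_sbatch_directives "$job")
echo "$late"
rm -f "$job"
```

If this is the cause, moving `pwd; hostname; date` below the last `#SBATCH` line should let all three directives take effect.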

**Update:** when I look at the error file, I get the following: `srun: Job 43969 step creation temporarily disabled, retrying`. I searched the internet, and it says this could be caused by not specifying the memory, so that there is not enough memory for the second job. But I thought I had already specified the memory with `--mem-per-cpu=3000MB`?
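As a rough sanity check on the memory side: the script requests `--mem-per-cpu=3000MB`, and Slurm scales a per-CPU memory request by the number of CPUs allocated, so, assuming the `#SBATCH` lines are actually read, the job as a whole asks for ntasks × cpus-per-task × mem-per-cpu. The variables below simply mirror the values in the script. One caveat, hedged because it depends on the Slurm version and site configuration: an `srun` step launched without its own memory option may be given all of the job's memory, which would block the next step even when CPUs are free, so passing `--mem-per-cpu` to each `srun` as well is sometimes suggested.

```shell
#!/bin/bash
# Values copied from the #SBATCH lines of the job script.
ntasks=30
cpus_per_task=1
mem_per_cpu_mb=3000

total_cpus=$(( ntasks * cpus_per_task ))
total_mem_mb=$(( total_cpus * mem_per_cpu_mb ))
per_step_mb=$(( cpus_per_task * mem_per_cpu_mb ))   # share of one --ntasks=1 step

echo "CPUs requested:      ${total_cpus}"          # 30
echo "Memory for the job:  ${total_mem_mb} MB"     # 90000 MB
echo "Memory per step (if each step gets only its share): ${per_step_mb} MB"  # 3000 MB
```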

**Update:** I have tried changing the code as suggested in "Why are my slurm job steps not launching in parallel?", but it still didn't work.

**Potentially relevant information:** our node has about 96 cores, which seems odd compared to tutorials that say a node has only about 4 cores.
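With about 96 cores per node, 30 single-CPU tasks should fit on one node, so `--nodes=1` by itself is unlikely to be the limit. To see what the job was actually given, the batch script can print a few of the environment variables Slurm sets for a job: `SLURM_JOB_NUM_NODES`, `SLURM_NTASKS` and `SLURM_CPUS_ON_NODE`. `SLURM_NTASKS` may be unset when no task count was requested. The `node_cores=96` value is taken from this post and is an assumption about this particular cluster.

```shell
#!/bin/bash
# Print what Slurm actually allocated; outside a Slurm job these show "unset".
echo "SLURM_JOB_NUM_NODES=${SLURM_JOB_NUM_NODES:-unset}"
echo "SLURM_NTASKS=${SLURM_NTASKS:-unset}"
echo "SLURM_CPUS_ON_NODE=${SLURM_CPUS_ON_NODE:-unset}"

# Does the request fit on a single node? (96 cores is taken from the post.)
node_cores=96
ntasks=30
cpus_per_task=1
needed=$(( ntasks * cpus_per_task ))
if (( needed <= node_cores )); then
    fits=yes
else
    fits=no
fi
echo "need ${needed} of ${node_cores} cores on one node: fits=${fits}"
```

Placed after the `#SBATCH` block, `SLURM_NTASKS=unset` together with a very small `SLURM_CPUS_ON_NODE` would suggest that the task and CPU directives were not applied.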

Thank you!!