I am trying to compute the Euclidean distance between pairs of rows. My DataFrame has around 13,000 rows, and I need to find the Euclidean distance from each row to all 13,000 rows and then get similarity scores from those distances. Running the code takes a very long time (more than 24 hours).

Below is my code:

```python
import pandas as pd
from tqdm import tqdm

# Start with an empty result DataFrame
df_similar = pd.DataFrame()

print(df_similar)

# 'i' refers to every id in the dataframe
# len(df_distance) is about 13000
for i in tqdm(range(len(df_distance))):

    df_50 = pd.DataFrame(columns=['id', 'id_match', 'similarity_distance'])

    # Start the comparison from row i itself. "index" wraps back to 0 when it
    # reaches the last row, so row i is still compared with every row.
    index = i

    # Compare row i with all ~13000 rows. This is the expensive part, because
    # it runs ~13000 times for each of the ~13000 values of i.
    for j in range(len(df_distance)):

        # "a" is the row (id) we are comparing from
        a = df_distance.iloc[i, 2:]

        # "b" is the row (id) we are comparing against
        b = df_distance.iloc[index, 2:]

        value = euclidean_dist(a, b)

        # Temporary dict holding one result row
        row = {
            'id': df_distance['id'].iloc[i],
            'id_match': df_distance['id'].iloc[index],
            'similarity_distance': value,
        }

        # DataFrame.append was removed in pandas 2.0; pd.concat does the same job
        df_50 = pd.concat([df_50, pd.DataFrame([row])], ignore_index=True)

        # When "index" reaches the last row, reset it to 0 so the comparison
        # of "b" with "a" continues from the start of the DataFrame.
        if index == len(df_distance) - 1:
            index = 0
        else:
            index += 1

    # Add the rows in "df_50" to "df_similar" once per value of "i"
    df_similar = pd.concat([df_similar, df_50], ignore_index=True)
```

I suspect most of the time is spent in the nested `for` loops.
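One possible way to remove both loops is to convert the feature columns to a NumPy array once and let `sklearn.metrics.pairwise.euclidean_distances` compute the whole distance matrix in a single call. This is a minimal sketch, assuming (as in the loop in the question) that the features are `df_distance.iloc[:, 2:]` and that there is an `id` column; the function name `all_pairs_distances` is hypothetical. It produces the same `id`, `id_match`, `similarity_distance` pairs as the loop, ordered by `(id, id_match)` instead of the wrap-around order.

```python
import numpy as np
import pandas as pd
from sklearn.metrics.pairwise import euclidean_distances


def all_pairs_distances(df_distance: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: one output row per (id, id_match) pair.

    Assumes the feature columns are everything from position 2 onwards
    and that the DataFrame has an 'id' column.
    """
    # Convert the scaled feature columns to one float array, once.
    X = df_distance.iloc[:, 2:].to_numpy(dtype=float)
    ids = df_distance["id"].to_numpy()

    # A single call computes the full n x n distance matrix.
    D = euclidean_distances(X)

    n = len(ids)
    return pd.DataFrame({
        "id": np.repeat(ids, n),           # id_0 n times, then id_1 n times, ...
        "id_match": np.tile(ids, n),       # id_0..id_{n-1}, repeated n times
        "similarity_distance": D.ravel(),  # row-major, so D[i, j] is at i * n + j
    })
```

For 13,000 rows the distance matrix alone is roughly 13000 * 13000 * 8 bytes, about 1.35 GB, and the long result table has about 169 million rows, so memory, not speed, is likely to become the limiting factor with this approach.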

This is the Euclidean distance function I am using in my code:

```python
from sklearn.metrics.pairwise import euclidean_distances


def euclidean_dist(a, b):
    # Build a 2 x 2 distance matrix for [a, b] and take the off-diagonal entry
    euclidean_val = euclidean_distances([a, b])
    value = euclidean_val[0][1]
    return value
```
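For a single pair of vectors, the same value can be computed directly as the norm of their difference, without building a 2 x 2 matrix. This is a sketch; `euclidean_dist_numpy` is a hypothetical name. `euclidean_distances` validates and converts its input on every call, so that overhead is likely a significant share of the per-call cost here. Calling either function about 169 million times from Python will still be slow; the larger gain comes from computing distances for many rows per call.

```python
import numpy as np
from sklearn.metrics.pairwise import euclidean_distances


def euclidean_dist_numpy(a, b):
    """Hypothetical per-pair alternative: ||a - b|| for two 1-D vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.linalg.norm(a - b))


a = [0.0, 3.0]
b = [4.0, 0.0]
print(euclidean_dist_numpy(a, b))          # 5.0
print(euclidean_distances([a, b])[0][1])   # same distance via scikit-learn
```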

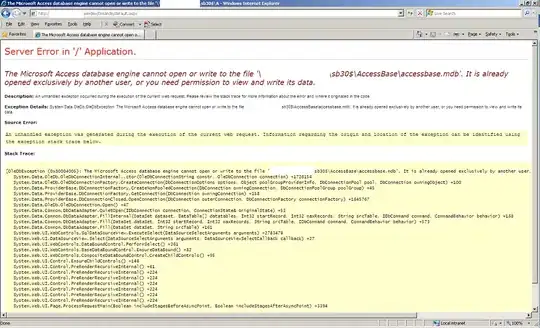

Sample `df_distance` data. Note: in the image, the values from column `locality` to the last column are scaled, and only these columns are used to calculate the distance.
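A full 13,000 x 13,000 float64 distance matrix needs roughly 1.35 GB, and a table with one row per pair has about 169 million rows. If, as an assumption, only the k closest matches per id are needed, the distances can be computed one block of rows at a time so memory stays bounded. This is a minimal sketch; `top_k_matches`, `k=50`, and `chunk_size=1000` are hypothetical choices, not taken from the question, and it again assumes the scaled features are `df_distance.iloc[:, 2:]` with an `id` column.

```python
import numpy as np
import pandas as pd
from sklearn.metrics.pairwise import euclidean_distances


def top_k_matches(df_distance, k=50, chunk_size=1000):
    """Hypothetical helper: for every id, keep its k nearest ids (itself included)."""
    X = df_distance.iloc[:, 2:].to_numpy(dtype=float)
    ids = df_distance["id"].to_numpy()
    n = len(ids)
    k = min(k, n)
    frames = []
    for start in range(0, n, chunk_size):
        stop = min(start + chunk_size, n)
        # Distances from a block of rows to all rows: shape (stop - start, n)
        D = euclidean_distances(X[start:stop], X)
        # Column indices of the k smallest distances in each row (unordered)
        idx = np.argpartition(D, k - 1, axis=1)[:, :k]
        # Sort those k candidates by distance, nearest first
        d = np.take_along_axis(D, idx, axis=1)
        order = np.argsort(d, axis=1)
        idx = np.take_along_axis(idx, order, axis=1)
        d = np.take_along_axis(d, order, axis=1)
        frames.append(pd.DataFrame({
            "id": np.repeat(ids[start:stop], k),
            "id_match": ids[idx.ravel()],
            "similarity_distance": d.ravel(),
        }))
    return pd.concat(frames, ignore_index=True)
```

Here each block holds only `chunk_size * n` distances at a time (about 104 MB for 1,000 x 13,000 float64 values), and the result has `n * k` rows instead of `n * n`.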