Good day. I'm trying to extract both the printed and the handwritten text from the cheque leaf below:

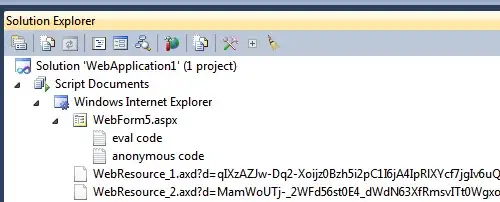

Here is the image after preprocessing; I used the code below:

import cv2

import pytesseract

import numpy as np

img = cv2.imread('Images/cheque_leaf.jpg')

# Rescale the image (recommended when working with images below 300 DPI)

img = cv2.resize(img, None, fx=1.2, fy=1.2, interpolation=cv2.INTER_CUBIC)

h, w = img.shape[:2]

# OpenCV loads images in BGR order. Convert to grayscale here,

# since Otsu thresholding needs a single-channel image.

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY+cv2.THRESH_OTSU)[1] # perform Otsu thresholding

thresh = cv2.rectangle(thresh, (0, 0), (w, h), (0, 0, 0), 2) # draw a 2-pixel black border around the whole image

# 2x2 rectangular structuring element for the erosion below.

# On dark text over a white background, erosion thickens the characters.

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (2,2))

# The function erodes the source image using the specified structuring element that determines

# the shape of a pixel neighborhood over which the minimum is taken

erode = cv2.erode(thresh, kernel, iterations = 1)

# To extract the text

custom_config = r'--oem 3 --psm 6'

text = pytesseract.image_to_string(erode, config=custom_config)

print(text)

I then use the pytesseract.image_to_string() method to convert the image to text, but the output I get is irrelevant. From the image above I want to extract the date, branch, payee, amount (in both figures and words), and the digital signature name followed by the account number.

Are there any OCR techniques that can solve this by extracting exactly the fields listed above? Thanks in advance.
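One technique that may help is to OCR each field separately rather than the whole cheque in one pass. The sketch below crops one region per field and runs Tesseract on each crop with `--psm 7` (single text line). The `FIELD_BOXES` coordinates, the field names, and the helpers `to_pixel_box` and `ocr_fields` are hypothetical placeholders for illustration; the real boxes would have to be measured on the actual cheque layout. Tesseract's stock models are trained mainly on printed text, so the handwritten fields may still come out poorly.

```python
# Hypothetical field layout: each box is (left, top, right, bottom) given as
# fractions of the image width/height. These values are placeholders only,
# not measurements taken from the cheque in the question.
FIELD_BOXES = {
    "date": (0.70, 0.05, 0.98, 0.20),
    "payee": (0.10, 0.25, 0.90, 0.40),
    "amount_words": (0.10, 0.40, 0.70, 0.60),
    "amount_figures": (0.72, 0.45, 0.98, 0.60),
    "account_number": (0.10, 0.62, 0.50, 0.75),
}


def to_pixel_box(box, width, height):
    """Convert a fractional (left, top, right, bottom) box to pixel coordinates."""
    left, top, right, bottom = box
    return (int(left * width), int(top * height),
            int(right * width), int(bottom * height))


def ocr_fields(image, boxes=FIELD_BOXES):
    """Run Tesseract on each cropped field of a preprocessed (NumPy) image."""
    # Imported lazily so to_pixel_box can be used without Tesseract installed.
    import pytesseract

    height, width = image.shape[:2]
    results = {}
    for name, box in boxes.items():
        x1, y1, x2, y2 = to_pixel_box(box, width, height)
        crop = image[y1:y2, x1:x2]  # NumPy slicing is rows (y) first, then columns (x)
        # --psm 7 asks Tesseract to treat the crop as a single text line.
        text = pytesseract.image_to_string(crop, config="--oem 3 --psm 7")
        results[name] = text.strip()
    return results
```

With the preprocessing above, this could be called as `ocr_fields(erode)`. A field that spans more than one line, such as the amount in words, would probably need `--psm 6` instead of `--psm 7`.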