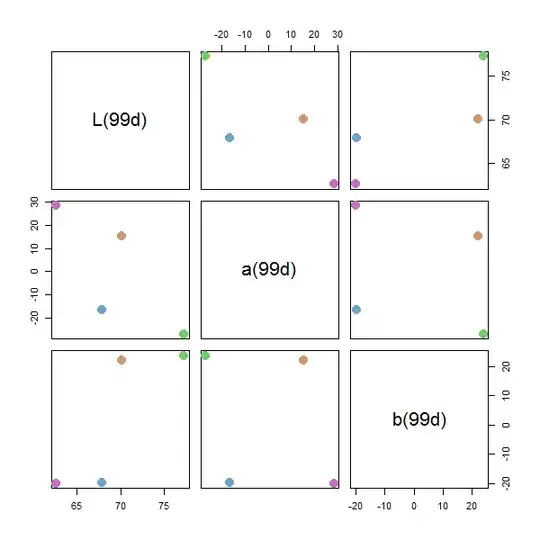

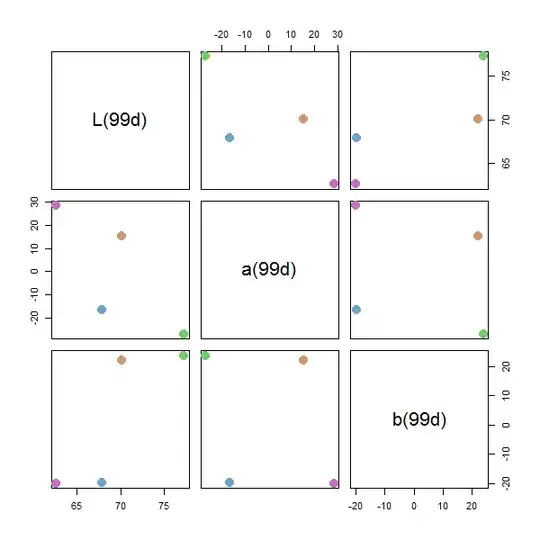

I would like to chime in with a different approach. My basic idea is to convert the image from BGR to LAB color space and isolate the blue regions there. This can be done by focusing on the b-component of LAB, since it represents color along the yellow-to-blue axis.

Code

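    import cv2
    import numpy as np

    # IMREAD_UNCHANGED keeps the alpha channel, if the PNG has one
    img = cv2.imread('image_path', cv2.IMREAD_UNCHANGED)
    lab = cv2.cvtColor(img, cv2.COLOR_BGR2LAB)
    b_component = lab[:,:,2]  # yellow-to-blue axis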

(Note: In the b-component, the blue regions are noticeably darker than the rest of the image, so they can be isolated easily.)

    # Otsu picks the threshold automatically, so the 127 passed here is ignored
    th = cv2.threshold(b_component,127,255,cv2.THRESH_BINARY_INV+cv2.THRESH_OTSU)[1]

After thresholding, however, the image contains some unwanted white pixels around the regions containing numeric text, which we do not want to include.

To avoid the unwanted regions I tried out the following:

- Find contours above a certain area and draw each of them on a single-channel mask

- Mask out rectangular bounding box area for each contour.

- Locate pixels within that bounding box area that are 255 (white) on the threshold image

- Change those pixel values to white on the original PNG image.

In code below:

    # finding contours
    contours = cv2.findContours(th, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    # findContours returns 2 values in OpenCV 2.x/4.x and 3 values in 3.x
    contours = contours[0] if len(contours) == 2 else contours[1]

    # initialize a mask of image shape and make a copy of the original image
    black = np.zeros((img.shape[0], img.shape[1]), np.uint8)
    res = img.copy()

    # draw only contours above a certain area on the mask (filled, thickness=-1)
    for c in contours:
        area = cv2.contourArea(c)
        if int(area) > 200:
            cv2.drawContours(black, [c], 0, 255, -1)

In the resulting mask, every pixel enclosed by a contour is white. However, the pixels within the word "bar" should not be included.

To isolate only the region with blue pixels, we perform a bitwise AND with the threshold image th:

    mask = cv2.bitwise_and(th, th, mask = black)

This gives the mask we actually want. The regions that are white in mask are made white in res, the copy of the original image:

    # one value per channel of the 4-channel (BGRA) image
    res[mask == 255] = (255, 255, 255, 255)
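The assignment relies on NumPy boolean indexing: a 2D boolean array selects whole pixels of the image. A small sketch with made-up data (`img_demo`, `mask_demo`, `res_demo` are hypothetical names), assuming a 4-channel image as in the answer:

```python
import numpy as np

# Hypothetical 4x4 BGRA image: opaque blue everywhere.
img_demo = np.zeros((4, 4, 4), np.uint8)
img_demo[:] = (255, 0, 0, 255)

# Mask marking the top-left 2x2 block.
mask_demo = np.zeros((4, 4), np.uint8)
mask_demo[:2, :2] = 255

# Paint the masked pixels white on a copy, leaving the original untouched.
res_demo = img_demo.copy()
res_demo[mask_demo == 255] = (255, 255, 255, 255)

print(res_demo[0, 0], res_demo[3, 3])
```

If the image was loaded with three channels instead, the tuple needs three values, since a 4-tuple will not broadcast onto 3-channel pixels.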

But the above image is not perfect. There are some regions still visible around the edges of the word foo.

To fix this, we dilate the mask and repeat:

    res = img.copy()
    kernel_ellipse = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3,3))
    dilate = cv2.dilate(mask, kernel_ellipse, iterations=1)
    res[dilate == 255] = (255, 255, 255, 255)

Note: Using the A and B components of LAB color space, you can isolate different colors quite easily, without having to spend time searching for a suitable color range. Colors with similar shading and saturation can also be segmented.