A good website on how to fit longitudinal and growth models using lme4 is https://rpsychologist.com/r-guide-longitudinal-lme-lmer

As Robert pointed out, and as demonstrated on the website, it is often useful to fit an interaction between "time" and "group" (e.g., treatment vs. control), to see how the outcome changes for each group over time. You can see this change by looking at the coefficients, but it's usually easier to plot (adjusted) predictions.

Here's a toy example:

library(parameters)

library(datawizard)

library(lme4)

library(ggeffects)

data("qol_cancer")

# filter two time points

qol_cancer <- data_filter(qol_cancer, time %in% c(1, 2))

# create fake treatment/control variable

set.seed(123)

treatment <- sample(unique(qol_cancer$ID), size = length(unique(qol_cancer$ID)) / 2, replace = FALSE)

qol_cancer$treatment <- 0

qol_cancer$treatment[qol_cancer$ID %in% treatment] <- 1

qol_cancer$time <- as.factor(qol_cancer$time)

qol_cancer$treatment <- factor(qol_cancer$treatment, labels = c("control", "treatment"))

m <- lmer(QoL ~ time * treatment + (1 + time | ID),

data = qol_cancer,

control = lmerControl(check.nobs.vs.nRE = "ignore"))

model_parameters(m)

#> # Fixed Effects

#>

#> Parameter | Coefficient | SE | 95% CI | t(368) | p

#> ----------------------------------------------------------------------------------------

#> (Intercept) | 70.74 | 2.15 | [66.52, 74.97] | 32.90 | < .001

#> time [2] | 0.27 | 2.22 | [-4.10, 4.64] | 0.12 | 0.905

#> treatment [treatment] | 4.88 | 3.04 | [-1.10, 10.86] | 1.60 | 0.110

#> time [2] * treatment [treatment] | 1.95 | 3.14 | [-4.23, 8.13] | 0.62 | 0.535

#>

#> # Random Effects

#>

#> Parameter | Coefficient

#> ---------------------------------------

#> SD (Intercept: ID) | 15.14

#> SD (time2: ID) | 7.33

#> Cor (Intercept~time2: ID) | -0.62

#> SD (Residual) | 14.33

#>

#> Uncertainty intervals (equal-tailed) and p-values (two-tailed) computed

#> using a Wald t-distribution approximation.

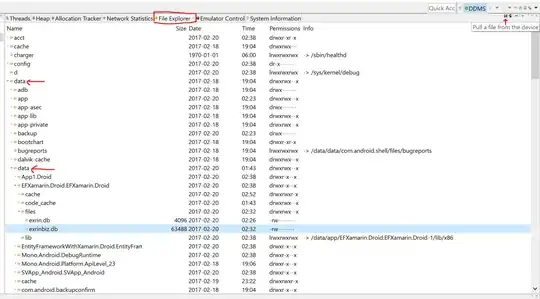

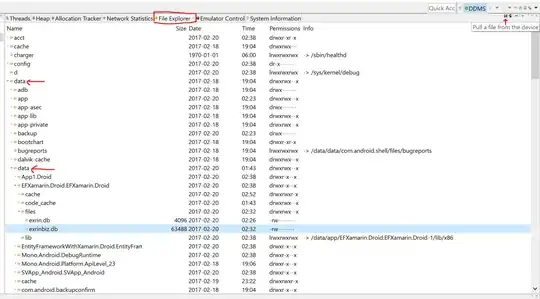

ggpredict(m, c("time", "treatment")) |> plot()

Regarding the statistical significance of the interaction term: the p-values from the summary might be misleading. If you're really interested in statistically significant differences either between time points, or between groups (treatment vs. control), it is recommended to calculate pairwise contrasts including p-values. You can do this, e.g., with the emmeans-package.

library(emmeans)

emmeans(m, c("time", "treatment")) |> contrast(method = "pairwise", adjust = "none")

#> contrast estimate SE df t.ratio p.value

#> time1 control - time2 control -0.266 2.22 186 -0.120 0.9049

#> time1 control - time1 treatment -4.876 3.04 186 -1.604 0.1105

#> time1 control - time2 treatment -7.092 2.89 316 -2.453 0.0147

#> time2 control - time1 treatment -4.610 2.89 316 -1.594 0.1118

#> time2 control - time2 treatment -6.826 2.73 186 -2.497 0.0134

#> time1 treatment - time2 treatment -2.216 2.22 186 -0.997 0.3199

#>

#> Degrees-of-freedom method: kenward-roger

Created on 2022-05-22 by the reprex package (v2.0.1)

Here you can see, e.g., that treatment and control do not differ regarding their QoL at time point 1, but they do at time point 2.