I have been working on a project that involves extracting text from an image. From my research, Tesseract is one of the best OCR libraries available, so I decided to use it along with OpenCV, which I need for image manipulation.

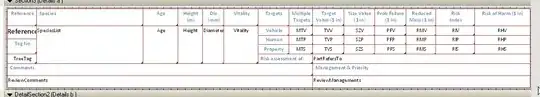

I have been experimenting a lot with the Tesseract engine, but it does not seem to give the results I expect. I have attached the image as a reference. The output I got is:

1] =501 [

The expected output, however, is:

TM10-50%L

What I have done so far:

- Removing noise (Gaussian blur)

- Applying an adaptive threshold

- Sending the result to the Tesseract OCR engine
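To make the adaptive-threshold step more concrete, here is a rough NumPy sketch of *mean* adaptive thresholding with `THRESH_BINARY` semantics. It is an approximation: the snippet uses the Gaussian-weighted variant (`ADAPTIVE_THRESH_GAUSSIAN_C`), and the border handling here (edge replication) is an assumption. The function name `adaptive_mean_threshold` is hypothetical. Each pixel becomes white if it is brighter than the mean of its `block_size` x `block_size` neighbourhood minus `c`, otherwise black.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def adaptive_mean_threshold(gray, block_size=5, c=3, max_value=255):
    """Hypothetical sketch of mean adaptive thresholding.

    A pixel is set to max_value if it is greater than
    (mean of its block_size x block_size neighbourhood) - c, else 0.
    """
    if block_size % 2 == 0 or block_size < 3:
        raise ValueError("block_size must be odd and >= 3")
    gray = np.asarray(gray, dtype=np.float64)
    r = block_size // 2
    # Replicate edge pixels so border pixels get a full neighbourhood
    padded = np.pad(gray, r, mode="edge")
    # One block_size x block_size window per original pixel: shape (H, W, bs, bs)
    windows = sliding_window_view(padded, (block_size, block_size))
    local_mean = windows.mean(axis=(-2, -1))
    return np.where(gray > local_mean - c, max_value, 0).astype(np.uint8)


# A white 20x20 image with a thick 9x9 black square ("stroke")
img = np.full((20, 20), 255, dtype=np.uint8)
img[5:14, 5:14] = 0
small = adaptive_mean_threshold(img, block_size=5, c=3)
large = adaptive_mean_threshold(img, block_size=21, c=3)
print(small[9, 9], large[9, 9])  # centre of the square with each block size
```

With a 5x5 block, the neighbourhood of a pixel deep inside a stroke wider than the block is uniformly dark, so the pixel passes the test and turns white; only the stroke outlines stay black. The Gaussian-weighted variant behaves the same way on a uniform neighbourhood, so a block size that is small relative to the character strokes can produce hollow, outline-only characters, while a larger block keeps the stroke filled.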

Are there any other steps I could add to improve the results?

Thanks in advance.

Here is a snippet of the code:

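```python
import sys

import cv2
import numpy as np
import pytesseract
from PIL import Image

if __name__ == '__main__':
    if len(sys.argv) < 2:
        print('Usage: python ocr_simple.py image.jpg')
        sys.exit(1)

    # Read image path from command line
    imPath = sys.argv[1]

    # Load the image as grayscale (same as passing the flag 0)
    gray = cv2.imread(imPath, cv2.IMREAD_GRAYSCALE)
    if gray is None:
        print(f'Could not read image: {imPath}')
        sys.exit(1)

    # Blur to suppress noise before binarizing
    blur = cv2.GaussianBlur(gray, (9, 9), 0)

    # Binarize with a Gaussian-weighted adaptive threshold
    # (block size 5, constant 3)
    thresh = cv2.adaptiveThreshold(blur, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                   cv2.THRESH_BINARY, 5, 3)

    # pytesseract accepts NumPy arrays directly
    text = pytesseract.image_to_string(thresh)
    print(text)
```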
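Since the expected text, TM10-50%L, is a single line, one variation that may be worth trying (untested here) is telling Tesseract so through its page segmentation mode, `--psm 7` ("treat the image as a single text line"), and optionally restricting the character set with the `tessedit_char_whitelist` variable. Both are passed through the `config` argument of `pytesseract.image_to_string`. The helper names `build_tesseract_config` and `ocr_single_line` are hypothetical, and whether the whitelist is honoured may depend on the Tesseract version and engine in use.

```python
def build_tesseract_config(psm=7, whitelist=None):
    """Build a Tesseract option string for pytesseract's `config` argument.

    psm=7 asks Tesseract to treat the image as a single line of text.
    If `whitelist` is given, recognition is restricted to those characters
    (assumption: supported by the installed Tesseract build).
    """
    parts = [f"--psm {psm}"]
    if whitelist:
        parts.append(f"-c tessedit_char_whitelist={whitelist}")
    return " ".join(parts)


def ocr_single_line(image, whitelist=None):
    """Run Tesseract on `image` (e.g. the thresholded array) as one text line."""
    # Imported here so the config helper above has no hard dependency
    import pytesseract

    config = build_tesseract_config(psm=7, whitelist=whitelist)
    return pytesseract.image_to_string(image, config=config)
```

In the script above, this would replace the final call with something like `ocr_single_line(thresh, whitelist="ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789-%")`.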

Images are attached: the first is the original image, and the second is what was fed to Tesseract.