I'm struggling to re-implement and reproduce the results of one of the unsupervised anomaly detection experiments shown below:

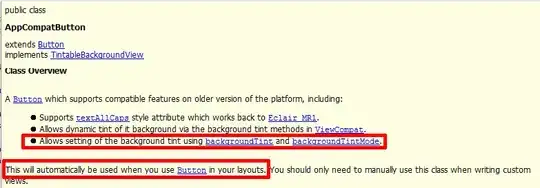

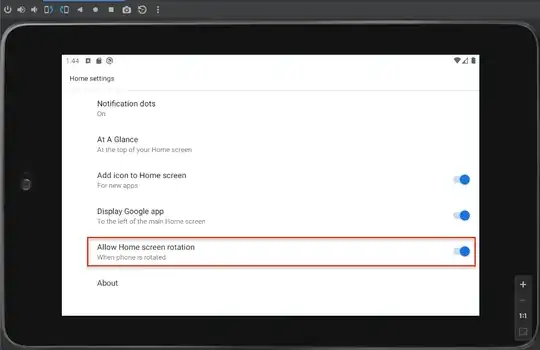

Image credit: *Histogram-based Outlier Score (HBOS): A fast Unsupervised Anomaly Detection Algorithm* by M. Goldstein & A. Dengel.


The authors of the paper use three datasets, which can easily be found in this source, along with some information in the Metadata tab.

```python
#!pip install pyod
from functions import auc_plot  # auc_plot is defined in functions.py (shown below)
import numpy as np

list_of_models = ['HBOS_pyod', 'KNN_pyod', 'KNN_sklearn', 'LOF_pyod', 'LOF_sklearn']
k = [5, 10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
#k = [3,5,6,7, 10, 20, 30, 40, 50, 60, 70]
#k = [3,5,6,7, 10,15, 25, 35, 45, 55, 65, 78, 87, 95, 99]
#k = np.arange(5, 100, step=10)
name_target = 'target'
contamination = 0.4
number_of_unique = None

# df is the DataFrame built by the dataset-loading code further below
auc_plot(df, name_target, contamination, number_of_unique, list_of_models, k)
```
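The call above hard-codes `contamination = 0.4`. In both sklearn and pyod, `contamination` only sets the threshold that turns scores into 0/1 labels; the underlying outlier scores do not depend on it. The sketch below illustrates this with sklearn's `LocalOutlierFactor` on the sklearn breast cancer data, assuming malignant (sklearn label 0) is the anomaly class and using unscaled features and `n_neighbors=20` purely for illustration. The AUC computed from scores is identical for both contamination values, while the number of flagged points (and any AUC computed from hard labels) changes.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import roc_auc_score
from sklearn.neighbors import LocalOutlierFactor

X, y = load_breast_cancer(return_X_y=True)
# sklearn encodes 0 = malignant, 1 = benign; assumption: malignant is the anomaly class (1)
y_anomaly = (y == 0).astype(int)

results = {}
for contamination in (0.1, 0.4):
    lof = LocalOutlierFactor(n_neighbors=20, contamination=contamination)
    labels = lof.fit_predict(X)               # +1 = inlier, -1 = outlier; threshold depends on contamination
    scores = -lof.negative_outlier_factor_    # LOF value: higher = more anomalous, independent of contamination
    results[contamination] = {
        "n_flagged": int((labels == -1).sum()),
        "auc_scores": roc_auc_score(y_anomaly, scores),
        "auc_labels": roc_auc_score(y_anomaly, (labels == -1).astype(int)),
    }

for c, r in results.items():
    print(c, r)
```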

I loaded the breast cancer dataset from sklearn and applied these outlier detection algorithms from different packages (sklearn and pyod, e.g. HBOS), but I still can't reproduce the output shown in the pictures above.
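For comparison with pyod's `HBOS`, here is a minimal sketch of static-bin-width HBOS as described in the paper: one histogram per feature, normalized so the tallest bin has height 1, and an outlier score equal to the sum over features of log(1 / bin height). It is not pyod's implementation. Assumptions: the detector is fitted and scored on the whole dataset (an unsupervised evaluation with no train/test split), and ROC AUC is computed from the continuous scores rather than from 0/1 labels. With hard 0/1 labels, `roc_auc_score` only sees a single operating point, so the result equals the balanced accuracy at that threshold.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import roc_auc_score


def hbos_scores(X, n_bins=10):
    """Minimal static-bin-width HBOS sketch: sum over features of log(1 / normalized bin height)."""
    X = np.asarray(X, dtype=float)
    scores = np.zeros(X.shape[0])
    for col in X.T:
        counts, edges = np.histogram(col, bins=n_bins)
        heights = counts / counts.max()  # normalize so the tallest bin has height 1
        # Digitizing against the inner edges maps each value to the same bin np.histogram used,
        # so every scored value falls in a bin with count >= 1 (fit and score on the same data)
        idx = np.digitize(col, edges[1:-1])
        scores += np.log(1.0 / heights[idx])
    return scores


X, y = load_breast_cancer(return_X_y=True)
y_anomaly = (y == 0).astype(int)  # assumption: malignant (sklearn label 0) = anomaly

# AUC from continuous scores for a few bin counts
aucs = {b: roc_auc_score(y_anomaly, hbos_scores(X, n_bins=b)) for b in (5, 10, 20, 50)}
print(aucs)
```

The same idea applies to pyod: passing `clf.decision_function(...)` scores (already computed in `auc_plot` as `y_test_scores_hbos`) to `roc_auc_score`, instead of the thresholded `predictions`, gives a ranking-based AUC.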

I'm using the following function, saved in a file named `functions.py`, for plotting:

```python
# functions.py
# Module-level imports: functions.py does not see the notebook's global imports
import time

import matplotlib.pyplot as plt
import numpy as np
from sklearn.model_selection import train_test_split


def auc_plot(df, name_target, contamination, number_of_unique, list_of_models, k):
    from pyod.models.hbos import HBOS
    from pyod.models.knn import KNN
    from pyod.models.iforest import IForest
    from pyod.models.lof import LOF
    from sklearn.neighbors import KNeighborsClassifier
    from xgboost import XGBClassifier
    from sklearn.neighbors import LocalOutlierFactor
    from sklearn.svm import OneClassSVM
    from sklearn import metrics

    orig = df.copy()
    #bins = list(range(0,k+1))
    predictions_list = []

    if contamination > 0.5:
        contamination = 0.5

    X, y = df.loc[:, df.columns != name_target], df[name_target]
    seed = 120
    test_size = 0.3
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=test_size, random_state=seed, stratify=y)
    #print('X_test:',X_test.shape,'y_test:',y_test.shape)

    #*************************************
    if 'HBOS_pyod' in list_of_models:
        predictions_1_j = []
        auc_1_j = []
        for j in range(len(k)):
            model_name_1 = 'HBOS_pyod'
            # train HBOS detector
            clf_name = 'HBOS_pyod'
            clf = HBOS(n_bins=k[j], contamination=contamination)
            #start = time.time()
            clf.fit(X_train)
            # get the prediction on the test data
            y_test_pred = clf.predict(X_test)  # outlier labels (0 or 1)
            y_test_scores_hbos = clf.decision_function(X_test)  # outlier scores
            predictions = [round(value) for value in y_test_pred]
            for i in range(0, len(predictions)):
                if predictions[i] > 0.5:
                    predictions[i] = 1
                else:
                    predictions[i] = 0
            predictions_1_j.append(predictions)
            # #AUC score
            auc_1 = metrics.roc_auc_score(y_test, predictions)
            auc_1_j.append(auc_1)
        #print('auc_1_j', auc_1_j)

    #***********************************************
    if 'KNN_pyod' in list_of_models:
        from pyod.models.knn import KNN
        predictions_2_j = []
        auc_2_j = []
        for j in range(len(k)):
            model_name_2 = 'KNN_pyod'
            # train kNN detector
            clf_name = 'KNN_pyod'
            clf = KNN(contamination=contamination, n_neighbors=k[j])
            clf.fit(X_train)
            # get the prediction on the test data
            y_test_pred = clf.predict(X_test)  # outlier labels (0 or 1)
            y_test_scores_knn = clf.decision_function(X_test)  # outlier scores
            predictions = [round(value) for value in y_test_pred]
            for i in range(0, len(predictions)):
                if predictions[i] > 0.5:
                    predictions[i] = 1
                else:
                    predictions[i] = 0
            predictions_2_j.append(predictions)
            # #AUC score
            auc_2 = metrics.roc_auc_score(y_test, predictions)
            auc_2_j.append(auc_2)
        #print('auc_2_j', auc_2_j)

    #****************************************************************LOF
    if 'LOF_pyod' in list_of_models:
        from pyod.models.lof import LOF
        import time
        predictions_4_j = []
        auc_4_j = []
        for j in range(len(k)):
            model_name_4 = 'LOF_pyod'
            # train LOF detector
            clf_name = 'LOF_pyod'
            clf = LOF(n_neighbors=k[j], contamination=contamination)
            #start = time.time()
            clf.fit(X_train)
            # get the prediction on the test data
            y_test_pred = clf.predict(X_test)  # outlier labels (0 or 1)
            y_test_scores_lof = clf.decision_function(X_test)  # outlier scores
            predictions = [round(value) for value in y_test_pred]
            for i in range(0, len(predictions)):
                if predictions[i] > 0.5:
                    predictions[i] = 1
                else:
                    predictions[i] = 0
            predictions_4_j.append(predictions)
            # #AUC score
            auc_4 = metrics.roc_auc_score(y_test, predictions)
            auc_4_j.append(auc_4)
        #print('auc_4_j', auc_4_j)

    #****************************************************************XBOS
    if 'XBOS' in list_of_models:
        # note: xbosmodel is not imported anywhere in this snippet
        import time
        #df_2_exist = False
        if number_of_unique is not None:
            df_2 = df.copy()
            # remove constant columns and columns with fewer than number_of_unique unique values
            cols = df_2.columns
            for i in range(len(cols)):
                if cols[i] != name_target:
                    m = df_2[cols[i]].value_counts()
                    m = np.array(m)
                    if len(m) < number_of_unique:
                        print(f'len cols {i}:', len(m), 'dropped')
                        column_name = cols[i]
                        df_2 = df_2.drop(columns=column_name)
            X_2, y_2 = df_2.loc[:, df_2.columns != name_target], df_2[name_target]
            X_train_2, X_test_2, y_train_2, y_test_2 = train_test_split(X_2, y_2, test_size=0.3, random_state=120, stratify=y_2)
            predictions_5_j = []
            auc_5_j = []
            for j in range(len(k)):
                model_name_5 = 'XBOS'
                # create XBOS model
                clf = xbosmodel.XBOS(n_clusters=k[j], max_iter=1)
                #start = time.time()
                # train XBOS model
                clf.fit(X_train_2)
                # predict model
                y_test_pred = clf.predict(X_test_2)
                y_test_scores_xbos = clf.fit_predict(X_test_2)
                predictions = [round(value) for value in y_test_pred]
                for i in range(0, len(predictions)):
                    if predictions[i] > 0.5:
                        predictions[i] = 1
                    else:
                        predictions[i] = 0
                predictions_5_j.append(predictions)
                # #AUC score
                auc_5 = metrics.roc_auc_score(y_test, predictions)
                auc_5_j.append(auc_5)
        else:
            predictions_5_j = []
            auc_5_j = []
            for j in range(len(k)):
                model_name_5 = 'XBOS'
                # create XBOS model
                clf = xbosmodel.XBOS(n_clusters=k[j], max_iter=1)
                start = time.time()
                # train XBOS model
                clf.fit(X_train)
                # predict model
                y_test_pred = clf.predict(X_test)
                y_test_scores_xbos = clf.fit_predict(X_test)
                predictions = [round(value) for value in y_test_pred]
                for i in range(0, len(predictions)):
                    if predictions[i] > 0.5:
                        predictions[i] = 1
                    else:
                        predictions[i] = 0
                predictions_5_j.append(predictions)
                # #AUC score
                auc_5 = metrics.roc_auc_score(y_test, predictions)
                auc_5_j.append(auc_5)
        #print('auc_5_j', auc_5_j)

    #**********************************************************************KNN_sklearn
    if 'KNN_sklearn' in list_of_models:
        from sklearn.neighbors import KNeighborsClassifier
        import time
        predictions_6_j = []
        auc_6_j = []
        for j in range(len(k)):
            model_name_6 = 'KNN_sklearn'
            # train kNN classifier (supervised: uses y_train)
            neigh = KNeighborsClassifier(n_neighbors=k[j])
            neigh.fit(X_train, y_train)
            # get the prediction on the test data
            y_test_pred_6 = neigh.predict(X_test)
            predictions = [round(value) for value in y_test_pred_6]
            for i in range(0, len(predictions)):
                if predictions[i] > 0.5:
                    predictions[i] = 1
                else:
                    predictions[i] = 0
            predictions_6_j.append(predictions)
            # #AUC score
            auc_6 = metrics.roc_auc_score(y_test, predictions)
            auc_6_j.append(auc_6)
        #print('auc_6_j', auc_6_j)

    #**********************************************************
    if 'LOF_sklearn' in list_of_models:
        from sklearn.neighbors import LocalOutlierFactor
        import time
        predictions_9_j = []
        auc_9_j = []
        for j in range(len(k)):
            model_name_9 = 'LOF_sklearn'
            # train LOF detector
            neigh = LocalOutlierFactor(n_neighbors=k[j], novelty=True, contamination=contamination)
            start = time.time()
            neigh.fit(X_train)
            # get the prediction on the test data
            y_test_pred_9 = neigh.predict(X_test)
            predictions = [round(value) for value in y_test_pred_9]
            for i in range(0, len(predictions)):
                if predictions[i] > 0.5:
                    predictions[i] = 1
                else:
                    predictions[i] = 0
            predictions_9_j.append(predictions)
            # #AUC score
            auc_9 = metrics.roc_auc_score(y_test, predictions)
            auc_9_j.append(auc_9)
        #print(auc_1_j)

    if 'HBOS_pyod' in list_of_models:
        plt.plot(k, auc_1_j, marker='.', label="HBOS_pyod")
    if 'KNN_pyod' in list_of_models:
        plt.plot(k, auc_2_j, marker='.', label="KNN_pyod")
    if 'LOF_pyod' in list_of_models:
        plt.plot(k, auc_4_j, marker='.', label="LOF_pyod")
    if 'XBOS' in list_of_models:
        plt.plot(k, auc_5_j, marker='.', label="XBOS")
    if 'KNN_sklearn' in list_of_models:
        plt.plot(k, auc_6_j, marker='.', label="KNN_sklearn")
    if 'LOF_sklearn' in list_of_models:
        plt.plot(k, auc_9_j, marker='.', label="LOF_sklearn")

    plt.title('ROC-Curve')
    plt.ylabel('AUC')
    plt.xlabel('K')
    #plt.axis([0, 15, 0., 1.0])
    #plt.xlim(k)
    plt.xticks(np.arange(0, 100.005, 20))
    plt.yticks(np.arange(0, 1.005, step=0.05))  # Set label locations
    plt.ylim(0.0, 1.01)
    #plt.legend(loc=0)
    plt.legend(bbox_to_anchor=(1.04, 1), loc="upper left")
    plt.show()
```
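Two details in `auc_plot` mix conventions. First, sklearn's `LocalOutlierFactor.predict` returns +1 for inliers and -1 for outliers, whereas pyod's `predict` returns 0 for inliers and 1 for outliers; the shared `round(value) > 0.5` step therefore marks sklearn's inliers as outliers, inverting the `LOF_sklearn` curve. Second, `KNeighborsClassifier` is a supervised classifier fitted on `y_train`, not an outlier detector, so `KNN_sklearn` is not comparable to the unsupervised kNN in the paper. The sketch below shows a small helper (the name `to_outlier_labels` is hypothetical) that maps both conventions to 1 = outlier.

```python
import numpy as np


def to_outlier_labels(pred, convention):
    """Map detector output to 1 = outlier, 0 = inlier (hypothetical helper)."""
    pred = np.asarray(pred)
    if convention == "sklearn":  # e.g. LocalOutlierFactor(novelty=True).predict: +1 inlier, -1 outlier
        return (pred == -1).astype(int)
    if convention == "pyod":     # pyod detectors' predict: 0 inlier, 1 outlier
        return pred.astype(int)
    raise ValueError(f"unknown convention: {convention!r}")


sk_pred = np.array([1, -1, 1, -1])
naive = [1 if round(v) > 0.5 else 0 for v in sk_pred]  # the thresholding used in auc_plot
print(naive)                                  # inliers end up labelled 1 (outlier)
print(to_outlier_labels(sk_pred, "sklearn"))  # outliers labelled 1
```

For score-based AUC, note the sign conventions as well: sklearn's `decision_function` / `score_samples` are lower for more abnormal points, so they would be negated (e.g. `-neigh.decision_function(X_test)`), while pyod's `decision_function` is already higher for more abnormal points.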

Loading the Breast Cancer Wisconsin dataset from sklearn:

```python
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt
from sklearn.metrics import accuracy_score
from sklearn.metrics import confusion_matrix
from sklearn.metrics import classification_report
import time
from sklearn import metrics
from sklearn.datasets import load_breast_cancer

Bw = load_breast_cancer(
    return_X_y=False,
    as_frame=True)
df = Bw.frame
name_target = 'target'

# change types of feature columns
#df['duration']=df['duration'].astype(float)
#df['src_bytes']=df['src_bytes'].astype(float)
#df['dst_bytes']=df['dst_bytes'].astype(float)

num_row, num_colmn = df.shape

# calculate number of classes
classes = df[name_target].unique()
num_class = len(classes)
print(df[name_target].value_counts())

# determine which class is normal (not an anomaly)
label = np.array(df[name_target])
a, b = np.unique(label, return_counts=True)
#print("a is:",a)
#print("b is:",b)
for i in range(len(b)):
    if b[i] == b.max():
        normal = a[i]
        #print('normal:', normal)
    elif b[i] == b.min():
        unnormal = a[i]
        #print('unnorm:' ,unnormal)

# list the anomaly classes
anomaly_class = []
for f in range(len(a)):
    if a[f] != normal:
        anomaly_class.append(a[f])

# convert dataset classes to 2 classes: normal and unnormal
label = np.where(label != normal, unnormal, label)
df[name_target] = label

# count column types: numerical or categorical
numeric = 0
categoric = 0
for i in range(df.shape[1]):
    df_col = df.iloc[:, i]
    if df_col.dtype == int and df.columns[i] != name_target:
        numeric += 1
    elif df_col.dtype == float and df.columns[i] != name_target:
        numeric += 1
    elif df.columns[i] != name_target:
        categoric += 1

# replace labels with 0 and 1
label = np.where(label == normal, 0, 1)
df[name_target] = label

# null check: if more than half of a column is null, that column is dropped;
# otherwise, if fewer than half are null, the nulls are replaced with the column mean
test = []
for i in range(df.shape[1]):
    if df.iloc[:, i].isnull().sum() > df.shape[0] // 2:
        test.append(i)
    elif df.iloc[:, i].isnull().sum() < df.shape[0] // 2 and df.iloc[:, i].isnull().sum() != 0:
        m = df.iloc[:, i].mean()
        df.iloc[:, i] = df.iloc[:, i].replace(to_replace=np.nan, value=m)
df = df.drop(columns=df.columns[test])

# calculate anomaly rate
b = df[name_target].value_counts()
Anomaly_rate = b[1] / (b[0] + b[1])
print('=============Anomaly_rate=================')
print(Anomaly_rate)
contamination = float("{:.4f}".format(Anomaly_rate))
print('=============contamination=================')
print(contamination)

# rename labels column
df = df.rename(columns={'labels': 'binary_target'})
#df.to_csv(f'/content/{dataset_name}.csv', index = False)
```
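For this particular dataset (two classes, no missing values), the preparation above reduces to a few lines. The sketch below is intended to produce the same `target` column and contamination value; it relies on sklearn encoding 0 = malignant and 1 = benign, with the majority class (benign) treated as normal, as in the code above. It also makes the anomaly rate explicit: roughly 37% of samples are labelled anomalous, which is high for unsupervised anomaly detection, so it may be worth checking in the Metadata tab mentioned above whether the paper used the same version of the dataset.

```python
from sklearn.datasets import load_breast_cancer

bunch = load_breast_cancer(as_frame=True)
df = bunch.frame.copy()

# sklearn: 0 = malignant, 1 = benign (see bunch.target_names); majority class = normal
normal = df["target"].value_counts().idxmax()
df["target"] = (df["target"] != normal).astype(int)  # 1 = anomaly, 0 = normal

contamination = round(df["target"].mean(), 4)  # fraction of anomalies
print(df["target"].value_counts())
print(contamination)
```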

I checked this post, but it didn't help me get this plot. So far, my output is the following:

Please note that this plot shows the ROC AUC over different values of K (the number of nearest neighbours; for HBOS, K is used as the number of histogram bins).
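A per-K curve like the one in the paper can be sketched by computing, for each K, a continuous kNN outlier score on the full dataset and then its ROC AUC. The sketch below uses the distance to the K-th nearest neighbour (excluding the point itself) as the score, similar in spirit to a kNN outlier detector but not claimed to match pyod's `KNN` or the paper's exact setup. Assumptions: unsupervised evaluation on all 569 samples with no train/test split, unscaled features, and malignant as the anomaly class.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import roc_auc_score
from sklearn.neighbors import NearestNeighbors


def knn_outlier_scores(X, k):
    """Distance to the k-th nearest neighbour, excluding the point itself (higher = more anomalous)."""
    nn = NearestNeighbors(n_neighbors=k).fit(X)
    dist, _ = nn.kneighbors()  # no query passed: each training point is not counted as its own neighbour
    return dist[:, -1]


X, y = load_breast_cancer(return_X_y=True)
y_anomaly = (y == 0).astype(int)  # assumption: malignant (sklearn label 0) = anomaly

ks = [5, 10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
aucs = [roc_auc_score(y_anomaly, knn_outlier_scores(X, k)) for k in ks]
for k, auc in zip(ks, aucs):
    print(k, round(auc, 4))
```

The resulting lists can be plotted exactly as in `auc_plot`, e.g. `plt.plot(ks, aucs, marker='.', label="kNN (score-based)")`.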

Update: I have provided a Google Colab notebook so that anyone interested can run the code and help troubleshoot faster.