Assume we want to select an image file using PyQt5, read the image using OpenCV, execute cleanPlate with the loaded image, and display the result in the PyQt5 UI.

Design a simple "Form" using Qt Designer.

For example, the form has two buttons and a label (the label is used for showing an image).

When the "Open" button is pressed, read the image into self.img using OpenCV:

```python
filename = QFileDialog.getOpenFileName(self, "Open files", "", "Only Support(*.jpg *.png *.xmp)")
self.img = cv2.imread(filename[0])
```
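QFileDialog.getOpenFileName returns a (path, selected_filter) tuple, which is why the snippet indexes filename[0]. When the user cancels, the path is an empty string, and cv2.imread then returns None instead of raising an error. A minimal sketch of a guard, assuming a hypothetical helper named path_from_dialog (not part of Qt or OpenCV):

```python
import os


def path_from_dialog(result):
    """Return the chosen file path, or None if nothing usable was selected.

    Hypothetical helper. `result` is the (path, selected_filter) tuple that
    QFileDialog.getOpenFileName returns; path is '' when the user cancels.
    """
    path, _selected_filter = result
    if not path or not os.path.isfile(path):
        return None
    return path
```

In open(), call cv2.imread(path) only when path is not None, and still check the returned array for None, because cv2.imread also returns None for files it cannot decode.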

When the "Clean Plate" button is pressed, execute the algorithm using OpenCV, with self.img as the input image.

Prepare the result for display using OpenCV (for example, draw the detected contour and resize the image to the label).

Convert the image from a NumPy array to a QPixmap, and update the label to show the image.
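The NumPy-to-QPixmap step only works if the bytes in the array match the QImage format. As a NumPy-only sketch (no Qt needed), this shows why a BGRA array can be passed to QImage.Format_ARGB32: Qt reads each pixel as a 32-bit 0xAARRGGBB value, and on a little-endian CPU those four bytes sit in memory as B, G, R, A, which is exactly OpenCV's BGRA order.

```python
import numpy as np

# One BGRA pixel as OpenCV stores it: B=1, G=2, R=3, A=255.
bgra = np.array([[[1, 2, 3, 255]]], dtype=np.uint8)

# Reinterpret the 4 bytes as one little-endian 32-bit integer,
# the way Format_ARGB32 reads a pixel on a little-endian machine.
argb = bgra.view("<u4")[0, 0, 0]
print(hex(argb))  # 0xff030201 -> A=0xff, R=0x03, G=0x02, B=0x01

# Unpack the channels from the 0xAARRGGBB value.
alpha = (int(argb) >> 24) & 0xFF
red = (int(argb) >> 16) & 0xFF
green = (int(argb) >> 8) & 0xFF
blue = int(argb) & 0xFF
```

If you prefer to skip the alpha channel, a common alternative is cv2.cvtColor(image, cv2.COLOR_BGR2RGB) together with QImage.Format_RGB888, still passing image.strides[0] as bytesPerLine.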

Example:

```python
# Get the label for showing the new image in the UI.
label = self.findChild(QLabel, 'newImageLabel')

# Draw the contour on a copy of self.img (store the result in a new NumPy array named "image").
image = cv2.drawContours(self.img.copy(), [location], 0, (0, 255, 0), 4)

# Resize the image to fit the dimensions of the label (this is just an example; we may also choose to modify the label size).
# cv2.resize takes the target size as (width, height).
image = cv2.resize(image, (label.width(), label.height()), interpolation=cv2.INTER_AREA)

# Convert the color format to BGRA: on little-endian machines, QImage.Format_ARGB32 stores each pixel in B, G, R, A byte order.
image = cv2.cvtColor(image, cv2.COLOR_BGR2BGRA)

# Convert the image from a NumPy array to a QPixmap.
qimage = QImage(image, image.shape[1], image.shape[0], image.strides[0], QImage.Format_ARGB32)
qpix = QPixmap(qimage)
label.setPixmap(qpix)
```
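Resizing directly to label.width() by label.height() stretches the image whenever its aspect ratio differs from the label's. A minimal sketch of an aspect-preserving alternative, assuming a hypothetical helper named fit_size (not an OpenCV or Qt function) that returns the largest size fitting inside the label:

```python
def fit_size(img_w, img_h, box_w, box_h):
    """Largest (width, height) that fits in box_w x box_h with the image's aspect ratio.

    Hypothetical helper; the result is ordered (width, height), matching
    what cv2.resize expects for its dsize argument.
    """
    scale = min(box_w / img_w, box_h / img_h)
    return max(1, round(img_w * scale)), max(1, round(img_h * scale))
```

Since NumPy's image.shape is (height, width, channels), the call would look like `image = cv2.resize(image, fit_size(image.shape[1], image.shape[0], label.width(), label.height()), interpolation=cv2.INTER_AREA)`.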

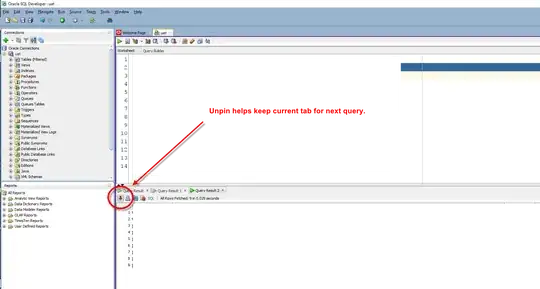

Example of the OpenCV result shown in the UI:

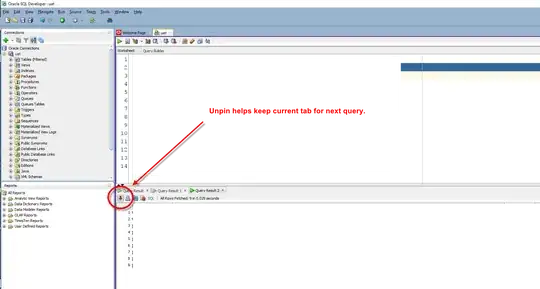

Sample Form in Qt Designer:

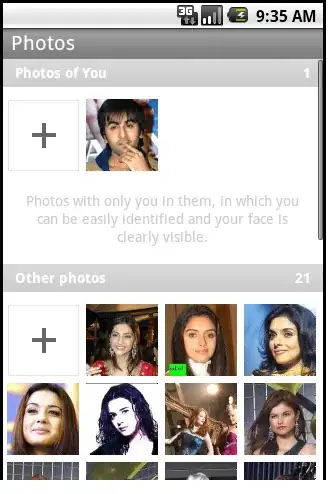

Sample input image (plate1.jpg):

Complete code sample:

```python
import sys

import cv2
import imutils
import numpy as np
import pytesseract as tess
from PyQt5 import uic
from PyQt5.QtGui import QImage, QPixmap
from PyQt5.QtWidgets import QApplication, QFileDialog, QLabel, QPushButton, QWidget

tess.pytesseract.tesseract_cmd = r'C:\Program Files\Tesseract-OCR\tesseract.exe'  # I am using Windows


class UI(QWidget):
    def __init__(self):
        super().__init__()
        uic.loadUi("GUI.ui", self)

        button = self.findChild(QPushButton, 'openPushButton')
        button.clicked.connect(self.open)

        button2 = self.findChild(QPushButton, 'cleanPlatePushButton')
        button2.clicked.connect(self.cleanPlate)

        self.img = None  # Initialize the image to None

    def open(self):
        filename = QFileDialog.getOpenFileName(self, "Open files", "", "Only Support(*.jpg *.png *.xmp)")
        if not filename[0]:
            return  # The user cancelled the dialog

        # Read the selected image from file into the NumPy array self.img (OpenCV applies BGR pixel format).
        # cv2.imread returns None if the file cannot be read.
        self.img = cv2.imread(filename[0])

    def cleanPlate(self):
        if self.img is None:
            print("Open an image first.")
            return

        # Use self.img as input to the "Clean Plate" algorithm
        gray_img = cv2.cvtColor(self.img, cv2.COLOR_BGR2GRAY)
        bfilter = cv2.bilateralFilter(gray_img, 11, 17, 17)  # Noise reduction
        edged = cv2.Canny(bfilter, 30, 200)  # Edge detection
        keypoints = cv2.findContours(edged.copy(), cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
        contours = imutils.grab_contours(keypoints)
        contours = sorted(contours, key=cv2.contourArea, reverse=True)[:10]

        # Take the largest contour that approximates to 4 corners as the plate
        location = None
        for contour in contours:
            approx = cv2.approxPolyDP(contour, 10, True)
            if len(approx) == 4:
                location = approx
                break

        if location is None:
            print("No plate-shaped contour found.")
            return

        # Masking the plate
        mask = np.zeros(gray_img.shape, np.uint8)
        cv2.drawContours(mask, [location], 0, 255, -1)
        (x, y) = np.where(mask == 255)  # x holds row indices, y holds column indices
        (x1, y1) = (np.min(x), np.min(y))
        (x2, y2) = (np.max(x), np.max(y))
        cropped_image = gray_img[x1:x2+1, y1:y2+1]

        text = tess.image_to_string(cropped_image, lang='eng')
        print("Number Detected Plate Text : ", text)

        # Get the label for showing the new image in the UI.
        label = self.findChild(QLabel, 'newImageLabel')

        # Draw the contour on a copy of self.img (store the result in a new NumPy array named "image").
        image = cv2.drawContours(self.img.copy(), [location], 0, (0, 255, 0), 4)

        # Resize the image to fit the dimensions of the label (this is just an example; we may also choose to modify the label size).
        image = cv2.resize(image, (label.width(), label.height()), interpolation=cv2.INTER_AREA)

        # Convert the color format to BGRA (Format_ARGB32 uses B, G, R, A byte order on little-endian machines).
        image = cv2.cvtColor(image, cv2.COLOR_BGR2BGRA)

        # https://stackoverflow.com/questions/34232632/convert-python-opencv-image-numpy-array-to-pyqt-qpixmap-image
        # Convert the image from a NumPy array to a QPixmap.
        qimage = QImage(image, image.shape[1], image.shape[0], image.strides[0], QImage.Format_ARGB32)
        qpix = QPixmap(qimage)
        label.setPixmap(qpix)


if __name__ == "__main__":
    app = QApplication(sys.argv)
    window = UI()
    window.show()
    sys.exit(app.exec_())
```
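In cleanPlate, `(x, y) = np.where(mask == 255)` returns row indices first and column indices second, so `x` is actually the vertical coordinate; the crop `gray_img[x1:x2+1, y1:y2+1]` is still correct because NumPy slices rows first. A minimal NumPy-only sketch of the same crop with clearer names and an empty-mask guard (crop_to_mask is a hypothetical helper name):

```python
import numpy as np


def crop_to_mask(image, mask):
    """Crop image to the bounding box of the nonzero pixels in mask.

    np.where returns (row_indices, column_indices). Returns None when the
    mask has no nonzero pixels.
    """
    rows, cols = np.where(mask > 0)
    if rows.size == 0:
        return None
    return image[rows.min():rows.max() + 1, cols.min():cols.max() + 1]
```

For a filled contour, cv2.boundingRect(location) returns the same box directly as (x, y, w, h), with x as the column and y as the row.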

Content of GUI.ui XML file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<ui version="4.0">
 <class>Form</class>
 <widget class="QWidget" name="Form">
  <property name="geometry">
   <rect>
    <x>0</x>
    <y>0</y>
    <width>450</width>
    <height>294</height>
   </rect>
  </property>
  <property name="windowTitle">
   <string>Form</string>
  </property>
  <widget class="QPushButton" name="openPushButton">
   <property name="geometry">
    <rect>
     <x>10</x>
     <y>10</y>
     <width>81</width>
     <height>31</height>
    </rect>
   </property>
   <property name="text">
    <string>Open</string>
   </property>
  </widget>
  <widget class="QPushButton" name="cleanPlatePushButton">
   <property name="geometry">
    <rect>
     <x>10</x>
     <y>50</y>
     <width>81</width>
     <height>31</height>
    </rect>
   </property>
   <property name="text">
    <string>Clean Plate</string>
   </property>
  </widget>
  <widget class="QLabel" name="newImageLabel">
   <property name="geometry">
    <rect>
     <x>110</x>
     <y>20</y>
     <width>320</width>
     <height>240</height>
    </rect>
   </property>
   <property name="text">
    <string/>
   </property>
  </widget>
 </widget>
 <resources/>
 <connections/>
</ui>
```
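`uic.loadUi("GUI.ui", self)` opens the file relative to the current working directory, so starting the script from a different folder fails with a file-not-found error. A minimal sketch that resolves the path relative to the script instead, assuming GUI.ui sits next to the script (resource_path is a hypothetical helper name):

```python
from pathlib import Path


def resource_path(name, script_file):
    """Resolve `name` relative to the directory that contains `script_file`.

    Hypothetical helper; pass __file__ as script_file from the main script.
    """
    return str(Path(script_file).resolve().parent / name)
```

In the constructor this would become `uic.loadUi(resource_path("GUI.ui", __file__), self)`.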