Overview

This answer provides probable explanations. In short, not all parallel workloads scale indefinitely. When many cores compete for the same shared resource (e.g. DRAM), using too many cores is often detrimental: past the point where there are enough cores to saturate the shared resource, adding more cores only increases the overheads.

More specifically, in your case, the L3 cache and the IMCs are likely the problem. Enabling Sub-NUMA Clustering and using non-temporal prefetch should slightly improve the performance and scalability of your benchmark. Still, there are other architectural hardware limitations that can prevent the benchmark from scaling well. The next section describes how Intel Skylake SP processors deal with memory accesses and how to find the bottlenecks.

Under the hood

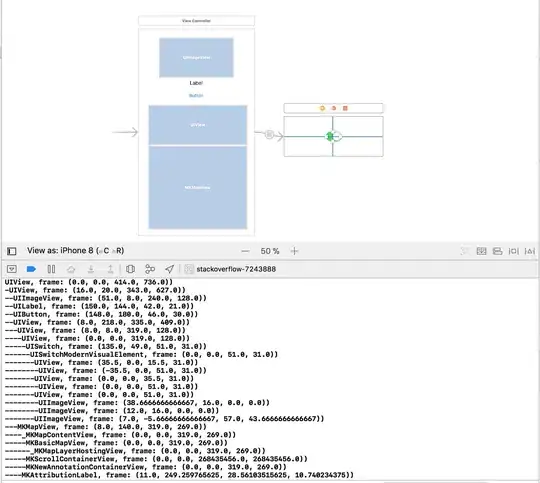

The layout of Intel Xeon Skylake SP processors is like the following in your case:

Source: Intel

There are two sockets connected by a UPI interconnect, and each processor is connected to its own set of DRAM. There are 2 Integrated Memory Controllers (IMCs) per processor, and each is connected to 3 DDR4 channels @ 2666 MT/s (8 bytes per transfer). This means the theoretical bandwidth is 2*2*3*2666e6*8 = 256 GB/s = 238 GiB/s.
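The arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the function name and parameters are mine, and the factor 8 assumes a 64-bit (8-byte) data bus per DDR4 channel.

```python
def peak_dram_bandwidth(sockets, imcs_per_socket, channels_per_imc,
                        transfers_per_second, bytes_per_transfer=8):
    """Theoretical peak DRAM bandwidth in bytes per second."""
    channels = sockets * imcs_per_socket * channels_per_imc
    return channels * transfers_per_second * bytes_per_transfer


# Values from the text: 2 sockets, 2 IMCs each, 3 channels per IMC, DDR4-2666.
peak = peak_dram_bandwidth(2, 2, 3, 2_666_000_000)
print(f"{peak / 1e9:.0f} GB/s = {peak / 2**30:.0f} GiB/s")  # 256 GB/s = 238 GiB/s
```

This is an upper bound that no real benchmark reaches; it is only useful as a reference point for the measured throughput.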

Assuming your benchmark is well designed and each processor accesses only its own NUMA node, I expect a very low UPI throughput and a very low number of remote NUMA pages. You can check this with hardware counters: Linux perf or VTune let you do so relatively easily.
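As a rough complement to the hardware counters, Linux also exposes per-node allocation statistics in `/sys/devices/system/node/nodeN/numastat`. The sketch below (helper names are mine) reads the `local_node` and `other_node` fields. Note that these are system-wide counters accumulated since boot, so you should compare the values before and after a run rather than read them in isolation, and they say nothing about UPI traffic itself.

```python
from pathlib import Path


def parse_numastat(text):
    """Parse the 'name value' lines of a per-node numastat file into a dict."""
    fields = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            fields[parts[0]] = int(parts[1])
    return fields


def remote_fraction(fields):
    """Share of this node's allocations made by tasks running on another node."""
    local = fields.get("local_node", 0)
    other = fields.get("other_node", 0)
    total = local + other
    return other / total if total else 0.0


def read_all_nodes(root="/sys/devices/system/node"):
    """Return {node_name: fields} for every NUMA node exposed under root."""
    root = Path(root)
    if not root.is_dir():
        return {}
    result = {}
    for stat_file in sorted(root.glob("node[0-9]*/numastat")):
        result[stat_file.parent.name] = parse_numastat(stat_file.read_text())
    return result


if __name__ == "__main__":
    for node, fields in read_all_nodes().items():
        print(f"{node}: remote fraction = {remote_fraction(fields):.3%}")
```

If the benchmark is NUMA-friendly, the `other_node` counters should barely move during a run.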

The L3 cache is split into slices. All physical addresses are distributed across the cache slices using a hash function (see here for more information). This method enables the processor to balance the throughput between all the L3 slices. It also enables the processor to balance the throughput between the two IMCs so that, in the end, the processor looks like an SMP architecture instead of a NUMA one. This was also used in Sandy Bridge and Xeon Phi processors (mainly to mitigate NUMA effects).

Hashing does not guarantee perfect balancing though (no hash function is perfect, especially the ones that are fast to compute), but it is often quite good in practice, especially for contiguous accesses. Poor balancing decreases the memory throughput due to partial stalls. This is one reason you cannot reach the theoretical bandwidth.
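To illustrate why a fast hash balances contiguous accesses well yet can still fail on some patterns, here is a toy model. The hash below is entirely made up for the example (a simple XOR-fold of the cache-line index) and is NOT Intel's undocumented function; the slice count of 8 is also an arbitrary choice.

```python
from collections import Counter

LINE = 64       # cache line size in bytes
N_SLICES = 8    # hypothetical slice count, chosen for illustration


def toy_slice_hash(addr, n_slices=N_SLICES):
    """Made-up hash: XOR-fold the cache-line index, then reduce to a slice id."""
    line = addr // LINE
    folded = line ^ (line >> 7) ^ (line >> 13)
    return folded % n_slices


def imbalance(addrs, n_slices=N_SLICES):
    """Load of the busiest slice divided by the ideal even share (1.0 = perfect)."""
    addrs = list(addrs)
    counts = Counter(toy_slice_hash(a, n_slices) for a in addrs)
    return max(counts.values()) / (len(addrs) / n_slices)


contiguous = range(0, 4096 * LINE, LINE)          # 4096 consecutive cache lines
strided = range(0, 64 * 129 * LINE, 129 * LINE)   # 64 lines, 129 lines apart

print(imbalance(contiguous))  # 1.0: every slice gets the same share
print(imbalance(strided))     # 8.0: every access lands on the same slice
```

The stride of 129 lines was picked on purpose so that the folded bits cancel out in this toy hash. A real hash is more robust, but the same kind of pathological pattern can exist in principle.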

With a good hash function, the balancing should be independent of the number of cores used. If the hash function is not good enough, one IMC can be more saturated than the other, with the imbalance oscillating over time. The bad news is that the hash function is undocumented and checking this behaviour is complex: AFAIK you can get hardware counters for each IMC's throughput, but they have a limited, fairly coarse granularity. On my Skylake machine the hardware counters are named uncore_imc/data_reads/ and uncore_imc/data_writes/, but on your platform you certainly have 4 counters for that (one for each IMC).

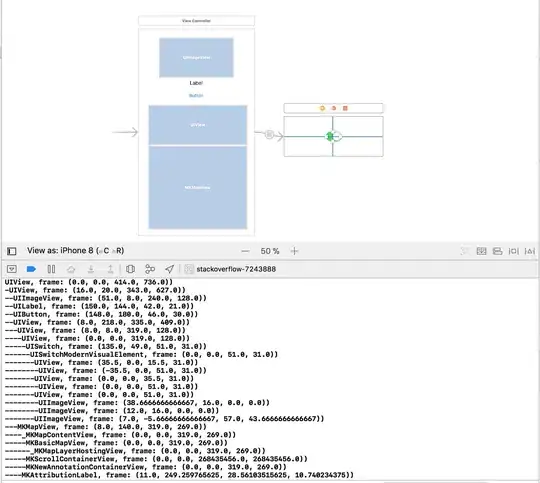

Fortunately, Intel provides a feature called Sub-NUMA Clustering (SNC) on Xeon SP processors like your. The idea is to split the processor in two NUMA nodes that have their own dedicated IMC. This solve the balancing issue due to the hash function and so result in faster memory operations as long as your application is NUMA-friendly. Otherwise, it can actually be significantly slower due to NUMA effects. In the worst case, the pages of an application can all be mapped to the same NUMA node resulting in only half the bandwidth being usable. Since your benchmark is supposed to be NUMA-friendly, SNC should be more efficient.

Source: Intel

Furthermore, having more cores accessing the L3 in parallel can cause more early evictions of prefetched cache lines, which then need to be fetched again later when the core actually needs them (with an additional DRAM latency to pay). This effect is not as unusual as it seems. Indeed, due to the high latency of DDR4 DRAM, hardware prefetching units have to prefetch data a long time in advance to reduce the impact of the latency. They also need to perform a lot of requests concurrently. This is generally not a problem with sequential accesses, but more cores cause accesses to look more random from the point of view of the caches and IMCs. The thing is, DRAM is designed so that contiguous accesses are faster than random ones (multiple contiguous cache lines should be loaded consecutively to fully saturate the bandwidth).

You can analyse the value of the LLC-load-misses hardware counter to check whether more data is re-fetched with more threads (I see such an effect on my Skylake-based PC with only 6 cores, but it is not strong enough to cause any visible impact on the final throughput).

To mitigate this problem, you can use software non-temporal prefetch (prefetchnta) to request the processor to load data directly into the line fill buffer instead of the L3 cache, resulting in less pollution (here is a related answer). This may be slower with fewer cores due to lower concurrency, but it should be a bit faster with a lot of cores. Note that this does not solve the problem of fetched addresses looking more random from the IMCs' point of view, and there is not much to do about that.
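To get a feel for why the prefetchers need so many requests in flight, Little's law (concurrency = throughput x latency) gives a rough estimate. The sketch below uses the per-socket peak bandwidth derived from the figures above; the 80 ns latency is an assumed, illustrative value and not a measurement of your machine.

```python
import math


def lines_in_flight(bandwidth_bytes_per_s, latency_ns, line_bytes=64):
    """Little's law: concurrency = throughput x latency, counted in cache lines."""
    bytes_in_flight = bandwidth_bytes_per_s * latency_ns / 1e9
    return math.ceil(bytes_in_flight / line_bytes)


# Per-socket peak: 2 IMCs x 3 channels x 2666e6 transfers/s x 8 bytes.
per_socket_peak = 2 * 3 * 2_666_000_000 * 8

# 80 ns is an ASSUMED DRAM latency, used only for illustration.
needed = lines_in_flight(per_socket_peak, latency_ns=80)
print(needed)  # 160 cache lines in flight to saturate one socket
```

With these assumptions, on the order of a hundred or more cache lines must be outstanding per socket at any time, which is why prefetched lines sit in the caches long enough to be evicted by other cores.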

The low-level architecture of DRAM and caches is very complex in practice. More information about memory can be found in the following links: