I'm writing signal source for digital signal processing and found strange behaviour. Here is the code:

float complex *output = malloc(sizeof(float complex) * 48000);

FILE *f = fopen("test_signal.cf32", "wb");

int64_t freq = -3355;

float phase_increment = 2 * (float) M_PI * (float) freq / 48000;

float phase = 0.0F;

for (int i = 0; i < 150 * 5; i++) {

for (int j = 0; j < 9600; j++) {

output[j] = cosf(phase) + sinf(phase) * I;

phase += phase_increment;

}

fwrite(output, sizeof(float complex), 9600, f);

}

fclose(f);

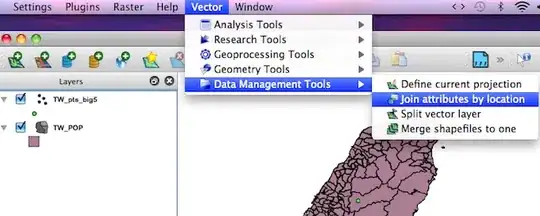

It will create a file with complex signal shifted by -3355hz from the centre. So when I run FFT over this file I expect a signal at -3355hz. With some minor frequency distribution around that number due to quantisation. But in reality I'm getting the following:

There is a quite significant frequency jump (~2000hz) around 50 seconds.

Anyone knows why?

My experiments showed:

- using double for the

phasehelps - doing argument reduction from -2pi to 2pi helps

- Apple M1 and raspberry pi have the same jump

- there seems to be no overflow in the

phaseargument - Fun reading https://randomascii.wordpress.com/2014/10/09/intel-underestimates-error-bounds-by-1-3-quintillion/ but unlikely causing sudden jump of a frequency