The background: I am writing a terrain visualiser and I am trying to decouple the rendering from the terrain generation.

At the moment, the generator returns an array of triangles and colours, and these are bound in OpenGL by the rendering code (using OpenTK).

So far I have a very simple shader which handles the rotation of the sphere.

The problem: I would like the application to be able to display the results either as a 3D object or as a 2D projection of the sphere (let's assume Mercator for simplicity). I had thought this would be simple: I would just compile an alternative shader for the 2D case. So I have a vertex shader which almost works:

    precision highp float;

    uniform mat4 projection_matrix;
    uniform mat4 modelview_matrix;

    in vec3 in_position;
    in vec3 in_normal;
    in vec3 base_colour;

    out vec3 normal;
    out vec3 colour2;

    // Map a Cartesian point on the sphere to (scaled longitude, scaled polar angle, depth).
    vec3 fromSphere(in vec3 cart)
    {
        vec3 spherical;
        // Longitude in [-pi, pi], scaled down to fit the viewport.
        spherical.x = atan(cart.x, cart.y) / 6.0;
        float xy = sqrt(cart.x * cart.x + cart.y * cart.y);
        // Angle from the +z axis in [0, pi], scaled down.
        spherical.y = atan(xy, cart.z) / 4.0;
        // Hack: curve the surface in depth so that stray edges end up behind the image.
        spherical.z = -1.0 + (spherical.x * spherical.x) * 0.1;
        return spherical;
    }

    void main(void)
    {
        normal = (modelview_matrix * vec4(in_normal, 0.0)).xyz;
        colour2 = base_colour;
        //gl_Position = projection_matrix * modelview_matrix * vec4(fromSphere(in_position), 1.0);
        gl_Position = vec4(fromSphere(in_position), 1.0);
    }
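For reference, the shader above scales the two angles linearly, which is closer to an equirectangular mapping than to Mercator. A minimal sketch of what a true Mercator version of the helper might look like follows. The name `toMercator`, the constants, and the latitude clamp at roughly 85.05° (where the Mercator y coordinate reaches π, giving a square map) are assumptions for illustration, not part of the original shader.

```glsl
const float PI = 3.14159265358979;
// Assumed cut-off latitude (about 85.05 degrees) at which Mercator y equals PI.
const float MAX_LAT = 1.4844222;

// Hypothetical replacement for fromSphere(): returns x and y in [-1, 1].
vec3 toMercator(in vec3 cart)
{
    vec3 p = normalize(cart);
    // Same longitude convention as the original shader: atan(x, y).
    float lon = atan(p.x, p.y);
    // Latitude in [-pi/2, pi/2], clamped because Mercator diverges at the poles.
    float lat = clamp(asin(clamp(p.z, -1.0, 1.0)), -MAX_LAT, MAX_LAT);
    float y = log(tan(0.25 * PI + 0.5 * lat));
    return vec3(lon / PI, y / PI, 0.0);
}
```

The clamp only hides the polar singularity; it does not fix triangles that cover a pole or cross the cut meridian.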

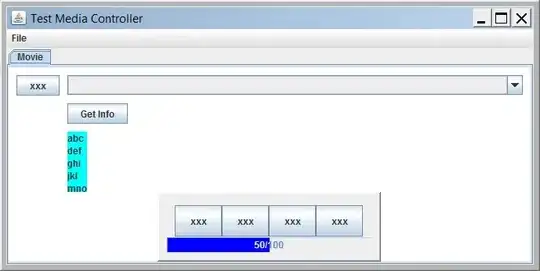

However, it has a couple of obvious issues (see images below):

- A saw-tooth pattern appears wherever a triangle crosses the cut meridian

- The polar regions are not well defined

3D case (Typical shader):

2D case (above shader)

Both of these seem to reduce to the statement "A triangle in 3-dimensional space is not always even a single polygon on the projection". (... and this is before any discussion about whether great circle segments from the sphere are expected to be lines after projection ...).

(The `-1.0 + 0.1 * x^2` term in z is already a hack to make it a little better: it ensures the projection is not flat, so that any stray edges, i.e. ones that straddle the cut meridian, sit safely behind the image.)
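One way the seam case could stay on the GPU is a geometry shader that draws any triangle straddling the cut meridian twice, once shifted to each edge of the map, and lets clipping discard the excess. The following is only a sketch under assumptions: the vertex shader is assumed to pass per-vertex longitude and latitude in radians as `lon_lat` and the colour as `vs_colour` (both hypothetical names), a plain longitude/latitude mapping stands in for Mercator, and no triangle is assumed to span more than 180° of longitude.

```glsl
#version 150 core

layout(triangles) in;
layout(triangle_strip, max_vertices = 6) out;

in vec2 lon_lat[];    // assumed vertex shader output: (longitude, latitude) in radians
in vec3 vs_colour[];  // assumed vertex shader output: per-vertex colour

out vec3 colour2;

const float PI = 3.14159265358979;

// Emit one copy of the triangle with every longitude offset by `shift`.
void emitCopy(vec3 lon, float shift)
{
    for (int i = 0; i < 3; ++i) {
        colour2 = vs_colour[i];
        gl_Position = vec4((lon[i] + shift) / PI,
                           lon_lat[i].y / (0.5 * PI), 0.0, 1.0);
        EmitVertex();
    }
    EndPrimitive();
}

void main()
{
    vec3 lon = vec3(lon_lat[0].x, lon_lat[1].x, lon_lat[2].x);
    float lonMin = min(lon.x, min(lon.y, lon.z));
    float lonMax = max(lon.x, max(lon.y, lon.z));

    if (lonMax - lonMin > PI) {
        // The triangle straddles the cut: unwrap the negative longitudes so
        // it becomes contiguous just past the right-hand edge of the map...
        lon += 2.0 * PI * vec3(lessThan(lon, vec3(0.0)));
        emitCopy(lon, 0.0);
        // ...and draw a second copy poking in from the left-hand edge.
        emitCopy(lon, -2.0 * PI);
    } else {
        emitCopy(lon, 0.0);
    }
}
```

This does nothing for a triangle that contains a pole; that case would still need extra geometry or application-side handling.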

The question: Is what I want to achieve possible with a vertex shader / fragment shader approach? If not, what's the alternative? I think I can rewrite the application side to pre-transform the points (and cull or add extra polygons where needed), but it will need to know where the cut line for the projection is, and I feel that this information is analogous to `modelview_matrix` in the 3D case, which means taking this logic out of the shader seems a step backwards.
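To illustrate the analogy, the cut line can be driven by the very rotation that is already passed in as `modelview_matrix`: rotate the point on the sphere first, then convert to longitude/latitude, so the cut always sits at longitude ±π in the rotated frame. This is a sketch, not a fix for the issues above. It assumes that the upper 3x3 part of `modelview_matrix` is a pure rotation, uses `asin` for latitude instead of the original `atan` form, scales the angles linearly rather than applying Mercator, and the `#version` line is a guess.

```glsl
#version 150 core

uniform mat4 modelview_matrix;  // assumed: upper 3x3 part is a pure rotation

in vec3 in_position;
in vec3 in_normal;
in vec3 base_colour;

out vec3 normal;
out vec3 colour2;

const float PI = 3.14159265358979;

void main(void)
{
    // Rotate the sphere before projecting; mat3() drops any translation.
    vec3 p = normalize(mat3(modelview_matrix) * in_position);

    float lon = atan(p.x, p.y);               // [-pi, pi], same convention as above
    float lat = asin(clamp(p.z, -1.0, 1.0));  // [-pi/2, pi/2]

    normal = (modelview_matrix * vec4(in_normal, 0.0)).xyz;
    colour2 = base_colour;
    gl_Position = vec4(lon / PI, lat / (0.5 * PI), 0.0, 1.0);
}
```

With this, rotating the globe in the application moves both the map centre and the cut meridian without the application ever computing the cut itself.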

Thanks!