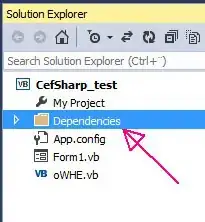

I'm having a terrible time figuring out how to better handle the seams between 3D tile objects in my game engine. You only see them when the camera is tilted down far enough, like this... I do not believe it is a texture problem or a texture rendering problem (but I could be wrong).

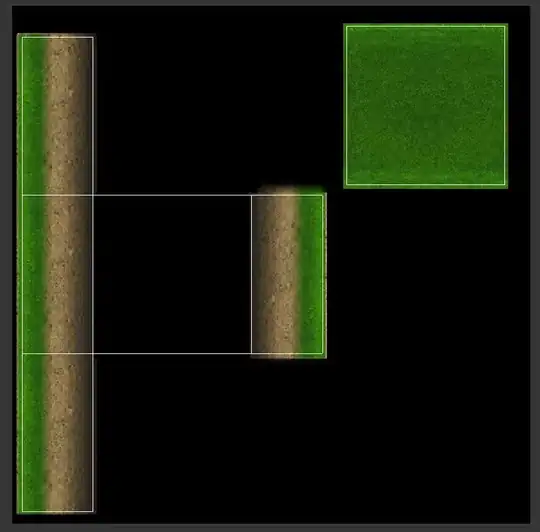

I've included two screenshots: the first demonstrates the problem, while the second shows the UV mapping I'm using for the tiles in Blender. I'm leaving room in the UVs for overlap, so that if the texture needs to overdraw at smaller mip levels, I should still be fine. I am loading textures with the following texture parameters:

    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
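
To make the mip-map padding reasoning concrete, here is a rough sketch of the arithmetic in C. It assumes a standard mip chain in which each level halves the texture's width and height, so a gutter that is `gutter0` texels wide at level 0 is `gutter0 / 2^level` texels wide at a given level. Both function names are hypothetical, and the "narrower than one texel" threshold is only a rule of thumb for when filtering may start to sample across the gutter.

```c
#include <assert.h>

/* Hypothetical helper: width, measured in texels of mip `level`, of a gutter
 * that is `gutter0` texels wide at level 0. Assumes each level halves the
 * texture dimensions. */
double gutter_texels_at_level(double gutter0, int level)
{
    assert(level >= 0 && level < 31);
    return gutter0 / (double)(1u << level);
}

/* Returns the smallest mip level (0..max_level) at which the gutter is
 * narrower than one texel, or -1 if it stays at least one texel wide. */
int first_bleeding_level(double gutter0, int max_level)
{
    for (int level = 0; level <= max_level; ++level) {
        if (gutter_texels_at_level(gutter0, level) < 1.0)
            return level;
    }
    return -1;
}
```

For example, an 8-texel gutter shrinks to 4, 2, and 1 texels at levels 1 to 3, and drops below one texel at level 4.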

It appears to me that the sides of the 3D tiles are being drawn slightly, and the artifact is especially noticeable because of the angle of the (directional) lighting from this viewpoint.

Are there any tricks or things I can check to eliminate this effect? I am rendering in "layers", and within each layer objects are drawn in order of camera distance (furthest away first). All of these objects are in the same layer. Any ideas would be greatly appreciated!
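
For reference, the draw ordering described above could be sketched roughly like this in C. The `DrawItem` struct, its fields, and both function names are hypothetical and not taken from the engine, and the sketch assumes layers are drawn in ascending numeric order.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical per-object record; not the engine's actual type. */
typedef struct {
    int   layer;            /* render layer (assumed drawn in ascending order) */
    float camera_distance;  /* distance from the camera to the object */
} DrawItem;

/* qsort comparator: ascending layer, then descending camera distance,
 * so the furthest object within a layer is drawn first. */
int compare_draw_items(const void *a, const void *b)
{
    const DrawItem *x = (const DrawItem *)a;
    const DrawItem *y = (const DrawItem *)b;
    if (x->layer != y->layer)
        return (x->layer > y->layer) - (x->layer < y->layer);
    return (x->camera_distance < y->camera_distance)
         - (x->camera_distance > y->camera_distance);
}

/* Sorts the draw list in place into the order described above. */
void sort_draw_items(DrawItem *items, size_t count)
{
    assert(items != NULL || count == 0);
    if (count > 1)
        qsort(items, count, sizeof items[0], compare_draw_items);
}
```

Calling `sort_draw_items(items, count)` once per frame before issuing draw calls would give the back-to-front order within each layer.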

If useful, this is a project for iPhone/iPad using OpenGL ES 2.0. I'm happy to provide any code snippets - just let me know what might be a good place to start.

UPDATE WITH VERTEX/PIXEL SHADER & MODEL VERTICES

Presently, I am using PowerVR's POD format to store model data exported from Blender (via Collada, then PowerVR's Collada2Pod converter). Here are the GL_SHORT vertex coordinates (model space):

     64 -64  32
     64  64  32
    -64  64  32
    -64 -64  32
     64 -64 -32
    -64 -64 -32
    -64  64 -32
     64  64 -32
     64 -64  32
     64 -64 -32
     64  64 -32
     64  64  32
     64  64  32
     64  64 -32
    -64  64 -32
    -64  64  32
    -64  64  32
    -64  64 -32
    -64 -64 -32
    -64 -64  32
     64 -64 -32
     64 -64  32
    -64 -64  32
    -64 -64 -32
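
A quick way to sanity-check these coordinates is sketched below in C. It verifies that every vertex sits exactly on a corner of a 128 x 128 x 64 box, and that two neighbouring tiles share a face plane. The function names are hypothetical, and the 128-unit grid spacing between tile origins is an assumption for illustration, not something taken from the engine.

```c
#include <assert.h>
#include <stdlib.h>

/* The 24 model-space vertices listed above, as x, y, z triples. */
const short tile_vertices[24 * 3] = {
     64, -64,  32,   64,  64,  32,  -64,  64,  32,  -64, -64,  32,
     64, -64, -32,  -64, -64, -32,  -64,  64, -32,   64,  64, -32,
     64, -64,  32,   64, -64, -32,   64,  64, -32,   64,  64,  32,
     64,  64,  32,   64,  64, -32,  -64,  64, -32,  -64,  64,  32,
    -64,  64,  32,  -64,  64, -32,  -64, -64, -32,  -64, -64,  32,
     64, -64, -32,   64, -64,  32,  -64, -64,  32,  -64, -64, -32
};

/* Returns 1 if every vertex has |x| == half_x, |y| == half_y, |z| == half_z,
 * i.e. it lies exactly on a corner of the box; returns 0 otherwise. */
int vertices_on_box_corners(const short *xyz, size_t vertex_count,
                            short half_x, short half_y, short half_z)
{
    assert(xyz != NULL);
    for (size_t i = 0; i < vertex_count; ++i) {
        if (abs(xyz[3 * i + 0]) != half_x ||
            abs(xyz[3 * i + 1]) != half_y ||
            abs(xyz[3 * i + 2]) != half_z)
            return 0;
    }
    return 1;
}

/* Returns 1 if tile A's positive face and tile B's negative face lie on the
 * same plane along one axis, given integer tile origins on that axis. */
int faces_coincide(int origin_a, int origin_b, short half_extent)
{
    return origin_a + half_extent == origin_b - half_extent;
}
```

This only checks the model-space data and the assumed grid placement; it says nothing about what happens to the positions after the per-tile transform in the vertex shader.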

So I would expect everything to be perfectly flush. Here are the shaders:

    attribute highp vec3 inVertex;
    attribute highp vec3 inNormal;
    attribute highp vec2 inTexCoord;

    uniform highp mat4 ProjectionMatrix;
    uniform highp mat4 ModelviewMatrix;
    uniform highp mat3 ModelviewITMatrix;
    uniform highp vec3 LightColor;
    uniform highp vec3 LightPosition1;
    uniform highp float LightStrength1;
    uniform highp float LightStrength2;
    uniform highp vec3 LightPosition2;
    uniform highp float Shininess;

    varying mediump vec2 TexCoord;
    varying lowp vec3 DiffuseLight;
    varying lowp vec3 SpecularLight;

    void main()
    {
        // transform normal to eye space
        highp vec3 normal = normalize(ModelviewITMatrix * inNormal);

        // transform vertex position to eye space
        highp vec3 ecPosition = vec3(ModelviewMatrix * vec4(inVertex, 1.0));

        // initialize light intensity varyings
        DiffuseLight = vec3(0.0);
        SpecularLight = vec3(0.0);

        // Run the directional light
        PointLight(true, normal, LightPosition1, ecPosition, LightStrength1);
        PointLight(true, normal, LightPosition2, ecPosition, LightStrength2);

        // Transform position
        gl_Position = ProjectionMatrix * ModelviewMatrix * vec4(inVertex, 1.0);

        // Pass through texcoords and filter
        TexCoord = inTexCoord;
    }