I have the following problem.

I need to build a very large number of definitions (*), such as

f[{1,0,0,0}] = 1

f[{0,1,0,0}] = 2

f[{0,0,1,0}] = 3

f[{0,0,0,1}] = 2

...

f[{2,3,1,2}] = 4

...

f[{n1,n2,n3,n4}] = some integer

...
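Definitions like these do not have to be typed by hand. A minimal sketch, assuming the configuration/value pairs are already available as a list of rules (the name `data` is hypothetical), generates them with `Scan`:

```mathematica
(* Hypothetical input: configuration -> value pairs, taken from the examples above *)
data = {{1, 0, 0, 0} -> 1, {0, 1, 0, 0} -> 2, {0, 0, 1, 0} -> 3,
   {0, 0, 0, 1} -> 2, {2, 3, 1, 2} -> 4};

(* Create one definition f[config] = value per rule *)
Scan[(f[First[#]] = Last[#]) &, data];

f[{2, 3, 1, 2}]          (* 4 *)
Length[DownValues[f]]    (* 5, assuming f had no earlier definitions *)
```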

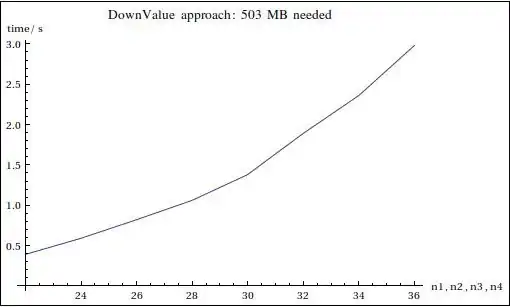

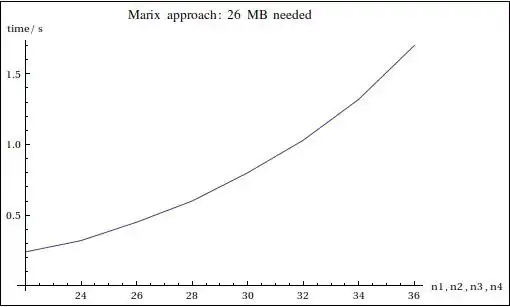

This is just an example; the argument list need not have length 4 but can have any length. I noticed that the time needed to look up a single value appears to grow exponentially as the length of the argument list increases. Perhaps this is not so surprising, since the number of definitions Mathematica has to store can, in principle, grow combinatorially.

However, I expected Mathematica to be smart enough that extracting a single value would take constant time. Apparently it does not.
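To check how the lookup time actually scales, one could time the lookups directly. The sketch below is a hypothetical benchmark (the names `lookupTime`, `len`, `n` and the value range 0 to 5 are assumptions, not part of the original setup): it fills a fresh local symbol with definitions keyed by random integer lists of length `len` and reports the average time per lookup.

```mathematica
(* Hypothetical benchmark: average time for one lookup g[key] when g holds
   up to n definitions keyed by random integer lists of length len *)
lookupTime[len_Integer, n_Integer] := Module[{g, keys},
  keys = DeleteDuplicates[RandomInteger[{0, 5}, {n, len}]];
  (* one definition per distinct key, with an arbitrary integer value *)
  Scan[(g[#] = RandomInteger[{1, 10}]) &, keys];
  (* time all lookups once and divide by the number of keys *)
  First[AbsoluteTiming[Do[g[k], {k, keys}]]]/Length[keys]
]

Table[{len, lookupTime[len, 10^4]}, {len, {2, 4, 8, 16}}]
```

Because the number of distinct keys differs between lengths (only 36 exist for length 2), the per-lookup times are what should be compared; varying `n` as well shows whether the cost grows with the key length, with the number of stored definitions, or both.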

Is there any way to speed up the lookups? The slowdown probably has to do with how Mathematica handles symbol definition lookups internally. Does it traverse the list of definitions until it finds a match? It seems that it does.
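One alternative worth timing, assuming Mathematica 10 or later, is to keep the values in an `Association` keyed by the configuration lists instead of as definitions of `f`. This is a sketch of a swapped-in data structure, not a claim about how `f` is stored internally; the name `table` is hypothetical.

```mathematica
(* Hypothetical alternative (needs Mathematica 10+): store the values in an
   Association instead of as DownValues of f *)
table = <|{1, 0, 0, 0} -> 1, {0, 1, 0, 0} -> 2, {0, 0, 1, 0} -> 3,
   {0, 0, 0, 1} -> 2, {2, 3, 1, 2} -> 4|>;

(* Key[...] makes Lookup treat the list as one key, not as a list of keys *)
Lookup[table, Key[{2, 3, 1, 2}], 0]   (* 4 *)
Lookup[table, Key[{5, 5, 5, 5}], 0]   (* 0, the default for an unseen configuration *)
```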

All suggestions are highly appreciated.

Best regards,
Zoran

(*) I am working on stochastic simulation software that generates all configurations of a system and needs to store how many times each configuration occurred. A list {n1, n2, ..., nT} describes one particular configuration of the system: there are n1 particles of type 1, n2 particles of type 2, ..., and nT particles of type T. There can be exponentially many such configurations.
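For the counting use case described in the footnote, a minimal sketch (again assuming Mathematica 10+; `counts` and `recordConfiguration` are hypothetical names) keeps a single `Association` and increments the entry for a configuration each time it occurs. If all configurations are first collected in a list, the built-in `Counts` builds the same kind of association in one step.

```mathematica
(* Hypothetical counter: configuration list -> number of occurrences *)
counts = <||>;

(* Increment the count for one configuration, starting from 0 if unseen *)
recordConfiguration[config_List] :=
  AssociateTo[counts, config -> Lookup[counts, Key[config], 0] + 1];

recordConfiguration[{1, 0, 0, 0}];
recordConfiguration[{2, 3, 1, 2}];
recordConfiguration[{1, 0, 0, 0}];

Lookup[counts, Key[{1, 0, 0, 0}], 0]   (* 2 *)
Lookup[counts, Key[{0, 0, 0, 1}], 0]   (* 0, never recorded *)

(* Batch alternative: count a whole list of configurations at once *)
Counts[{{1, 0, 0, 0}, {2, 3, 1, 2}, {1, 0, 0, 0}}]
(* <|{1, 0, 0, 0} -> 2, {2, 3, 1, 2} -> 1|> *)
```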