I am trying to integrate orbits of stars using a Velocity-Verlet integrator inside a potential. However, my current algorithm takes around 25 seconds per orbit, I aim to integrate around 1000 orbits which would take around 7 hours. Therefore I hope someone can help me to optimize the algorithm:

The Velocity-Verlet integrator is defined as (see verlet_integration() for an implementation):

I want to integrate orbits using a Simple Logarithmic Potential (SLP) defined as:

The value v_0 is always equal to 1 and the value q can either be 0.7 or 0.9 (see SLP()).

By using this potential, the acceleration can be calculated which is necessary for this integrator (see apply_forces())

After this I choose x = 0 as a starting value for all orbits, and the energy E = 0 as well. Using that starting values for vx can be calculated (see calc_vx())

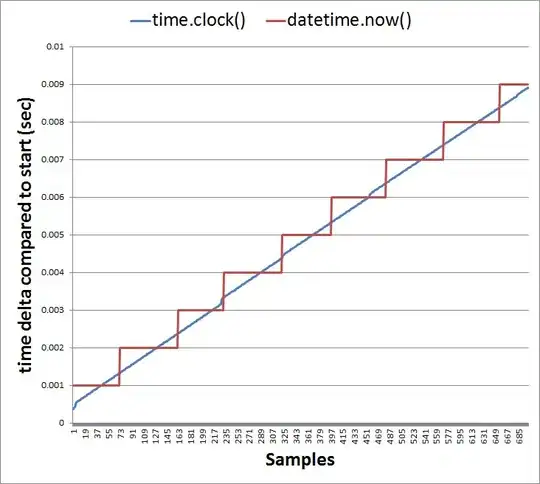

To have orbits that are accurate enough, the time step needs to be 1E-4 or smaller. I need to analyze these orbits later, for that they need to be sufficiently long enough, thus I integrate between t=0 and t=200.

I need to calculate values for the entire allowed phase space of (y, vy). The allowed phase space is where the method calc_vx() doesn't result in a square root of a negative number. Thus for that a large number of orbits need to be integrated. I hope to be able to calculate at least 1000 values. But 10,000 would definitively be more ideal. Although maybe that's asking for too much.

If you have any ideas for performance improvements, please let me know. Using another language is not an option, so please don't suggest that.

An example of how these orbits look can be seen here:

Everything necessary to run the code should be found below, as well as starting values to run it.

UPDATE: I have implemented the suggestions by mivkov, this reduced the time to 9 seconds, 3 seconds faster, but it's still quite slow. Any other suggestions are still welcome

import numpy as np

def calc_vx(y, vy, q):

"""

Calculate starting value of x velocity

"""

vx2 = -np.log((y / q) ** 2) - vy ** 2

return np.sqrt(vx2)

def apply_forces(x, y, q):

"""

Apply forces to determine the accelerations

"""

Fx = -x / (y ** 2 / q ** 2 + x ** 2)

Fy = -y / (q ** 2 * x ** 2 + y ** 2)

return Fx, Fy

def verlet_integration(start, dt, steps, q):

# initialise an array and set the first value to the starting value

vals = np.zeros((steps, *np.shape(start)))

vals[0] = start

# run through all elements and apply the integrator to each value

for i in range(steps - 1):

x_vec, v_vec, a_vec = vals[i]

new_x_vec = x_vec + dt * (v_vec + 0.5 * a_vec * dt)

new_a_vec = apply_forces(*new_x_vec, q)

new_v_vec = v_vec + 0.5 * (a_vec + new_a_vec) * dt

vals[i + 1] = new_x_vec, new_v_vec, new_a_vec

# I return vals.T so i can use the following to get arrays for the position, velocity and acceleration

# ((x, vx, ax), (y, vy, ay)) = verlet_integration_vec( ... )

return vals.T

def integration(y, vy, dt, t0, t1, q):

# calculate the starting values

vx = calc_vx(y, vy, q)

ax, ay = apply_forces(0, y, q)

start = [(0, y), (vx, vy), (ax, ay)]

steps = round((t1 - t0) / dt) # bereken het aantal benodigde stappen

e = verlet_integration(start, dt, steps, q) # integreer

return e

((x, vx, ax), (y, vy, ay)) = integration(0.1, 0.2, 1E-4, 0, 100, 0.7)