I am downloading CMIP6 data files using this code:

#install.packages("epwshiftr")

library("epwshiftr")

#this indexes all the information about the models

test = init_cmip6_index(activity = "CMIP",

variable = 'pr',

frequency = 'day',

experiment = c("historical"),

source = NULL,

years= c(1981,1991,2001,2014),

variant = "r1i1p1f1", replica = FALSE,

latest = TRUE,

limit = 10000L,data_node = NULL,

resolution = NULL

)

# Download the GCM files

ntest=nrow(test)

for(i in 1:ntest){

url<-test$file_url[i]

destfile<-paste("D:/CMIP6 data/Data/",test$source_id[i],"-",test$experiment_id[i],"-",test$member_id[i],"-",test$variable_id[i],"-",test$datetime_start[i],"to",test$datetime_end[i],".nc",sep="")

download.file(url,destfile)

}

The files are very large, so the full download takes a few hours. I am also encountering some time-outs, so I may need to run this code several times to finish downloading all the files.

Is there a way to code it such that it checks if the specific file name already exists, and if it does, it will skip that file and move on to the next one?
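As a rough sketch of the kind of check described here, base R's `file.exists()` can be tested before each download. The helper name `download_if_missing` and its `downloader` argument are hypothetical (they are not part of epwshiftr) and are only there to keep the example self-contained:

```r
# Hypothetical helper: download only when the destination file is not
# already on disk. Returns TRUE if a download was attempted, FALSE if skipped.
download_if_missing <- function(url, destfile, downloader = download.file) {
  if (file.exists(destfile)) {
    message("Skipping existing file: ", destfile)
    return(FALSE)
  }
  # mode = "wb" writes the file in binary mode, which matters for
  # NetCDF files on Windows
  downloader(url, destfile, mode = "wb")
  TRUE
}
```

Inside the loop, `download.file(url, destfile)` would become `download_if_missing(url, destfile)`. One caveat: a file left behind by a download that timed out part-way may also exist on disk, so an existence check alone may not be enough to tell complete files from partial ones.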

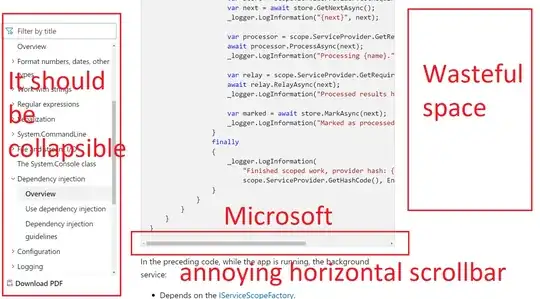

For reference, the files look like this when they are downloaded:

Any help would be appreciated. Thank you!

EDIT: Would it also be possible for the code not to stop completely in case the URL of a particular file is not responding? I noticed that some URLs take too long to respond, and R times out the operation after waiting for a certain period.
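A minimal sketch of one way this might be handled, assuming the failures surface as R errors or as a non-zero return status from `download.file()`: wrap each download in `tryCatch()` so that a failure is reported and the loop carries on, and raise the `timeout` option (60 seconds by default). The helper name `try_download` and its `downloader` argument are hypothetical and only there for illustration:

```r
# Allow up to an hour per file; the value 3600 is an arbitrary assumption.
options(timeout = 3600)

# Hypothetical helper: attempt one download and return TRUE on success,
# FALSE on failure, instead of stopping the whole script.
try_download <- function(url, destfile, downloader = download.file) {
  tryCatch({
    status <- downloader(url, destfile, mode = "wb")
    # download.file() returns 0 on success; treat anything else as a failure
    if (!identical(as.integer(status), 0L)) {
      stop("non-zero download status: ", status)
    }
    TRUE
  }, error = function(e) {
    message("Failed: ", url, " (", conditionMessage(e), ")")
    # Remove any partial file so a later run does not treat it as complete
    if (file.exists(destfile)) unlink(destfile)
    FALSE
  })
}
```

Inside the loop, the call would be `ok <- try_download(url, destfile)`, and the URLs for which `ok` is `FALSE` could be collected and retried on a later run.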