Problem

We are implementing a program that sends commands to a robot at a fixed cycle time, so it needs to be a real-time application. We set up a PC with a PREEMPT_RT Linux kernel and launch our programs with chrt -f 98 or chrt -rr 99 to set the scheduling policy and priority. Booting the kernel and launching the program both seem to work (see details below).

We then measured the time (CPU ticks) our program takes to compute. We expected this time to be nearly constant, but we measured significant variation instead. Suspecting undefined behavior in our rather complex program, we wrote a very basic program and measured it as well. The behavior was similarly bad.

Question

- Why are we not measuring a (close to) constant computation time even for our basic program?

- How can we solve this problem?

Environment Description

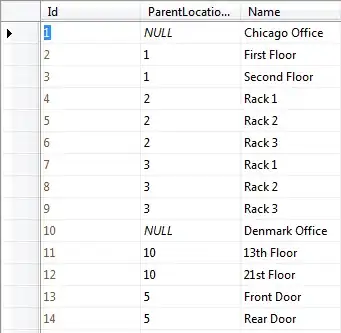

First of all, we installed an RT Linux Kernel on the PC using this tutorial. The main characteristics of the PC are:

| PC Characteristics | Details |
|---|---|
| CPU | Intel(R) Atom(TM) Processor E3950 @ 1.60GHz with 4 cores |
| Memory (RAM) | 8 GB |
| Operating System | Ubuntu 20.04.1 LTS |
| Kernel | Linux 5.9.1-rt20 SMP PREEMPT_RT |
| Architecture | x86-64 |

Tests

We first detected this problem when measuring the execution time of the "complex" program with a single thread. We ran a few tests with this program and with a simpler one, recording the following in each:

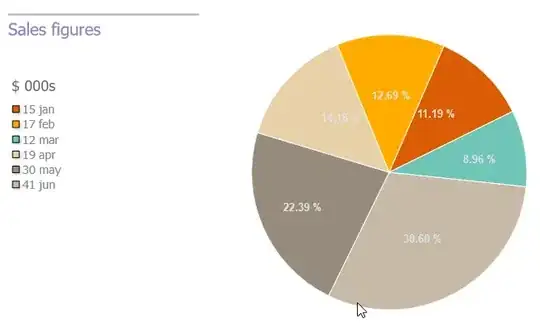

- The CPU execution times

- The wall time (the world real-time)

- The difference (Wall time - CPU time) between them and the ratio (CPU time / Wall time).
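The quantities listed above can be sketched as follows. This is a minimal illustration, assuming both timestamps of a pair come from the same clock; the names `elapsedSeconds`, `TimingSummary` and `summarize` are hypothetical helpers and not part of our test programs.

```cpp
#include <time.h>

// Elapsed seconds between two timestamps taken from the same clock.
double elapsedSeconds(const timespec& begin, const timespec& end) {
    return static_cast<double>(end.tv_sec - begin.tv_sec)
         + static_cast<double>(end.tv_nsec - begin.tv_nsec) * 1e-9;
}

// Difference and ratio as defined in the list above (hypothetical struct).
struct TimingSummary {
    double difference;  // wall time - CPU time
    double ratio;       // CPU time / wall time
};

TimingSummary summarize(double wallSeconds, double cpuSeconds) {
    TimingSummary s;
    s.difference = wallSeconds - cpuSeconds;
    // Guard against a zero wall time, which can occur when the measured
    // interval is shorter than the clock resolution.
    s.ratio = wallSeconds > 0.0 ? cpuSeconds / wallSeconds : 0.0;
    return s;
}
```

With a wall time of 0.2 s and a CPU time of 0.1 s, `summarize` yields a difference of about 0.1 s and a ratio of about 0.5.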

We also did a latency test on the PC.

Latency Test

For this one, we followed this tutorial, and these are the results:

- Latency Test Generic Kernel

- Latency Test RT Kernel

The processes are shown in htop with a priority of RT
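The policy and priority could also be checked from inside the program rather than in htop. The following is a rough sketch using the POSIX calls `sched_getscheduler` and `sched_getparam`; the function names `policyName` and `printScheduling` are hypothetical, and this check is not part of the tests we ran.

```cpp
#include <sched.h>
#include <iostream>
#include <string>

// Map a scheduling policy constant to a readable name.
std::string policyName(int policy) {
    switch (policy) {
        case SCHED_FIFO:  return "SCHED_FIFO";
        case SCHED_RR:    return "SCHED_RR";
        case SCHED_OTHER: return "SCHED_OTHER";
        default:          return "other (" + std::to_string(policy) + ")";
    }
}

// Print the scheduling policy and static priority of the caller.
// A pid of 0 refers to the calling thread.
void printScheduling() {
    int policy = sched_getscheduler(0);
    sched_param param{};
    if (policy == -1 || sched_getparam(0, &param) == -1) {
        std::cerr << "could not query scheduling attributes" << std::endl;
        return;
    }
    std::cout << "policy: " << policyName(policy)
              << ", priority: " << param.sched_priority << std::endl;
}
```

Calling `printScheduling()` at the start of `main` when the program is launched through chrt should report the real-time policy requested on the command line, assuming the launch succeeded.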

Test Program - Complex

We called the function multiple times in the program and measured the time each takes. The results of the 2 tests are:

From this we observed that:

- The first execution (around 0.28 ms) always takes longer than the second one (around 0.18 ms), but most of the time it is not the longest iteration.

- The mode is around 0.17 ms.

- For those that take 0.17 ms, the difference is usually 0 and the ratio 1, although this is not exclusive to that duration. In these cases it seems that only 1 CPU is being used and it is saturated (there is no waiting time).

- When the difference is not 0, it is usually negative. This, from what we have read here and here, is because more than 1 CPU is being used.

Test Program - Simple

We did the same test but this time with a simpler program:

    #include <vector>
    #include <iostream>
    #include <time.h>

    int main(int argc, char** argv) {
        int iterations = 5000;
        double a = 5.5;
        double b = 5.5;
        double c = 4.5;
        std::vector<double> wallTime(iterations, 0);
        std::vector<double> cpuTime(iterations, 0);
        struct timespec beginWallTime, endWallTime, beginCPUTime, endCPUTime;

        std::cout << "Iteration | WallTime | cpuTime" << std::endl;

        for (unsigned int i = 0; i < iterations; i++) {
            // Start measuring time
            clock_gettime(CLOCK_REALTIME, &beginWallTime);
            clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &beginCPUTime);

            // Function
            a = b + c + i;

            // Stop measuring time and calculate the elapsed time
            clock_gettime(CLOCK_REALTIME, &endWallTime);
            clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &endCPUTime);

            wallTime[i] = (endWallTime.tv_sec - beginWallTime.tv_sec) + (endWallTime.tv_nsec - beginWallTime.tv_nsec)*1e-9;
            cpuTime[i] = (endCPUTime.tv_sec - beginCPUTime.tv_sec) + (endCPUTime.tv_nsec - beginCPUTime.tv_nsec)*1e-9;

            std::cout << i << " | " << wallTime[i] << " | " << cpuTime[i] << std::endl;
        }

        return 0;
    }
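One variation of this test that might help separate measurement effects from scheduling effects is sketched below. It is only a sketch under assumptions, not what we ran: it swaps `CLOCK_REALTIME` for `CLOCK_MONOTONIC` (which is not affected by wall-clock adjustments), stores the samples in a preallocated vector, and leaves printing to the caller so that console I/O does not happen inside the timed loop. The names `Sample`, `secondsBetween` and `measure` are hypothetical.

```cpp
#include <time.h>
#include <vector>

// One measurement: wall-clock and CPU time of a single iteration, in seconds.
struct Sample {
    double wall;
    double cpu;
};

// Elapsed seconds between two timestamps taken from the same clock.
static double secondsBetween(const timespec& begin, const timespec& end) {
    return static_cast<double>(end.tv_sec - begin.tv_sec)
         + static_cast<double>(end.tv_nsec - begin.tv_nsec) * 1e-9;
}

// Run the same trivial computation as the simple test program and
// return all samples; nothing is printed inside the loop.
std::vector<Sample> measure(int iterations) {
    std::vector<Sample> samples(iterations);  // allocated before timing starts
    volatile double a = 5.5;                  // volatile so the store is not optimized away
    const double b = 5.5;
    const double c = 4.5;
    timespec beginWall, endWall, beginCpu, endCpu;

    for (int i = 0; i < iterations; ++i) {
        clock_gettime(CLOCK_MONOTONIC, &beginWall);
        clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &beginCpu);

        a = b + c + i;  // function under test

        clock_gettime(CLOCK_MONOTONIC, &endWall);
        clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &endCpu);

        samples[i].wall = secondsBetween(beginWall, endWall);
        samples[i].cpu = secondsBetween(beginCpu, endCpu);
    }
    (void)a;
    return samples;
}
```

The caller would invoke `measure(5000)` and print the returned samples after the loop has finished. Whether this changes the observed variation on our machine is something we have not verified.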

Final Thoughts

We understand that:

- If the ratio == number of CPUs used, they are saturated and there is no waiting time.

- If the ratio < number of CPUs used, it means that there is some waiting time (theoretically we should only be using 1 CPU, although in practice we use more).

Of course, we can give more details.

Thanks a lot for your help!