I have two images, let's call them image 1 and image 2. I can use the following to select a random feature in image 1 and display it:

segall_prev = cv2.imread(str(Path(seg_seg_output_path, prev_filename)), 0);

segall_prev = np.uint8(segall_prev)

ret, thresh = cv2.threshold(segall_prev, 127, 255, 0)

numLabels, labelImage, stats, centroids = cv2.connectedComponentsWithStats(thresh, 8)

# Pick random prev-seg feature

random_prev_seg = random.randint(0, np.amax(labelImage))

i = np.unique(labelImage)[random_prev_seg]

pixels = np.argwhere(labelImage == random_prev_seg)

labelMask = np.zeros(thresh.shape, dtype="uint8")

labelMask[labelImage == i] = 1

numPixels = cv2.countNonZero(labelMask)

# Display chosen feature from prev_seg image only

fig_segall_prev = plt.figure()

fig_segall_prev_ = plt.imshow(labelMask)

plt.title(prev_filename + ' prev_seg')

Which will display an image such as:

So the idea is that that is the feature from the previous frame (image 1), and then the user will select where that feature is in the next frame (image 2) - basically tracing the object across frames.

# Display seg image and allow click

seg_ret, seg_thresh = cv2.threshold(segall, 127, 255, 0)

seg_numLabels, seg_labelImage, seg_stats, seg_centroids = cv2.connectedComponentsWithStats(seg_thresh, 8)

mutable_object = {}

def onclick(event):

# Capture click pixel location

X_coordinate = int(event.xdata)

Y_coordinate = int(event.ydata)

mutable_object['click'] = X_coordinate

print('x= ' + str(X_coordinate))

print('y= ' + str(Y_coordinate))

# Compare captured location with feature locations

x = np.where(seg_labelImage == 1)

#print(x[0])

if X_coordinate in x[0] and Y_coordinate in x[1]:

print('yes')

fig_segall = plt.figure()

cid = fig_segall.canvas.mpl_connect('button_press_event', onclick)

fig_segall_ = plt.imshow(seg_labelImage)

plt.title(filename + ' seg')

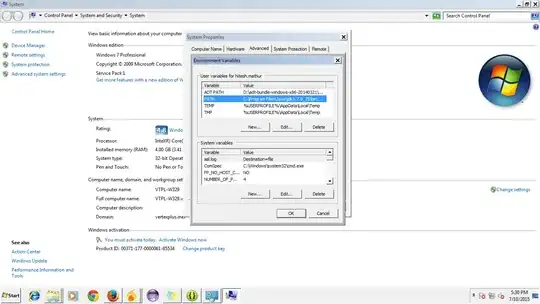

When image 2 is shown it is as follows:

So my question is, how do I go about capturing the location where the user clicks in the second image and then check whether those x-y coordinates correspond to where a feature is as found by cv2.connectedComponentsWithStats? I then need to save just that feature as a separate image and then use the location of the selected feature for use in the corresponding colour image.

If anyone can help, or suggest ways to improve the code - because it is very messy as I've been trying to figure this out for hours now... then that would be much appreciated. Thanks!

The solution (thanks to asdf):

seg_numLabels, seg_labelImage, seg_stats, seg_centroids = cv2.connectedComponentsWithStats(seg_thresh, 8)

selected_features = {}

def onclick(event):

# Capture click pixel location

X_coordinate = int(event.xdata)

Y_coordinate = int(event.ydata)

print(f'{X_coordinate=}, {Y_coordinate=}')

obj_at_click = seg_labelImage[Y_coordinate, X_coordinate] # component label at x/y, 0 is background, above are components

if obj_at_click != 0: # not background

# clicked on a feature

selected_feature = np.unique(seg_labelImage) == obj_at_click # get only the selected feature

selected_features[filename] = selected_feature

print(f'Saved feature number {obj_at_click}')

else:

print('Background clicked')

# Display seg image

fig_segall = plt.figure()

fig_segall.canvas.mpl_connect('button_press_event', onclick)

plt.imshow(segall)

plt.title(filename + ' seg')