I run a bare-metal Kubernetes cluster on seven Raspberry Pi 4B boards with 8 GB of RAM each.

I also installed Flannel for communication between the nodes, MetalLB as an external load balancer, and Istio to handle incoming traffic.

To test my setup, I run a small Rust application that immediately responds to each request with an HTTP 200. This application runs in the ping pod.

This is the YAML that installs the pod and everything related to it:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ping-deployment
      labels:
        app: ping
    spec:
      replicas: 2
      template:
        metadata:
          name: ping
          labels:
            app: ping
            version: 0.0.4
        spec:
          tolerations:
            - key: "node.kubernetes.io/unreachable"
              operator: "Exists"
              effect: "NoExecute"
              tolerationSeconds: 30
            - key: "node.kubernetes.io/not-ready"
              operator: "Exists"
              effect: "NoExecute"
              tolerationSeconds: 30
          containers:
            - name: ping
              image: dasralph/ping:arm64_0.0.4
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8080
          restartPolicy: Always
      selector:
        matchLabels:
          app: ping
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ping-service
    spec:
      selector:
        app: ping
      ports:
        - port: 8080
          name: http
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: ping-gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
        - port:
            number: 80
            name: http
            protocol: HTTP
          hosts:
            - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: ping
    spec:
      hosts:
        - "*"
      gateways:
        - ping-gateway
      http:
        - match:
            - uri:
                exact: /ping
          route:
            - destination:
                host: ping-service
                port:
                  number: 8080

The cluster now looks like this:

    $ kubectl get pods --all-namespaces
    NAMESPACE        NAME                                                READY   STATUS     RESTARTS        AGE
    default          ping-deployment-5f76577d6-rrrpf                     2/2     Running    2 (10h ago)     10h
    default          ping-deployment-5f76577d6-xs8td                     2/2     Running    0               79m
    default          prometheus-kube-prometheus-admission-create-b78k8   1/2     NotReady   2               79m
    istio-system     grafana-78588947bf-fckfh                            1/1     Running    0               9h
    istio-system     istio-egressgateway-5ff98855b6-nvd75                1/1     Running    1 (10h ago)     12h
    istio-system     istio-ingressgateway-8695fcdd7b-58576               1/1     Running    0               10h
    istio-system     istiod-866c945f4d-dqhzf                             1/1     Running    0               9h
    istio-system     jaeger-b5874fcc6-s4t6l                              1/1     Running    0               10h
    istio-system     kiali-575cc8cbf-r2xd8                               1/1     Running    1 (10h ago)     10h
    istio-system     prometheus-6544454f65-479t6                         2/2     Running    0               9h
    kube-system      coredns-6d4b75cb6d-99lr4                            1/1     Running    5 (10h ago)     5d8h
    kube-system      coredns-6d4b75cb6d-tmjcx                            1/1     Running    5 (10h ago)     5d8h
    kube-system      etcd-raspi1                                         1/1     Running    6 (9h ago)      5d8h
    kube-system      kube-apiserver-raspi1                               1/1     Running    5 (9h ago)      5d8h
    kube-system      kube-controller-manager-raspi1                      1/1     Running    5 (9h ago)      5d8h
    kube-system      kube-flannel-ds-29mdq                               1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-flannel-ds-68b2x                               1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-flannel-ds-ls8zl                               1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-flannel-ds-lz4mw                               1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-flannel-ds-vx8kp                               1/1     Running    6 (10h ago)     5d8h
    kube-system      kube-flannel-ds-xgv9s                               1/1     Running    7 (10h ago)     5d8h
    kube-system      kube-flannel-ds-z28gq                               1/1     Running    5 (9h ago)      5d8h
    kube-system      kube-proxy-2p77z                                    1/1     Running    5 (9h ago)      5d8h
    kube-system      kube-proxy-6xpkc                                    1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-proxy-7ckpf                                    1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-proxy-8glss                                    1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-proxy-g2gnj                                    1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-proxy-kqksp                                    1/1     Running    6 (10h ago)     5d8h
    kube-system      kube-proxy-qbl5v                                    1/1     Running    5 (10h ago)     5d8h
    kube-system      kube-scheduler-raspi1                               1/1     Running    5 (9h ago)      5d8h
    metallb-system   controller-7476b58756-gzvxr                         1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-5kgh6                                       1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-6ft5z                                       1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-cl8n8                                       1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-lr6sw                                       1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-v27tm                                       1/1     Running    2 (10h ago)     39h
    metallb-system   speaker-z5nbl                                       1/1     Running    2 (10h ago)     39h

    $ kubectl get services --all-namespaces
    NAMESPACE        NAME                                 TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
    default          kubernetes                           ClusterIP      10.96.0.1        <none>        443/TCP                                                                      5d8h
    default          ping-service                         ClusterIP      10.97.131.254    <none>        8080/TCP                                                                     11h
    istio-system     grafana                              ClusterIP      10.98.194.129    <none>        3000/TCP                                                                     10h
    istio-system     istio-egressgateway                  ClusterIP      10.108.47.254    <none>        80/TCP,443/TCP                                                               12h
    istio-system     istio-ingressgateway                 LoadBalancer   10.104.219.165   10.0.0.3      15021:31687/TCP,80:31856/TCP,443:32489/TCP,31400:30287/TCP,15443:30617/TCP   12h
    istio-system     istiod                               ClusterIP      10.107.79.224    <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP                                        12h
    istio-system     jaeger-collector                     ClusterIP      10.100.10.165    <none>        14268/TCP,14250/TCP,9411/TCP                                                 10h
    istio-system     kiali                                LoadBalancer   10.108.177.140   10.0.0.4      20001:32207/TCP,9090:31519/TCP                                               10h
    istio-system     prometheus                           ClusterIP      10.110.13.41     <none>        9090/TCP                                                                     10h
    istio-system     tracing                              ClusterIP      10.101.194.169   <none>        80/TCP,16685/TCP                                                             10h
    istio-system     zipkin                               ClusterIP      10.108.22.152    <none>        9411/TCP                                                                     10h
    kube-system      kube-dns                             ClusterIP      10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP                                                       5d8h
    kube-system      prometheus-kube-prometheus-kubelet   ClusterIP      None             <none>        10250/TCP,10255/TCP,4194/TCP                                                 11h

    $ istioctl analyze
    ✔ No validation issues found when analyzing namespace: default.

After everything was set up, I ran some tests and found that the ping is much slower when I access it via Istio.

Benchmark ping with 2 pods via ping-service:

    $ wrk -t12 -c400 -d30s --latency http://10.97.131.254:8080/ping
    Running 30s test @ http://10.97.131.254:8080/ping
      12 threads and 400 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   135.59ms   21.80ms 282.44ms   69.58%
        Req/Sec   244.19     40.80   353.00     70.31%
      Latency Distribution
         50%  133.20ms
         75%  151.27ms
         90%  160.07ms
         99%  205.07ms
      87440 requests in 30.07s, 19.85MB read
    Requests/sec:   2908.20
    Transfer/sec:    675.99KB

Benchmark ping with 2 pods via istio-ingressgateway:

    $ wrk -t12 -c400 -d30s --latency http://10.0.0.3/ping
    Running 30s test @ http://10.0.0.3/ping
      12 threads and 400 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   410.99ms  159.21ms   1.85s    78.58%
        Req/Sec    82.24     37.46   252.00     65.66%
      Latency Distribution
         50%  387.92ms
         75%  475.87ms
         90%  578.71ms
         99%    1.05s
      29030 requests in 30.08s, 4.51MB read
    Requests/sec:    965.25
    Transfer/sec:    153.61KB

With Istio, I get only about a third of the throughput that I get when accessing the service directly. But it gets even worse.

Benchmark ping with 6 pods via ping-service:

    $ wrk -t12 -c400 -d30s --latency http://10.97.131.254:8080/ping
    Running 30s test @ http://10.97.131.254:8080/ping
      12 threads and 400 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    56.22ms   25.90ms 447.45ms   90.03%
        Req/Sec   607.64     62.02     1.78k    73.58%
      Latency Distribution
         50%   49.45ms
         75%   60.03ms
         90%   79.33ms
         99%  168.56ms
      218021 requests in 30.10s, 49.49MB read
    Requests/sec:   7243.38
    Transfer/sec:      1.64MB

Benchmark ping with 6 pods via istio-ingressgateway:

    $ wrk -t12 -c400 -d30s --latency http://10.0.0.3/ping
    Running 30s test @ http://10.0.0.3/ping
      12 threads and 400 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   470.44ms  204.97ms   1.88s    86.25%
        Req/Sec    74.85     34.41   191.00     60.75%
      Latency Distribution
         50%  436.67ms
         75%  528.83ms
         90%  638.73ms
         99%    1.50s
      25720 requests in 30.07s, 4.00MB read
    Requests/sec:    855.41
    Transfer/sec:    136.15KB
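
To put the four runs side by side, here is a small Python sketch that derives the ratios from the Requests/sec values wrk reported above. Only the four numbers come from the benchmarks; the dictionary layout and the helper names `gateway_share` and `scaling_factor` are made up for this illustration.

```python
# Requests/sec as reported by wrk in the four runs above.
# Keys are (target, number of ping pods); the layout is illustrative only.
REQUESTS_PER_SEC = {
    ("ping-service", 2): 2908.20,
    ("istio-ingressgateway", 2): 965.25,
    ("ping-service", 6): 7243.38,
    ("istio-ingressgateway", 6): 855.41,
}


def gateway_share(pods: int) -> float:
    """Fraction of the direct throughput that is reached via the gateway."""
    direct = REQUESTS_PER_SEC[("ping-service", pods)]
    via_gateway = REQUESTS_PER_SEC[("istio-ingressgateway", pods)]
    return via_gateway / direct


def scaling_factor(target: str) -> float:
    """Throughput with 6 pods divided by throughput with 2 pods."""
    return REQUESTS_PER_SEC[(target, 6)] / REQUESTS_PER_SEC[(target, 2)]


if __name__ == "__main__":
    for pods in (2, 6):
        share = gateway_share(pods)
        print(f"{pods} pods: gateway reaches {share:.1%} of direct throughput")
    for target in ("ping-service", "istio-ingressgateway"):
        print(f"{target}: 6 pods vs 2 pods = {scaling_factor(target):.2f}x")
```

With these numbers, the gateway reaches about 33% of the direct throughput with 2 pods and about 12% with 6 pods. Going from 2 to 6 pods scales the direct path by roughly 2.5x, while the gateway path drops to roughly 0.9x.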

Q1: Why is the istio-ingressgateway much slower than the ping-service?

Q2: Why is the istio-ingressgateway slower with 6 pods? Shouldn't there be a performance boost?

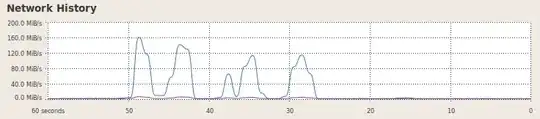

I also installed kiali to find the bottleneck but everything I found was this:

Edit: I found out that I get more details when I click on a bubble in the Traces view:

But now I have more questions.

Q3: Why do some spans involve only one app while others involve two?

Q4: It looks like the istio-ingressgateway is the bottleneck, but how can I fix it?