I am trying to connect to a PostgreSQL database initialized within a Dockerized Django project. I am currently using the Python package psycopg2 inside a Jupyter notebook to connect to the database and add/manipulate data in it.

With the code:

```python
import psycopg2

connector = psycopg2.connect(
    database="postgres",
    user="postgres",
    password="postgres",
    host="postgres",
    port="5432",
)
```

It raises the following error:

```
OperationalError: could not translate host name "postgres" to address: Unknown host
```
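The error is raised during name resolution: psycopg2 (through libpq) has to resolve the host name to an address before it can open a connection. Docker Compose service names such as `postgres` are resolvable from containers attached to the Compose network, but not, by default, from processes running on the host. Assuming the notebook runs on the host rather than in a container, this failure is therefore expected. A minimal stdlib sketch (the helper name `can_resolve` is hypothetical) to check which names resolve from wherever the notebook runs:

```python
import socket


def can_resolve(host: str, port: int = 5432) -> bool:
    """Return True if `host` resolves to at least one TCP address from this machine.

    Hypothetical diagnostic helper; it only tests name resolution, not whether
    a PostgreSQL server is actually listening.
    """
    try:
        return len(socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)) > 0
    except socket.gaierror:
        # Raised when the name cannot be translated to an address,
        # the same condition libpq reports as "could not translate host name".
        return False


# Usage (run inside the notebook):
# for candidate in ("postgres", "localhost"):
#     print(candidate, "->", can_resolve(candidate))
```

If `postgres` resolves only when the same check is run inside one of the Compose containers, the notebook and the database are on different networks.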

Meanwhile, it connects correctly to my local database named postgres when I use localhost or 127.0.0.1 as the host, but that is not the database I want to access. How can I connect from Python to the database running in the Docker container? Should I change something in the project setup?
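One way to keep the notebook usable both inside and outside Docker is to avoid hard-coding the host. libpq, which psycopg2 wraps, reads the standard `PGHOST`, `PGPORT`, `PGDATABASE`, `PGUSER` and `PGPASSWORD` environment variables; the sketch below mirrors them into explicit keyword arguments. The helper name `pg_connect_kwargs` and the `localhost:5432` defaults are assumptions (the port matches the one published in the compose file, the credentials match its `POSTGRES_*` settings):

```python
import os


def pg_connect_kwargs(env=None):
    """Build psycopg2.connect() keyword arguments from libpq-style environment variables.

    Hypothetical helper. The defaults assume the notebook runs on the host and
    reaches the container through a published port on localhost.
    """
    env = os.environ if env is None else env
    return {
        "dbname": env.get("PGDATABASE", "postgres"),
        "user": env.get("PGUSER", "postgres"),
        "password": env.get("PGPASSWORD", "postgres"),
        # "postgres" only resolves inside the Compose network; use it there via PGHOST.
        "host": env.get("PGHOST", "localhost"),
        "port": int(env.get("PGPORT", "5432")),
    }


# Usage (requires psycopg2 and a reachable server):
# import psycopg2
# connector = psycopg2.connect(**pg_connect_kwargs())
```

Inside a Compose container one would set `PGHOST=postgres`; on the host, `localhost` plus whatever host port the container is published on.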

You can find the GitHub repository here. Many thanks!

docker-compose.yml:

```yaml
version: '3.8'

services:
  web:
    restart: always
    build: ./web
    expose:
      - "8000"
    links:
      - postgres:postgres
      - redis:redis
    volumes:
      - web-django:/usr/src/app
      - web-static:/usr/src/app/static
    env_file: .env
    environment:
      DEBUG: 'true'
    command: /usr/local/bin/gunicorn docker_django.wsgi:application -w 2 -b :8000

  nginx:
    restart: always
    build: ./nginx/
    ports:
      - "80:80"
    volumes:
      - web-static:/www/static
    links:
      - web:web

  postgres:
    restart: always
    image: postgres:latest
    hostname: postgres
    ports:
      - "5432:5432"
    volumes:
      - pgdata:/var/lib/postgresql/data/
    environment:
      POSTGRES_DB: postgres
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres

  pgadmin:
    image: dpage/pgadmin4
    depends_on:
      - postgres
    ports:
      - "5050:80"
    environment:
      PGADMIN_DEFAULT_EMAIL: pgadmin4@pgadmin.org
      PGADMIN_DEFAULT_PASSWORD: admin
    restart: unless-stopped

  redis:
    restart: always
    image: redis:latest
    ports:
      - "6379:6379"
    volumes:
      - redisdata:/data

volumes:
  web-django:
  web-static:
  pgdata:
  redisdata:
```
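In this file, `ports: - "5432:5432"` publishes the container's PostgreSQL port on the host's port 5432 (the format is `HOST:CONTAINER`), which is also the default port of a locally installed PostgreSQL server. Two servers wanting the same host port may conflict: depending on the platform, the container's port mapping may fail, or connections to `localhost:5432` may reach the local server instead. A minimal stdlib probe (the helper name `port_accepts_tcp` is hypothetical) shows whether *something* accepts TCP connections on a given host and port; it cannot tell which server answered:

```python
import socket


def port_accepts_tcp(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds.

    Hypothetical diagnostic helper. socket.gaierror and connection errors are
    both subclasses of OSError, so unresolvable hosts and closed ports return False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage: compare with the container stopped and running.
# print("localhost:5432 open?", port_accepts_tcp("localhost", 5432))
```

If the mapping were changed to, for example, `"5433:5432"`, the container would be reachable from the host at `localhost:5433`, leaving 5432 to the local server.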

Dockerfile:

```dockerfile
FROM python:3.7-slim

RUN python -m pip install --upgrade pip

COPY requirements.txt requirements.txt

RUN python -m pip install -r requirements.txt

COPY . .
```

Edit:

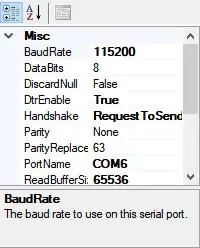

To verify that localhost is not the correct host, I compared the tables visible in pgAdmin (which connects to the correct host) with those visible through psycopg2: