I have a 37 GB .npy file that I would like to convert to a Zarr store so that I can include coordinate labels. I have code that should do this, but I keep running out of memory, even though I route the data through Dask so that it is processed in chunks.

The data are "thickness maps" of people's femoral cartilage. Each map is a 310x310 float array, and there are 47789 of these maps, so the data shape is (47789, 310, 310).
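
As a sanity check on those numbers, here is the size arithmetic. It assumes the file stores 8-byte float64 values, which is not stated above but matches the 37 GB file size; the float32 line corresponds to the `float32` encoding requested in Step 3.

```python
# Shape taken from the description above.
n_maps, nx, ny = 47789, 310, 310
n_values = n_maps * nx * ny

# Assumption: the .npy file holds float64 (8 bytes per value).
bytes_f64 = n_values * 8
# Size after casting to float32 (4 bytes per value), before any compression.
bytes_f32 = n_values * 4

print(f"{n_values:,} values")                 # 4,592,522,900 values
print(f"float64: {bytes_f64 / 1e9:.1f} GB")   # float64: 36.7 GB
print(f"float32: {bytes_f32 / 1e9:.1f} GB")   # float32: 18.4 GB
```

So a single worker that materializes the whole array would need roughly the full 37 GB.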

Step 1: Load the npy file as a memmapped Dask array.

    import dask.array
    import numpy as np

    fem_dask = dask.array.from_array(np.load('/Volumes/T7/cartilagenpy20220602/femoral.npy', mmap_mode='r'),
                                     chunks=(300, -1, -1))
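
For reference, this is the chunk layout that `chunks=(300, -1, -1)` should produce, again assuming float64 values (an assumption, not something confirmed above). Each chunk is small relative to the total, so the chunking by itself should not need tens of gigabytes.

```python
import math

n_maps, nx, ny = 47789, 310, 310
rows_per_chunk = 300      # from chunks=(300, -1, -1)
itemsize = 8              # assumption: float64

# Number of chunks along the 'map' axis; x and y are not split.
n_chunks = math.ceil(n_maps / rows_per_chunk)
# The final chunk holds whatever is left over.
last_chunk_rows = n_maps - (n_chunks - 1) * rows_per_chunk
# Bytes in one full chunk once it is loaded into memory.
chunk_bytes = rows_per_chunk * nx * ny * itemsize

print(n_chunks)                                  # 160
print(last_chunk_rows)                           # 89
print(f"{chunk_bytes / 1e6:.1f} MB per chunk")   # 230.6 MB per chunk
```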

Step 2: Make an xarray DataArray over the Dask array, with the desired coordinates. I have several coordinates for the 'map' dimension that come from metadata (a pandas dataframe).

    import xarray as xr

    fem_xr = xr.DataArray(
        fem_dask, dims=['map', 'x', 'y'],
        # np.bytes_ is the same type as np.string_, which was removed in NumPy 2.0
        coords={'patient_id': ('map', metadata['patient_id']),
                'side': ('map', metadata['side'].astype(np.bytes_)),
                'timepoint': ('map', metadata['timepoint'])})

Step 3: Write to Zarr.

    fem_ds = fem_xr.to_dataset(name='femoral')  # Zarr requires Dataset, not DataArray
    res = fem_ds.to_zarr('/Volumes/T7/femoral.zarr',
                         encoding={'femoral': {'dtype': 'float32'}},
                         compute=False)
    res.visualize()

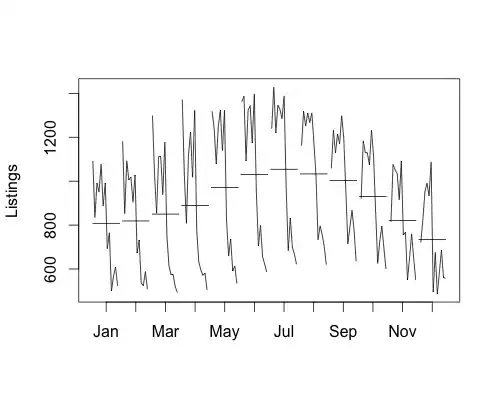

See the task graph below if desired:

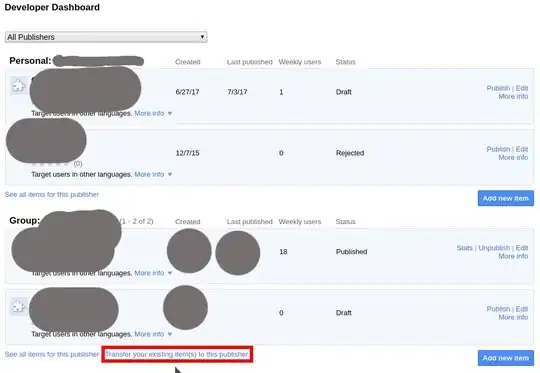

When I call res.compute(), RAM use quickly climbs out of control. The other Python processes, which I think are the Dask workers, seem to be inactive:

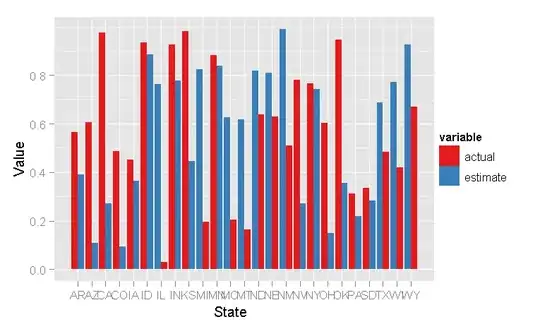

A bit later they become active: one of those Python processes is now using 20 GB of RAM and another 36 GB, which the Dask dashboard confirms.

Eventually all the workers get killed and the task errors out. How can I do this in an efficient way that correctly uses Dask, xarray, and Zarr, without running out of RAM (or melting the laptop)?
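
One variant that might be worth trying is to have each task open the memmap itself and copy out only its own rows, rather than handing a single memmap object to `from_array`. The sketch below shows that pattern on a tiny synthetic file. `load_block` and `lazy_npy` are hypothetical helper names, the demo array stands in for femoral.npy, and whether this changes the memory behaviour on the real data is untested.

```python
import os
import tempfile

import dask
import dask.array as da
import numpy as np


def load_block(path, start, stop):
    """Open the .npy file as a memmap and copy rows start:stop into RAM."""
    mm = np.load(path, mmap_mode="r")
    return np.array(mm[start:stop])  # only this block is materialized


def lazy_npy(path, block_rows):
    """Build a Dask array whose chunks each open the file on their own."""
    header = np.load(path, mmap_mode="r")  # used only for shape and dtype
    n_rows, rest, dtype = header.shape[0], header.shape[1:], header.dtype
    blocks = []
    for start in range(0, n_rows, block_rows):
        stop = min(start + block_rows, n_rows)
        delayed_block = dask.delayed(load_block)(path, start, stop)
        blocks.append(
            da.from_delayed(delayed_block, shape=(stop - start, *rest), dtype=dtype)
        )
    return da.concatenate(blocks, axis=0)


# Tiny synthetic stand-in for femoral.npy (10 "maps" of 4x4).
tmp_dir = tempfile.mkdtemp()
demo_path = os.path.join(tmp_dir, "demo.npy")
demo = np.arange(10 * 4 * 4, dtype="float64").reshape(10, 4, 4)
np.save(demo_path, demo)

lazy = lazy_npy(demo_path, block_rows=3)
result = lazy.compute()  # round-trips the synthetic data through the lazy chunks
```

With the real file, `lazy_npy(path, block_rows=300)` would take the place of the `from_array` call in Step 1, and Steps 2 and 3 would stay the same.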