I am using the tempdisagg R package to benchmark quarterly time series to annual time series from other, more trusted sources. I do this by temporally disaggregating the annual data, using the quarterly data as indicator series.
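For context, here is a minimal sketch of this kind of benchmarking call. It uses simulated data: `x_q` (quarterly indicator) and `y_a` (annual benchmark) are hypothetical stand-ins, not the real series. The last line checks that the benchmarked quarterly series adds up to the annual totals, which is what `conversion = "sum"` requests.

```r
library(tempdisagg)

set.seed(1)
# Hypothetical quarterly indicator, 2000Q1-2019Q4
x_q <- ts(100 + cumsum(rnorm(80, mean = 0.5)), start = c(2000, 1), frequency = 4)
# Hypothetical annual benchmark from a "more trusted" source, 2000-2019:
# annual sums of the indicator, scaled and perturbed
y_a <- aggregate(x_q, nfrequency = 1, FUN = sum) * 1.05 +
  ts(rnorm(20, sd = 5), start = 2000, frequency = 1)

# Denton-Cholette takes a single indicator and no intercept
mod <- td(y_a ~ 0 + x_q, conversion = "sum", method = "denton-cholette")
y_q <- predict(mod)

# The quarterly result should add up to the annual benchmark
all.equal(as.numeric(aggregate(y_q, nfrequency = 1, FUN = sum)), as.numeric(y_a))
# expected: TRUE
```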

The series consist of sub-series and sum series, and the identities linking them should still hold after benchmarking. That is, if

S = A + B - C,

then

`predict(td(S, ...)) = predict(td(A, ...)) + predict(td(B, ...)) - predict(td(C, ...))`.

I have tried the Denton-Cholette and the Chow-Lin-maxlog methods.
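A sketch for checking the identity numerically under each method, assuming simulated sub-series that satisfy S = A + B - C at both frequencies. The data and the helpers `bench()` and `identity_gap()` are hypothetical. Because the additive variant of Denton-Cholette (`criterion = "additive"`) is a linear function of the annual data and the indicator, one would expect it to preserve the identity up to rounding error. The default proportional criterion, and Chow-Lin-maxlog (which estimates its autoregressive parameter and regression coefficients separately for each series), are not linear in the inputs and generally will not.

```r
library(tempdisagg)

set.seed(42)
# Hypothetical quarterly indicators for the sub-series, 2000Q1-2019Q4
mk_q <- function(level) {
  ts(level + cumsum(rnorm(80, mean = 0.3)), start = c(2000, 1), frequency = 4)
}
a_q <- mk_q(100)
b_q <- mk_q(80)
c_q <- mk_q(30)
s_q <- a_q + b_q - c_q  # the sum series satisfies the identity by construction

# Hypothetical annual benchmarks satisfying the same identity
to_annual <- function(x) aggregate(x, nfrequency = 1, FUN = sum)
noise <- function() ts(rnorm(20, sd = 3), start = 2000, frequency = 1)
a_a <- to_annual(a_q) + noise()
b_a <- to_annual(b_q) + noise()
c_a <- to_annual(c_q) + noise()
s_a <- a_a + b_a - c_a

# Benchmark one annual series to one quarterly indicator (hypothetical helper)
bench <- function(y, x, method, ...) {
  mod <- if (startsWith(method, "denton")) {
    td(y ~ 0 + x, method = method, ...)  # Denton methods: no intercept
  } else {
    td(y ~ x, method = method, ...)
  }
  predict(mod)
}

# Largest absolute violation of S = A + B - C after benchmarking
identity_gap <- function(method, ...) {
  lhs <- bench(s_a, s_q, method, ...)
  rhs <- bench(a_a, a_q, method, ...) + bench(b_a, b_q, method, ...) -
    bench(c_a, c_q, method, ...)
  max(abs(lhs - rhs))
}

gaps <- c(
  denton_proportional = identity_gap("denton-cholette"),
  denton_additive     = identity_gap("denton-cholette", criterion = "additive"),
  chow_lin_maxlog     = identity_gap("chow-lin-maxlog")
)
print(gaps)  # denton_additive should be at rounding-error level
```

Substituting the real series for the simulated ones shows how large the discrepancy is in practice for each method.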

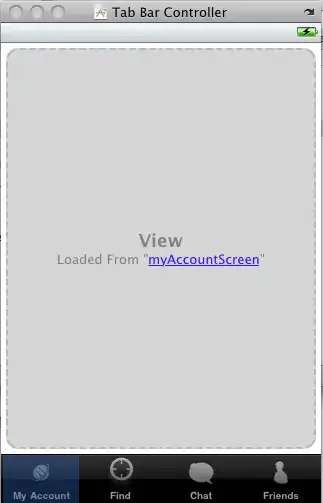

This is to be carried out regularly, so ideally I would like a disaggregation method that minimises revisions. I have tried removing up to ten years' worth of data from various time series to see whether one method outperforms the other in terms of revisions, but the outcome seems to depend on a combination of the volatility of the series and the method, and I cannot reach a conclusion.
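The revision exercise described above could be automated along these lines. This is a sketch under stated assumptions: the data are simulated, the helpers `vintage()` and `mean_abs_revision()` are hypothetical, each vintage cuts the annual series and the indicator at the same year, and revisions are measured as the mean absolute percentage difference between each earlier vintage's 2000-2009 quarterly estimates and those of the latest vintage. Other measures (for example, revisions to the most recent years only) may matter more in practice.

```r
library(tempdisagg)

set.seed(7)
# Hypothetical data: quarterly indicator 2000Q1-2019Q4, annual benchmark 2000-2019
x_q <- ts(100 + cumsum(rnorm(80, mean = 0.5)), start = c(2000, 1), frequency = 4)
y_a <- aggregate(x_q, nfrequency = 1, FUN = sum) * 1.05 +
  ts(rnorm(20, sd = 5), start = 2000, frequency = 1)

# One data vintage: benchmark using data up to `last_year` only.
# Annual series and indicator are cut at the same year (no extrapolation).
vintage <- function(last_year, method, ...) {
  y <- window(y_a, end = last_year)
  x <- window(x_q, end = c(last_year, 4))
  mod <- if (startsWith(method, "denton")) {
    td(y ~ 0 + x, method = method, ...)
  } else {
    td(y ~ x, method = method, ...)
  }
  predict(mod)
}

# Mean absolute revision (in %) of the 2000-2009 quarterly estimates,
# comparing vintages 2010-2018 with the latest vintage (2019)
mean_abs_revision <- function(method, ...) {
  final <- window(vintage(2019, method, ...), end = c(2009, 4))
  revs <- numeric(0)
  for (yr in 2010:2018) {
    early <- window(vintage(yr, method, ...), end = c(2009, 4))
    revs <- c(revs, 100 * mean(abs(early / final - 1)))
  }
  mean(revs)
}

revisions <- c(
  denton_proportional = mean_abs_revision("denton-cholette"),
  denton_additive     = mean_abs_revision("denton-cholette", criterion = "additive"),
  chow_lin_maxlog     = mean_abs_revision("chow-lin-maxlog")
)
print(round(revisions, 3))
```

Running the same loop over several real series, with their own volatility, would make the dependence on series and method visible side by side.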

I suppose it would also be possible to use different methods for different sub-series.

Is there a comprehensive body of knowledge on benchmarking and revisions?

I have attached some graphs to illustrate the problem. Ideally, the lines for the different years of data would coincide, so that there appears to be a single line that only changes colour from one data year to the next, as in the first two graphs until about 2015. The black lines in the graphs are the raw data.