Intro:

You chose the wrong OpenCV function for the job. Adaptive threshold does not use one threshold for the whole image: for every pixel, it computes a separate threshold from a small square neighborhood (the blockSize parameter) around that pixel, independently of the rest of the image.

What you need is OpenCV's global threshold function: cv2.threshold, which allows you to set a single threshold value for the entire image.

Go through this documentation page for more details.
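To make the contrast concrete, here is a toy pure-Python model of what a global binary threshold does: one value, compared against every pixel. It follows the documented cv2.THRESH_BINARY rule (a pixel becomes maxValue only if it is strictly greater than the threshold). The function name global_threshold and the list-of-lists image are illustrative assumptions, not OpenCV code.

```python
def global_threshold(img, thresh, max_value=255, invert=False):
    """Toy model of a global binary threshold (illustrative, not OpenCV code).

    Every pixel is compared against the same value `thresh`:
    - invert=False mimics cv2.THRESH_BINARY: pixel > thresh -> max_value, else 0
    - invert=True mimics cv2.THRESH_BINARY_INV: the two outcomes are swapped
    """
    return [
        [max_value if (pixel > thresh) != invert else 0 for pixel in row]
        for row in img
    ]


# Two flat regions (dark 50, bright 200): one threshold separates them cleanly.
image = [[50, 50, 200, 200],
         [50, 50, 200, 200]]
print(global_threshold(image, 125))               # [[0, 0, 255, 255], [0, 0, 255, 255]]
print(global_threshold(image, 125, invert=True))  # [[255, 255, 0, 0], [255, 255, 0, 0]]
```

Because the same value is used everywhere, whole regions land on one side of the threshold or the other, which is what you want when the blobs are uniformly darker than their surroundings.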

Why won't Adaptive Threshold work?

As mentioned earlier, the threshold for each pixel is calculated from the pixel intensities within a fixed-size neighborhood around it, independently of every other neighborhood in the image.

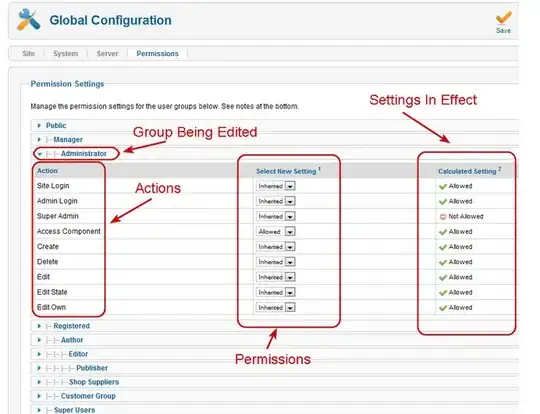

Let's take a sample image below:

Performing adaptive threshold (from your code) with kernel size 5 and constant 3 gives the following:

```python
# arguments: src, maxValue, adaptiveMethod, thresholdType, blockSize=5, C=3
adapt_thresh = cv2.adaptiveThreshold(binary_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                     cv2.THRESH_BINARY_INV, 5, 3)
```

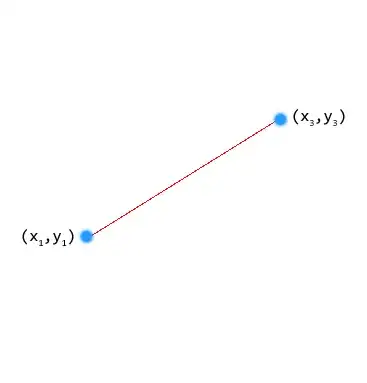

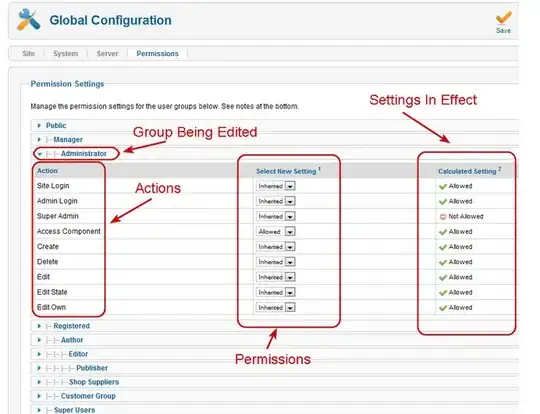

To understand what happens here, have a look at this illustration:

A square kernel (drawn in black) is placed on an image with two shades of gray. The illustration shows three scenarios for three different kernel positions. When the kernel lies entirely inside either shaded region, every pixel within it has the same intensity, so all of them fall on the same side of the threshold and the region comes out as one flat color. In the third scenario, the kernel straddles the edge between the two regions, and the threshold is the Gaussian-weighted mean of the pixels in the kernel minus the constant C. With cv2.THRESH_BINARY, pixels above that threshold are set to white (255) and the rest to black (0); your code uses cv2.THRESH_BINARY_INV, which swaps the two. As a result, only the boundaries between regions show up in the output.

To keep working with adaptive threshold, you need a kernel size comparable to the size of the blobs in the image (in practice somewhat larger, so that the window around every blob pixel also covers some background). The constant C is another parameter you would have to tune.
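The scenarios above can be reproduced with a small pure-Python model. It is a sketch under stated assumptions: it uses a plain mean of the window (what cv2.ADAPTIVE_THRESH_MEAN_C does) instead of the Gaussian weighting in your code, it replicates edge pixels at the image border, and the function adaptive_mean_threshold and the tiny test images are hypothetical. It shows that at an edge only the darker side flips, and that a window no bigger than a blob misses the blob's interior while a larger window recovers the whole blob.

```python
def adaptive_mean_threshold(img, block_size, c, max_value=255, invert=False):
    """Toy mean-based adaptive threshold (illustrative, not OpenCV's code).

    Each pixel gets its own threshold: the mean of the block_size x block_size
    window centered on it, minus c. invert=False mimics cv2.THRESH_BINARY,
    invert=True mimics cv2.THRESH_BINARY_INV.
    """
    if block_size < 3 or block_size % 2 == 0:
        raise ValueError("block_size must be an odd number >= 3")
    rows, cols = len(img), len(img[0])
    half = block_size // 2
    out = []
    for r in range(rows):
        out_row = []
        for col in range(cols):
            # Sum the window, replicating edge pixels where it leaves the image
            # (assumption: a replicate-style border).
            total = 0
            for dr in range(-half, half + 1):
                rr = min(max(r + dr, 0), rows - 1)
                for dc in range(-half, half + 1):
                    cc = min(max(col + dc, 0), cols - 1)
                    total += img[rr][cc]
            local_threshold = total / (block_size * block_size) - c
            above = img[r][col] > local_threshold
            out_row.append(max_value if above != invert else 0)
        out.append(out_row)
    return out


# Scenario 3: kernel straddling the edge between two flat gray regions.
edge = [[50, 50, 50, 200, 200, 200] for _ in range(3)]
print(adaptive_mean_threshold(edge, 3, 3, invert=True)[0])
# [0, 0, 255, 0, 0, 0]  -> flat regions go black, only the edge pixel survives

# A dark 3x3 blob (rows/cols 3..5) on a bright 9x9 background.
blob = [[50 if 3 <= y <= 5 and 3 <= x <= 5 else 200 for x in range(9)]
        for y in range(9)]
small = adaptive_mean_threshold(blob, 3, 3, invert=True)  # window = blob size
large = adaptive_mean_threshold(blob, 7, 3, invert=True)  # window > blob size
print(small[4][3:6])  # [255, 0, 255]   -> the blob's center is lost
print(large[4][3:6])  # [255, 255, 255] -> the whole blob is recovered
```

The same trade-off roughly applies to the Gaussian version in your code: the block size has to be large enough that the window around each blob pixel still includes some background.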

What would work?

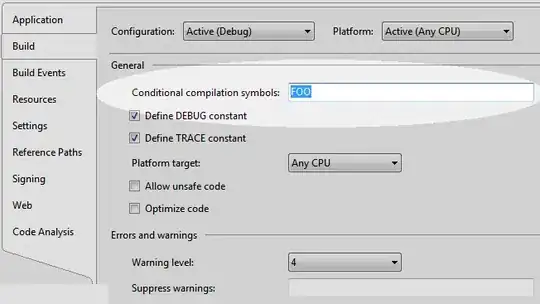

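The following code prepares the input for the global threshold function by blurring the image and extracting the luminance (L) channel of the LAB color space: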

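```python
import cv2

img = cv2.imread('blobs.png')
if img is None:
    raise FileNotFoundError('blobs.png could not be read')

# light blur to suppress noise before thresholding
blur = cv2.GaussianBlur(img, (3, 3), 0)

# convert to LAB color space
lab = cv2.cvtColor(blur, cv2.COLOR_BGR2LAB)

# get luminance channel
l_component = lab[:, :, 0]
```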

The blobs in the above image appear darker than the other regions, so choosing a suitable threshold value lets us segment them:

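```python
# set the threshold level at 125; ret holds the threshold value that was used
# pixels brighter than 125 become 255, darker ones (the blobs) become 0;
# use cv2.THRESH_BINARY_INV instead if you want white blobs on black
ret, thresh = cv2.threshold(l_component, 125, 255, cv2.THRESH_BINARY)
```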

Attempts:

You can also try:

- Changing the kernel size used in the blurring operation

- Using the grayscale image as input instead of the L channel