Let's say I have a data matrix X with num_samples = 1600, dim_data = 2, from which I can build a 1600*1600 similarity matrix S using the rbf kernel. I can normalize each row of the matrix, by multiplying all entries of the row by (1 / sum(entries of the row)). This procedure gives me a (square) right stochastic matrix, which we expect to have an eigenvalue equal to 1 associated to a constant eigenvector full of 1s.

We can easily check that this is indeed an eigenvector by taking its product with the matrix. However, using scipy.linalg.eig the obtained eigenvector associated to eigenvalue 1 is only piecewise constant.

I have tried scipy.linalg.eig on similarly sized matrices with randomly generated data which I transformed into stochastic matrices and consistently obtained a constant eigenvector associated to eigenvalue 1.

My question is then, what factors may cause numerical instabilities when computing eigenvalues of stochastic matrices using scipy.linalg.eig?

Reproducible example:

def kernel(sigma,X):

"""

param sigma: variance

param X: (num_samples,data_dim)

"""

squared_norm = np.expand_dims(np.sum(X**2,axis=1),axis=1) + np.expand_dims(np.sum(X**2,axis=1),axis=0)-2*np.einsum('ni,mi->nm',X,X)

return np.exp(-0.5*squared_norm/sigma**2)

def normalize(array):

degrees = []

M = array.shape[0]

for i in range(M):

norm = sum(array[i,:])

degrees.append(norm)

degrees_matrix = np.diag(np.array(degrees))

P = np.matmul(np.linalg.inv(degrees_matrix),array)

return P

#generate the data

points = np.linspace(0,4*np.pi,1600)

Z = np.zeros((1600,2))

Z[0:800,:] = np.array([2.2*np.cos(points[0:800]),2.2*np.sin(points[0:800])]).T

Z[800:,:] = np.array([4*np.cos(points[0:800]),4*np.sin(points[0:800])]).T

X = np.zeros((1600,2))

X[:,0] = np.where(Z[:,1] >= 0, Z[:,0] + .8 + params[1], Z[:,0] - .8 + params[2])

X[:,1] = Z[:,1] + params[0]

#create the stochastic matrix P

P = normalize(kernel(.05,X))

#inspect the eigenvectors

e,v = scipy.linalg.eig(P)

p = np.flip(np.argsort(e))

e = e[p]

v = v[:,p]

plot_array(v[:,0])

#check on synthetic data:

Y = np.random.normal(size=(1600,2))

P = normalize(kernel(Y))

#inspect the eigenvectors

e,v = scipy.linalg.eig(P)

p = np.flip(np.argsort(e))

e = e[p]

v = v[:,p]

plot_array(v[:,0])

Using the code provided by Ahmed AEK, here are some results on the divergence of the obtained eigenvector from the constant eigenvector.

[-1.36116641e-05 -1.36116641e-05 -1.36116641e-05 ... 5.44472888e-06

5.44472888e-06 5.44472888e-06]

norm = 0.9999999999999999

max difference = 0.04986484253966891

max difference / element value -3663.3906291852545

UPDATE:

I have observed that a low value of sigma in the construction of the kernel matrix produces a less sharp decay in the (sorted) eigenvalues. In fact, for sigma=0.05, the first 4 eigenvalues produced by scipy.linalg.eig are rounded up to 1. This may be linked to the imprecision in the eigenvectors. When sigma is increased to 0.5, I do obtain a constant eigenvector.

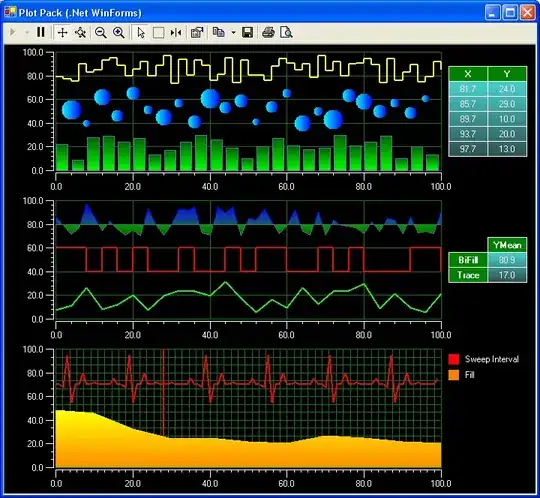

First 5 eigenvectors in the sigma=0.05 case

First 5 eigenvectors in the sigma=0.5 case