I am trying to use Puppeteer to extract the innerHTML of a button on a webpage. For now, I am simply trying to wait for the selector to appear so that I can then work with the element.

When I run the code below, the program times out waiting for the selector.

    const puppeteer = require("puppeteer");

    const link =
      "https://etherscan.io/tx/0xb06c7d09611cb234bfcd8ccf5bcd7f54c062bee9ca5d262cc5d8f3c4c923bd32";

    async function configureBrowser() {
      const browser = await puppeteer.launch();
      const page = await browser.newPage();
      await page.goto(link);
      return page;
    }

    async function findFee(page) {
      await page.reload({ waitUntil: ["networkidle0", "domcontentloaded"] });
      await page.waitForSelector("#txfeebutton");
      console.log("boom");
    }

    const setup = async () => {
      const page = await configureBrowser();
      await findFee(page);
      await browser.close();
    };

    setup();
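
For context, once the selector resolves, the next step I have in mind is roughly the following. This is only a sketch of the intended goal, not something I have been able to run successfully: `readInnerHtml` and `timeoutMs` are names made up for illustration, and it assumes `page` is the Puppeteer page returned by `configureBrowser()`.

```javascript
// Sketch only: `readInnerHtml` and `timeoutMs` are hypothetical names,
// not part of the original code. `page` is assumed to be a Puppeteer Page
// (or any object exposing waitForSelector and $eval with the same shape).
async function readInnerHtml(page, selector, timeoutMs = 10000) {
  // Rejects if the selector does not appear within timeoutMs milliseconds.
  await page.waitForSelector(selector, { timeout: timeoutMs });
  // Runs the callback in the page context on the first matching element.
  return page.$eval(selector, (el) => el.innerHTML);
}
```

Inside `findFee`, this would be called as `const fee = await readInnerHtml(page, "#txfeebutton");`. Passing an explicit timeout just makes the failure show up sooner while debugging.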

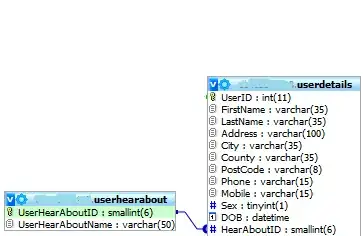

As you can see below, the element definitely exists in the HTML:

Console output: