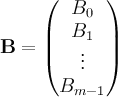

I'm trying to write a python pipeline in Databricks to take CDC data from a postgres, dumped by DMS into s3 as parquet files and ingest it. The file names are numerically ascending unique ids based on datatime (ie 20220630-215325970.csv). Right now autoloader seems to fetch all files at the source in random order. This means that updates to rows in DB may not happen in the correct order.

Here is a screenshot with an example. Update, 1, 2, and 3 were entered sequentially after all other displayed records but they appear in the df in the order below (column 4).

I've tried using the latestFirst option to see if I can get the files processed in a predictable order but that option doesn't seem to have any effect.

Here is my code:

dfp = (spark

.readStream

.format("cloudFiles")

.option("cloudfiles.format","parquet")

.option("latestFirst", 'false') # this doesn't do anything

.schema(schema)

.load(filePath))

display(dfp)

Is there a way to load and write files in order by filename or date using autoloader?