I'm writing some code to generate a unique ID for each event that arrives in a given version. An ID can repeat in a future version, because the version prefix will change. I have the version information, but I'm struggling to generate the uid. I came across some code here that seems to produce what I need and have tried to adapt it to my use case, but I'm facing an issue.

I have the information I need in a DataFrame, but when I run the code it returns the same value for every row. I suspect the issue stems from how I am using the used set from the example: it isn't being persisted between calls, which would explain why the same value comes back each time.

Can anyone give me a hint on where to look? I can't work out how to persist the information so that the value changes for each row. Side note: I can't use Pandas, so its UDF functionality isn't available to me, and the uuid module is no good because the requirement is to keep the ID short enough for a human to type easily when searching. I've posted the code below.

    import itertools
    import string

    from pyspark.sql.functions import udf
    from pyspark.sql.types import StringType

    @udf(returnType=StringType())
    def uid_generator(id_column):
        valid_chars = set(string.ascii_lowercase + string.digits) - set('lio01')
        used = set()
        unique_id_generator = itertools.combinations(valid_chars, 6)
        uid = "".join(next(unique_id_generator)).upper()
        while uid in used:
            uid = "".join(next(unique_id_generator))
        return uid
        used.add(uid)

    #uuid_udf = udf(uuid_generator,)

    df2 = df_uid_register_input.withColumn('uid', uid_generator(df_uid_register_input.record))
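
To isolate what I suspect is happening, here is a minimal plain-Python sketch with no Spark involved. The function name uid_generator_sketch and the sample inputs are made up for illustration, and I've sorted the alphabet so the order is stable between runs. It is only meant to show the symptom under the assumption that the set and the generator are rebuilt on every call; it is not a fix.

```python
import itertools
import string

# Same alphabet as in my UDF; sorted so the iteration order is stable.
VALID_CHARS = sorted(set(string.ascii_lowercase + string.digits) - set("lio01"))

def uid_generator_sketch(_record):
    # Hypothetical stand-in for the UDF body. Both objects below are
    # created fresh on every call, so nothing carries over between rows.
    used = set()
    candidates = itertools.combinations(VALID_CHARS, 6)
    uid = "".join(next(candidates)).upper()
    while uid in used:  # never true here, because `used` always starts empty
        uid = "".join(next(candidates)).upper()
    used.add(uid)
    return uid

# Three different inputs, one call each, mimicking three rows.
results = [uid_generator_sketch(r) for r in ["a", "b", "c"]]
print(results)
```

Every call returns the first combination from a brand-new generator, which matches the behaviour I see in the DataFrame.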

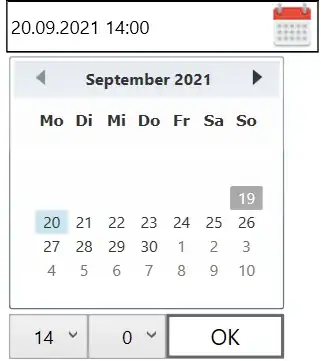

The output is: