Does an Azure VM throttle SignalR messages being sent? Running locally, the client receives every message, but when hosted on the VM, clients receive only about 30% of them.

This question is about the Microsoft.AspNet.SignalR NuGet package for SignalR in a .NET Core 3.1 API back end, with a Vue.js SPA front end, all hosted on an Azure Windows Server 2016 VM using IIS 10.

On my local machine, SignalR works perfectly. Messages get sent/received all the time, instantaneously. Then I publish to the VM, and when (IF) the WebSocket connection is successful, the client can only receive messages for the first 5 or so seconds at most, then stops receiving messages.

I've set up a dummy page that sends a message to my API, which then sends that message back down to all connections. It's a simple input form and "Send" button. After the first few seconds of submitting and receiving messages, I need to rapidly submit the form (even hold down the Enter key) to send what should be a constant stream of messages back, until, lo and behold, several seconds later messages begin to be received again, but only for a few seconds.

I've actually held down the submit button for a constant stream and timed how long it takes to start getting messages, and then how long it takes to stop receiving them. My small sample shows ~30 messages received, then the next ~70 skipped (not received), then another ~30 received. The pattern persists: no messages for several seconds, then ~30 messages over a few seconds.

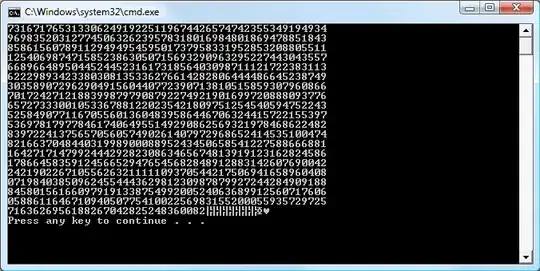

Production Environment Continuously Sending 1000 messages:

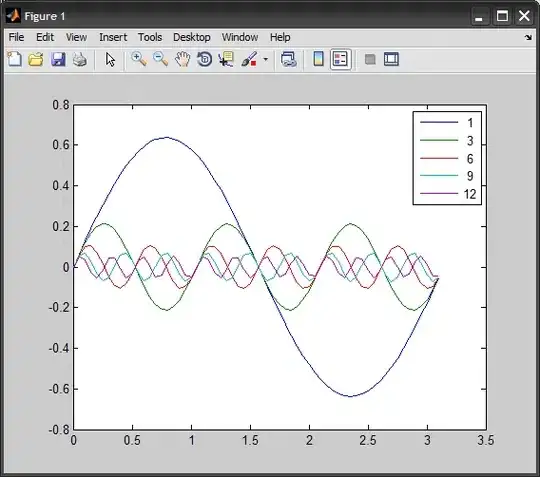

Same Test in Local Environment Sending 1000 messages:

If I stop the test, no matter how long I wait, when I hold the Enter key (repeated submit), it takes a few seconds to get back into the 3 second/2 second pattern. It's almost as if I need to keep pressuring the server to send the message back to the client, otherwise the server gets lazy and doesn't do any work at all. If I submit messages slowly, it's rare that the client receives any at all. I really need to send messages persistently and quickly in order to start receiving them again.
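To put numbers on the receive/skip pattern described above, each test message could carry a sequence number, and the client could record which numbers actually arrive. The following is only a minimal sketch under that assumption: `summarizeRuns`, `totalSent`, and `receivedSeqs` are hypothetical names for illustration and are not part of the SignalR API, and the sample data is made up.

```javascript
// Summarize which sequence numbers arrived as alternating runs of
// "received" and "missed". `totalSent` and `receivedSeqs` are hypothetical
// inputs collected by the test page, not part of the SignalR API.
function summarizeRuns(totalSent, receivedSeqs) {
  const received = new Set(receivedSeqs);
  const runs = [];
  for (let seq = 0; seq < totalSent; seq++) {
    const got = received.has(seq);
    const last = runs[runs.length - 1];
    if (last && last.received === got) {
      // Same state as the previous message: extend the current run.
      last.count += 1;
    } else {
      // State flipped (or first message): start a new run.
      runs.push({ received: got, firstSeq: seq, count: 1 });
    }
  }
  return runs;
}

// Example with made-up data: 10 messages sent, 5 of them arrived.
const runs = summarizeRuns(10, [0, 1, 2, 6, 7]);
console.log(runs);
```

With real data from the production test, the run lengths would show directly whether the ~30 received / ~70 missed cycle is stable or drifts over time.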

FYI, during the time that I am holding down submit, or rapidly submitting, I receive no errors for API calls (initiating messages) and no errors for Socket Connection or receiving messages. All the while, when client side SignalR log level is set to Trace, I see ping requests being sent and received successfully every 10 seconds.

Here is the Socket Config in .NET:

    services.AddSignalR()
        .AddHubOptions<StreamHub>(hubOptions =>
        {
            hubOptions.EnableDetailedErrors = true;
            hubOptions.ClientTimeoutInterval = TimeSpan.FromHours(24);
            hubOptions.HandshakeTimeout = TimeSpan.FromHours(24);
            hubOptions.KeepAliveInterval = TimeSpan.FromSeconds(15);
            hubOptions.MaximumReceiveMessageSize = 1000000;
        })
        .AddJsonProtocol(options =>
        {
            options.PayloadSerializerOptions.PropertyNamingPolicy = null;
        });

    // Adding Authentication
    services.AddAuthentication(options =>
    {
        options.DefaultAuthenticateScheme = JwtBearerDefaults.AuthenticationScheme;
        options.DefaultChallengeScheme = JwtBearerDefaults.AuthenticationScheme;
        options.DefaultScheme = JwtBearerDefaults.AuthenticationScheme;
    })
    // Adding Jwt Bearer
    .AddJwtBearer(options =>
    {
        options.SaveToken = true;
        options.RequireHttpsMetadata = false;
        options.TokenValidationParameters = new TokenValidationParameters()
        {
            ValidateIssuer = true,
            ValidateAudience = true,
            ValidAudience = Configuration["JWT:ValidAudience"],
            ValidIssuer = Configuration["JWT:ValidIssuer"],
            IssuerSigningKey = new SymmetricSecurityKey(Encoding.UTF8.GetBytes(Configuration["JWT:Secret"])),
            ClockSkew = TimeSpan.Zero
        };

        // Sending the access token in the query string is required due to
        // a limitation in Browser APIs. We restrict it to only calls to the
        // SignalR hub in this code.
        // See https://learn.microsoft.com/aspnet/core/signalr/security#access-token-logging
        // for more information about security considerations when using
        // the query string to transmit the access token.
        options.Events = new JwtBearerEvents
        {
            OnMessageReceived = context =>
            {
                var accessToken = context.Request.Query["access_token"];

                // If the request is for our hub...
                var path = context.HttpContext.Request.Path;
                if (!string.IsNullOrEmpty(accessToken) && (path.StartsWithSegments("/v1/stream")))
                {
                    // Read the token out of the query string
                    context.Token = accessToken;
                }
                return Task.CompletedTask;
            }
        };
    });

I use this endpoint to send back messages:

    [HttpPost]
    [Route("bitcoin")]
    public async Task<IActionResult> SendBitcoin([FromBody] BitCoin bitcoin)
    {
        await this._hubContext.Clients.All.SendAsync("BitCoin", bitcoin.message);
        return Ok(bitcoin.message);
    }

Here is the Socket Connection in JS and the button click to call message API:

    this.connection = new signalR.HubConnectionBuilder()
        .configureLogging(process.env.NODE_ENV.toLowerCase() == 'development' ? signalR.LogLevel.None : signalR.LogLevel.None)
        .withUrl(process.env.VUE_APP_STREAM_ROOT, { accessTokenFactory: () => this.$store.state.auth.token })
        .withAutomaticReconnect({
            nextRetryDelayInMilliseconds: retryContext => {
                if(retryContext.retryReason && retryContext.retryReason.statusCode == 401) {
                    return null
                }
                else if (retryContext.elapsedMilliseconds < 3600000) {
                    // If we've been reconnecting for less than 60 minutes so far,
                    // wait between 0 and 10 seconds before the next reconnect attempt.
                    return Math.random() * 10000;
                } else {
                    // If we've been reconnecting for more than 60 minutes so far, stop reconnecting.
                    return null;
                }
            }
        })
        .build()

    // connection timeout of 10 minutes
    this.connection.serverTimeoutInMilliseconds = 1000 * 60 * 10

    this.connection.reconnectedCallbacks.push(() => {
        let alert = {
            show: true,
            text: 'Data connection re-established!',
            variant: 'success',
            isConnected: true,
        }
        this.$store.commit(CONNECTION_ALERT, alert)
        setTimeout(() => {
            this.$_closeConnectionAlert()
        }, this.$_appMessageTimeout)
        // this.joinStreamGroup('event-'+this.event.eventId)
    })

    this.connection.onreconnecting((err) => {
        if(!!err) {
            console.log('reconnecting:')
            this.startStream()
        }
    })

    this.connection.start()
        .then((response) => {
            this.startStream()
        })
        .catch((err) => {
        });

    startStream() {
        // ---------
        // Call client methods from hub
        // ---------
        if(this.connection.connectionState.toLowerCase() == 'connected') {
            this.connection.methods.bitcoin = []
            this.connection.on("BitCoin", (data) => {
                console.log('messageReceived:', data)
            })
        }
    }

    buttonClick() {
        this.$_apiCall({url: 'bitcoin', method: 'POST', data: {message:this.message}})
            .then(response => {
                // console.log('message', response.data)
            })
    }

For the case when the Socket Connection fails:

On page refresh, the WebSocket connection sometimes fails. There are multiple, almost identical calls to the socket endpoint, where one returns a 404 and another returns a 200 result.

Failed Request

This is the request that failed. The only difference from the request that succeeded (below) is the content-length in the Response Headers (highlighted). The Request Headers are identical:

Successful request to Socket Endpoint

Identical Request Headers

What could be so different about the configuration on my local machine vs. the configuration on my Azure VM? Why would the client stop and start receiving messages like this? Might it be the configuration on my VM? Are the messages getting blocked somehow? I've exhausted myself trying to figure this out!!

Update: KeepAlive messages are being sent correctly, but the continuous stream of messages sent (expecting received) only works periodically.

Here we see that the KeepAlive messages are being sent and received every 15 seconds, as expected.
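The contrast between steady KeepAlive traffic and intermittent application messages can be made concrete by comparing the largest gap between arrivals for each kind of message. This is only a rough sketch: `maxGapMs` is a hypothetical helper, the timestamps below are made-up values in milliseconds, and how they are collected (for example, recording `Date.now()` whenever a message or ping is logged) is an assumption.

```javascript
// Return the largest gap, in milliseconds, between consecutive arrival
// timestamps. `timestamps` is assumed to be sorted in ascending order.
function maxGapMs(timestamps) {
  let max = 0;
  for (let i = 1; i < timestamps.length; i++) {
    max = Math.max(max, timestamps[i] - timestamps[i - 1]);
  }
  return max;
}

// Made-up sample data: pings arriving every 15 s, and application
// messages that stall for several seconds in the middle of a burst.
const pingTimes = [0, 15000, 30000, 45000];
const messageTimes = [0, 100, 200, 7200, 7300];

console.log(maxGapMs(pingTimes));    // 15000
console.log(maxGapMs(messageTimes)); // 7000
```

If the ping gap stays near the configured interval while the message gap grows to several seconds during a continuous send, that would suggest the connection itself stays open while individual messages fail to arrive.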