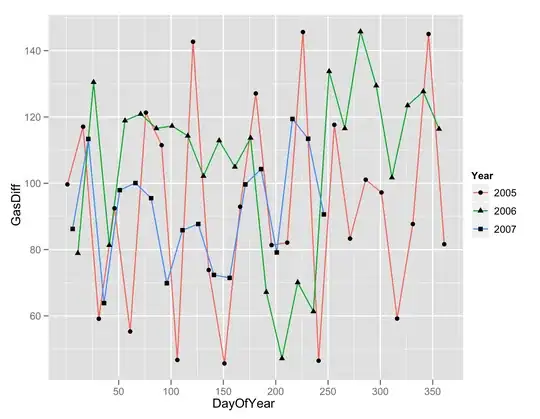

My goal is to compute a similarity percentage between two images.

The catch is that my definition of similarity is somewhat unusual in this case.

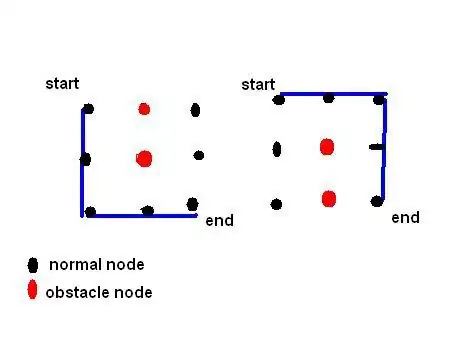

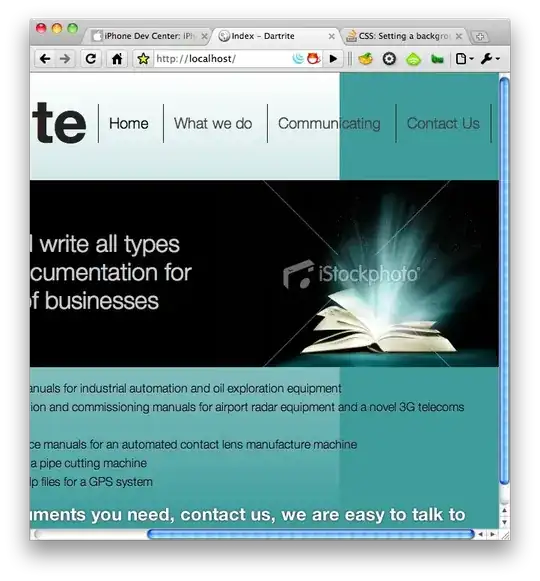

Here are some examples of what I want to achieve:

Image A

is similar to

Image A bis

HOWEVER,

Image B

is not similar to Image A (or A bis) but is similar to

Image B bis

I have already tried the methods described in "Checking images for similarity with OpenCV", but they didn't work in my case. In fact, a plain black background scored as more similar to Image A than Image A bis did.

PS: I also tried with colored images, but the results were the same:

Image A colored

Image A bis colored

Image B colored

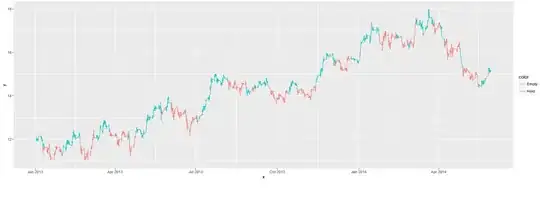

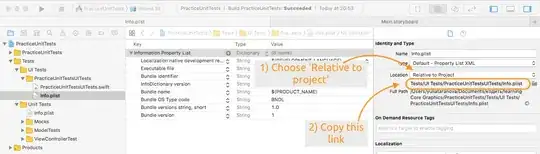

I did more research, and someone told me that I could achieve what I want using an FFT (fast Fourier transform) with OpenCV (which is what I use).

When applying the FFT, this is what I get:

Image A FFT

Image A bis FFT

Image B FFT

Image B bis FFT

This leads me to these questions: is FFT really the way to go? If yes, what can I do with my magnitude spectra? If not, is there another approach that could solve my problem?

PS: I would rather not use ML or deep learning if possible.
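For context, here is a minimal sketch of how such a magnitude spectrum can be computed. It uses NumPy's FFT rather than OpenCV, `magnitude_spectrum` is an illustrative name, and the input is assumed to be a single-channel array. It also shows one property that matters here: the magnitude does not change when the shape is moved (circularly shifted) within the image, so it ignores position, although it does still depend on scale.

```python
import numpy as np

def magnitude_spectrum(gray):
    """Return the centered log-magnitude spectrum of a 2D grayscale image."""
    f = np.fft.fft2(gray.astype(np.float64))
    f = np.fft.fftshift(f)        # move the zero-frequency term to the center
    return np.log1p(np.abs(f))    # log scale so the peaks do not dominate

# Toy image: a white rectangle on a black background.
img = np.zeros((64, 64), dtype=np.uint8)
img[10:20, 5:25] = 255

# Same rectangle moved elsewhere (a circular shift; nothing wraps around here).
shifted = np.roll(img, shift=(30, 20), axis=(0, 1))

spec_a = magnitude_spectrum(img)
spec_b = magnitude_spectrum(shifted)

# The two spectra should agree up to floating-point error.
print(np.allclose(spec_a, spec_b))
```

Because the shift only changes the phase of the transform, comparing magnitudes alone would handle the "top versus bottom" case, but not the size difference.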

Thanks! (and sorry for the quantity of images)

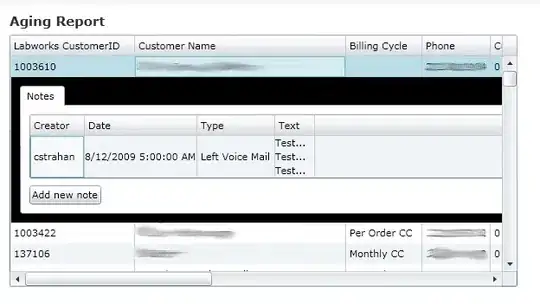

EDIT 1 :

> your metric could be number of overlapping pixels divided with the logical and of the two images

Why I haven't done this so far: sometimes the form you see in the examples is at the top of the image, whereas the form in my example is at the bottom. Moreover, one form could be much smaller than the one in the example even though they are still the same.
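To make the objection concrete, here is a minimal sketch of that overlap metric in its usual intersection-over-union (IoU) form, that is, the intersection divided by the logical OR of the two masks (this reading of the comment is an assumption). The masks are synthetic and `iou` is an illustrative helper, not an OpenCV function.

```python
import numpy as np

def iou(mask_a, mask_b):
    """Intersection over union of two same-sized binary masks."""
    a = mask_a.astype(bool)
    b = mask_b.astype(bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as identical (a convention)
    return np.logical_and(a, b).sum() / union

# The same rectangle, once near the top and once near the bottom.
top = np.zeros((100, 100), dtype=bool)
top[10:30, 20:60] = True
bottom = np.zeros((100, 100), dtype=bool)
bottom[70:90, 20:60] = True

print(iou(top, top))     # identical masks
print(iou(top, bottom))  # same shape, different position: no overlap at all
```

The second score is zero even though the shapes are identical, which is exactly the situation described above.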

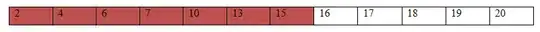

EDIT 2 :

I am looking for local similarity. The size doesn't matter as long as the form has the same shape as the example. It could be much bigger or smaller, located at the top or at the bottom... It's all about the shape of the form. However, the form must have the same direction and rotation.

For instance, here are two images that must be classified as Image A:

EDIT 3 :

The pictures you see are 30 stacked frames of a hand motion. That's why you see two blobs in Images A*: the hand swipes from left to right, and the AI doesn't detect the hand in the center. Because the swipe isn't perfect, the blobs are not at the same height every time. Moreover, if the hand is closer to the camera you get Image A; if it is further away you get Image A bis bis from the EDIT 2 section.

EDIT 4 :

The problem with IoU, which I tried following @Christoph Rackwitz's answer, is that it doesn't work for Image A versus Image A smaller (see the EDIT 2 images).
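One variation that might address this, sketched here under assumptions rather than tested on the real images: crop each mask to the bounding box of its foreground and resize the crop to a fixed size before computing IoU. That removes position and scale but keeps orientation. All function names are illustrative, the inputs are assumed to be clean binary masks, and the resize is a simple nearest-neighbour lookup written in NumPy.

```python
import numpy as np

def crop_to_foreground(mask):
    """Crop a binary mask to the bounding box of its foreground pixels."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("mask has no foreground pixels")
    return mask[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def resize_nearest(mask, size):
    """Nearest-neighbour resize of a 2D array to (size, size)."""
    h, w = mask.shape
    r = np.arange(size) * h // size   # source row for each output row
    c = np.arange(size) * w // size   # source column for each output column
    return mask[np.ix_(r, c)]

def normalized_iou(mask_a, mask_b, size=64):
    """IoU after removing position and scale (orientation is kept)."""
    a = resize_nearest(crop_to_foreground(mask_a.astype(bool)), size)
    b = resize_nearest(crop_to_foreground(mask_b.astype(bool)), size)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0  # nothing survived the resize; treat as no match
    return np.logical_and(a, b).sum() / union

def l_shape(shape, top, left, side, thick, mirrored=False):
    """Draw an L (or its horizontal mirror) as a boolean mask."""
    m = np.zeros(shape, dtype=bool)
    m[top + side - thick:top + side, left:left + side] = True   # bottom bar
    col = left + side - thick if mirrored else left
    m[top:top + side, col:col + thick] = True                   # vertical bar
    return m

big = l_shape((200, 200), top=20, left=20, side=80, thick=20)
small = l_shape((200, 200), top=150, left=100, side=40, thick=10)
flipped = l_shape((200, 200), top=20, left=20, side=80, thick=20, mirrored=True)

print(normalized_iou(big, small))    # same shape, different size and position
print(normalized_iou(big, flipped))  # mirrored shape scores lower
```

Two caveats: stretching every bounding box to a square discards the aspect ratio, and a few stray foreground pixels far from the form would enlarge the bounding box and distort the result, so the masks would likely need cleaning first.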