What is the best approach to this regression problem in terms of both performance and accuracy? Would feature importance be helpful in this scenario? And how should I process such a large range of data?

Please note that I am not an expert in any of this, so some of my information, or my theories about why certain methods don't work, may be wrong.

The Data: Each item has an id and various attributes. Most items share the same attributes, but a few special items have item-specific attributes. An example looks something like this:

```python
item = {
    "item_id": "AMETHYST_SWORD",
    "tier_upgrades": 1,     # (0-1)
    "damage_upgrades": 15,  # (0-15)
    # ...
    "stat_upgrades": 5,     # (0-5)
}
```

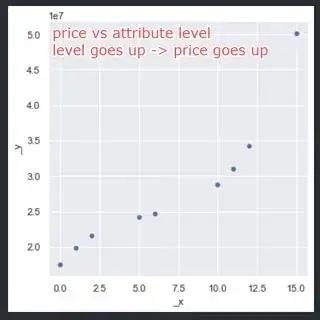

The value of an item increases with every attribute: if the level of an attribute goes up, so does the value, and vice versa. However, the relationship is not strictly linear; an upgrade at level 1 is not necessarily worth half as much as an upgrade at level 2, because the value added by each level increase is different. The value of each upgrade also differs between items, as does the price of an item with no upgrades. Every attribute is capped at some integer maximum, but the cap differs between attributes.
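One common way to give a linear model a separate value for each level step is thermometer (cumulative) encoding: each capped attribute is split into 0/1 columns meaning "level >= k". Below is a minimal sketch with pandas, assuming the caps from the example item above; `thermometer_encode` is a hypothetical helper name, not part of any library.

```python
import pandas as pd

def thermometer_encode(df, caps):
    """Replace each capped integer attribute with cumulative 0/1 columns.

    For an attribute with cap N, column "<attr>_ge_k" (k = 1..N) is 1 when
    the level is at least k, so a linear model gets one coefficient per level
    step and each step can add a different amount to the price.
    """
    out = {}
    for attr, cap in caps.items():
        for k in range(1, cap + 1):
            out[f"{attr}_ge_{k}"] = (df[attr] >= k).astype(int)
    return pd.DataFrame(out, index=df.index)

# Caps from the example item (assumption: they match the real data)
caps = {"tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5}
rows = pd.DataFrame({
    "tier_upgrades": [0, 1],
    "damage_upgrades": [2, 15],
    "stat_upgrades": [0, 5],
})
encoded = thermometer_encode(rows, caps)
print(encoded.shape)  # (2, 21)
```

With this encoding, the coefficient on `damage_upgrades_ge_k` is the price added by going from level k-1 to level k, so nothing forces the level 1 step to equal the level 2 step.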

Items with high levels in one upgrade also tend to have high levels in the others, which is why the price curve gets steeper from upgrade level 10 upward.
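To check how strongly the upgrade levels move together, a rank correlation matrix over the upgrade columns is a quick first look; strongly correlated upgrades are also harder for any model to tell apart. A minimal sketch, assuming the attribute names from the example item; the rows are made up and should be replaced with the collected data.

```python
import pandas as pd

# Made-up rows for illustration only; use the collected data instead.
df = pd.DataFrame({
    "tier_upgrades":   [0, 0, 1, 1, 1],
    "damage_upgrades": [1, 5, 10, 14, 15],
    "stat_upgrades":   [0, 1, 3, 4, 5],
})

# Spearman rank correlation suits ordinal, capped integer levels
corr = df.corr(method="spearman")
print(corr.round(2))
```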

Collected Data: I've collected price data for these items across many different combinations of upgrades. Not every combination will ever be observed, which is why I need some form of prediction.
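To see how sparse the data is, the number of distinct upgrade combinations observed can be compared with the number possible given the caps. A minimal sketch, assuming the caps from the example item (0-1, 0-15, 0-5) and made-up rows; `CAPS` is a hypothetical name.

```python
import math
import pandas as pd

CAPS = {"tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5}  # assumed caps

# Made-up rows for illustration only; the first two are the same combination
df = pd.DataFrame({
    "tier_upgrades":   [1, 1, 0, 0],
    "damage_upgrades": [15, 15, 0, 8],
    "stat_upgrades":   [5, 5, 0, 2],
})

possible = math.prod(cap + 1 for cap in CAPS.values())  # 2 * 16 * 6 combinations
observed = len(df[list(CAPS)].drop_duplicates())
print(f"{observed} of {possible} combinations observed ({observed / possible:.1%})")
```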

As for the economy and pricing: high-tier items with a low drop chance that cannot be bought outright from a shop are priced purely by supply and demand. Middle-tier items that have a fixed cost to unlock or buy, on the other hand, usually settle at a price slightly above that cost.

Some upgrades are binary (either 0 or 1). As shown below, almost all points where tier_upgrades == 0 overlap with the bottom half of the points where tier_upgrades == 1, which I think may cause problems for any kind of regression.
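A quick way to quantify the overlap for a binary upgrade is to compare summary statistics of the price for each of its values. A minimal sketch, assuming a DataFrame with the tier_upgrades and adj_price columns used in the code in this post; the rows are made up.

```python
import pandas as pd

# Made-up rows for illustration only.
df = pd.DataFrame({
    "tier_upgrades": [0, 0, 0, 1, 1, 1],
    "adj_price": [1_000_000, 1_200_000, 1_400_000, 1_100_000, 1_500_000, 1_800_000],
})

# Price distribution per tier level: count, quartiles, min/max
summary = df.groupby("tier_upgrades")["adj_price"].describe()
print(summary[["count", "min", "25%", "50%", "75%", "max"]])
```

If the interquartile ranges overlap heavily, tier_upgrades on its own explains little of the price difference; its effect may only become visible once the other upgrades are held fixed.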

Attempts made so far: I've tried linear regression, k-nearest neighbors (KNN) search, and a custom algorithm of my own (more on that below).

Regression: It works, but with high error. Due to the nature of my data, many of the features are binary (0 or 1) and/or overlap heavily. As I understand it, this adds a lot of noise to the model and reduces its accuracy. I'm also unsure how well it would scale to multiple items, since each item is valued independently of the others. Aside from that, regression should work in theory, because each attribute affects the value of an item in a consistent, roughly linear way.

```python
import numpy as np
from sklearn import linear_model
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split

# Features: every column except the id and the target price
x = df.drop(columns=["id", "adj_price"])
y = df["adj_price"]

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.25, random_state=69)

regr = linear_model.LinearRegression()
# Fit on the training split only; fitting on all of x would leak the test rows
regr.fit(x_train, y_train)

y_pred = regr.predict(x_test)
rmse = np.sqrt(mean_squared_error(y_test, y_pred))
mae = mean_absolute_error(y_test, y_pred)
print(f"RMSE: {rmse} MAE: {mae}")
```
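Two adjustments that may help, sketched here under stated assumptions: fit one model per item_id (since each item is valued independently), and use cumulative "level >= k" columns so each level step can add a different amount. `LinearRegression(positive=True)` (scikit-learn 0.24 or later) keeps every step's contribution non-negative, matching the observation that value never drops as a level rises. The caps, the helper names `encode` and `fit_per_item`, and the synthetic noise-free data are assumptions for illustration only.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

CAPS = {"tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5}  # assumed caps

def encode(df):
    # Cumulative indicators: column "<attr>_ge_k" is 1 when level >= k
    return pd.DataFrame(
        {f"{a}_ge_{k}": (df[a] >= k).astype(int)
         for a, cap in CAPS.items() for k in range(1, cap + 1)},
        index=df.index,
    )

def fit_per_item(df):
    # One linear model per item_id, with non-negative per-step coefficients
    models = {}
    for item_id, group in df.groupby("item_id"):
        models[item_id] = LinearRegression(positive=True).fit(encode(group), group["adj_price"])
    return models

# Synthetic, noise-free data (made up): fixed base price plus fixed per-level amounts
rng = np.random.default_rng(0)
n = 500
df = pd.DataFrame({
    "item_id": "AMETHYST_SWORD",
    "tier_upgrades": rng.integers(0, 2, n),
    "damage_upgrades": rng.integers(0, 16, n),
    "stat_upgrades": rng.integers(0, 6, n),
})
df["adj_price"] = (1_000_000 + 100_000 * df["tier_upgrades"]
                   + 20_000 * df["damage_upgrades"] + 50_000 * df["stat_upgrades"])

models = fit_per_item(df)
query = pd.DataFrame([{"tier_upgrades": 1, "damage_upgrades": 10, "stat_upgrades": 3}])
pred = models["AMETHYST_SWORD"].predict(encode(query))[0]
# Expected to be close to 1,000,000 + 100,000 + 10 * 20,000 + 3 * 50,000 = 1,450,000
print(round(pred))
```

On real, noisy data the per-step coefficients will not be this clean, and levels that never appear for an item cannot be estimated for that item.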

K-Nearest Neighbors: This also works, but not consistently. Sometimes I don't have enough data for one item, which forces the search to pick a very different item and throws the value off completely. It is also slow to produce a result. This example is written in JS using the nearest-neighbor package. Note: the price is not part of the item object itself; I add it when I collect data, since it is the price that was paid for the item. The price is only used to read off the value after a match is found; it is not part of the KNN search, which is why it is not in fields.

```javascript
const nn = require("nearest-neighbor");

// Collected data; price is stored with each item but is not a search field
const items = [
  { item_id: "AMETHYST_SWORD", tier_upgrades: 1, damage_upgrades: 15, stat_upgrades: 5, price: 1800000 },
  { item_id: "AMETHYST_SWORD", tier_upgrades: 0, damage_upgrades: 0, stat_upgrades: 0, price: 1000000 },
  { item_id: "AMETHYST_SWORD", tier_upgrades: 0, damage_upgrades: 8, stat_upgrades: 2, price: 1400000 },
];

// Item to value
const query = {
  item_id: "AMETHYST_SWORD",
  tier_upgrades: 1,
  damage_upgrades: 10,
  stat_upgrades: 3,
};

// Fields compared by the similarity search (price deliberately excluded)
const fields = [
  { name: "item_id", measure: nn.comparisonMethods.word },
  { name: "tier_upgrades", measure: nn.comparisonMethods.number },
  { name: "damage_upgrades", measure: nn.comparisonMethods.number },
  { name: "stat_upgrades", measure: nn.comparisonMethods.number },
];

nn.findMostSimilar(query, items, fields, function (nearestNeighbor, probability) {
  console.log(query);
  console.log(nearestNeighbor);
  console.log(probability);
});
```
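For comparison, here is a minimal sketch of the same idea in Python using scikit-learn's `KNeighborsRegressor`, a different library swapped in for the JS package above. It only searches rows with the same item_id, so it can never fall back to a different item, and it divides each upgrade by its cap so every feature is on a 0 to 1 scale. The caps, `k`, the helper name `knn_price`, and the choice of distance weighting are assumptions; the rows are the ones from the JS example.

```python
import pandas as pd
from sklearn.neighbors import KNeighborsRegressor

CAPS = {"tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5}  # assumed caps
FEATURES = list(CAPS)

def knn_price(df, query, k=2):
    # Only compare against rows of the same item, never a different item
    same_item = df[df["item_id"] == query["item_id"]]
    if same_item.empty:
        raise ValueError(f"no data for {query['item_id']}")
    caps = pd.Series(CAPS)
    X = same_item[FEATURES] / caps  # scale every upgrade to [0, 1]
    model = KNeighborsRegressor(n_neighbors=min(k, len(same_item)), weights="distance")
    model.fit(X, same_item["price"])
    q = pd.DataFrame([{f: query[f] for f in FEATURES}]) / caps
    return model.predict(q)[0]

df = pd.DataFrame([
    {"item_id": "AMETHYST_SWORD", "tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5, "price": 1_800_000},
    {"item_id": "AMETHYST_SWORD", "tier_upgrades": 0, "damage_upgrades": 0, "stat_upgrades": 0, "price": 1_000_000},
    {"item_id": "AMETHYST_SWORD", "tier_upgrades": 0, "damage_upgrades": 8, "stat_upgrades": 2, "price": 1_400_000},
])
query = {"item_id": "AMETHYST_SWORD", "tier_upgrades": 1, "damage_upgrades": 10, "stat_upgrades": 3}
pred = knn_price(df, query)
print(round(pred))
```

Refitting per query keeps the sketch short; if many items are valued, fitting one model per item_id once and reusing it avoids rebuilding the neighbor index every time.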

Averaged distributions: Below is a box chart showing the distribution of prices for each level of damage_upgrades. For each attribute, this algorithm takes the average price of all rows where that attribute equals item[attribute], then averages those per-attribute means. It is relatively fast, much faster than KNN. However, the spread within a given distribution is often too large, which increases the error. The error also grows when the sets are not roughly equal in size. The main problem, though, is that each set is grouped by a single attribute, so items that match on that attribute but differ a lot on the others (and therefore in value) land in the same set and skew the average. An example:

```python
low_value = {
    "item_id": "AMETHYST_SWORD",
    "tier_upgrades": 0,
    "damage_upgrades": 1,
    "stat_upgrades": 0,
    "price": 1_100_000,
}

# May be placed in the same set as a high-value item:
high_value = {
    "item_id": "AMETHYST_SWORD",
    "tier_upgrades": 0,
    "damage_upgrades": 15,
    "stat_upgrades": 5,
    "price": 1_700_000,
}
```

This spread within each set is the main source of inaccuracy in the prediction, because the algorithm does not take any of the other attributes/upgrades into account.

Here is the Python code for this algorithm. df is a regular DataFrame containing the item_id, the price (adj_price), and the attributes.

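```python
import numpy as np

# Upgrade levels of the item to value
features = {
    "tier_upgrades": 1,
    "damage_upgrades": 15,
    "stat_upgrades": 5,
}

# Assumes df holds rows for a single item_id; filter it first otherwise
total = 0
for f, level in features.items():
    # Mean price of all rows where this attribute matches the target level
    avg_price = np.mean(df.loc[df[f] == level, "adj_price"])
    total += avg_price

# Average of the per-attribute means
print("Estimated value:", total / len(features))
```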
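If many items need to be valued, the per-attribute averages can be computed once with `groupby` and then looked up, instead of re-filtering df for every estimate. A minimal sketch of the same averaging algorithm, assuming df holds rows for a single item_id with an adj_price column; `level_means` and `estimate` are hypothetical helper names and the rows are made up. Unlike the loop above, levels with no data are skipped rather than producing NaN.

```python
import pandas as pd

FEATURES = ["tier_upgrades", "damage_upgrades", "stat_upgrades"]

def level_means(df):
    # {attribute: {level: mean adj_price}}, computed once up front
    return {f: df.groupby(f)["adj_price"].mean().to_dict() for f in FEATURES}

def estimate(means, target):
    # Average the per-attribute means for the target's levels, skipping unseen levels
    found = [means[f][lvl] for f, lvl in target.items() if lvl in means[f]]
    return sum(found) / len(found) if found else float("nan")

# Made-up rows for illustration only
df = pd.DataFrame({
    "tier_upgrades":   [0, 0, 1],
    "damage_upgrades": [1, 15, 15],
    "stat_upgrades":   [0, 5, 5],
    "adj_price":       [1_100_000, 1_700_000, 1_800_000],
})
means = level_means(df)
result = estimate(means, {"tier_upgrades": 1, "damage_upgrades": 15, "stat_upgrades": 5})
print(result)
```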

If anyone has any ideas, please let me know!