I'm trying to scrape the HTML of a website using `HttpClient`:

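```csharp
// _httpClient is a static HttpClient field declared elsewhere in the class.
private static async Task GetResponseMessageAsync(string filledInUrl, List<HttpResponseMessage> responseMessages)
{
    Console.WriteLine("Started GetResponseMessageAsync for url " + filledInUrl);

    // Download the page exactly as the server returns it.
    var httpResponseMessage = await _httpClient.GetAsync(filledInUrl);

    // My own helper (not shown) that checks the status code of the response.
    await EnsureSuccessStatusCode(httpResponseMessage);

    responseMessages.Add(httpResponseMessage);

    Console.WriteLine("Finished GetResponseMessageAsync for url " + filledInUrl);
}
```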

The URL I pass in is https://www.rtlnieuws.nl/zoeken?q=philips+fraude

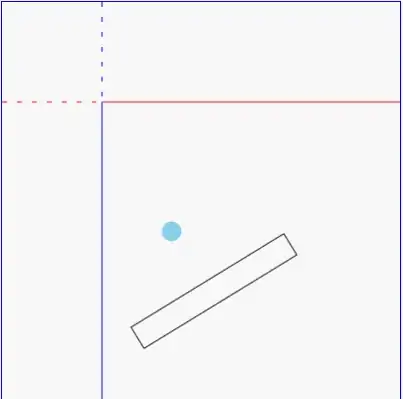

When I right-click > Inspect on that page in the browser, I see normal HTML that I can search through with XPath.

But when I actually print out what my response message contains:

```csharp
var htmlDocument = new HtmlDocument(); // this will collect all the search results for a given keyword into Nodes

var scrapedHtml = await httpResponseMessage.Content.ReadAsStringAsync();

Console.WriteLine(scrapedHtml);
```

I get different HTML. The HTML the server sends and the HTML I see in the browser seem to differ, so my XPaths no longer find anything in the response.
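To narrow down where the two versions diverge, here is a minimal diagnostic sketch, assuming HtmlAgilityPack is referenced. `RawHtmlCheck`, `CountMatches`, `CountScripts` and `RawContains` are hypothetical helper names, not library APIs. It runs an XPath against the raw response string exactly as the server sent it, counts the `<script>` elements, and checks whether a search term appears anywhere in the raw markup.

```csharp
using System;
using HtmlAgilityPack;

// Hypothetical helpers for inspecting the HTML exactly as the server returned it.
public static class RawHtmlCheck
{
    // Number of nodes an XPath matches in the raw HTML.
    // HtmlAgilityPack's SelectNodes returns null (not an empty collection) when nothing matches.
    public static int CountMatches(string html, string xpath)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        var nodes = doc.DocumentNode.SelectNodes(xpath);
        return nodes?.Count ?? 0;
    }

    // Number of <script> elements in the raw HTML.
    public static int CountScripts(string html) => CountMatches(html, "//script");

    // True if the text occurs anywhere in the raw markup, including inside <script> blocks
    // (for example JSON data that JavaScript later turns into visible HTML).
    public static bool RawContains(string html, string text) =>
        html.IndexOf(text, StringComparison.OrdinalIgnoreCase) >= 0;
}
```

For example, if `CountMatches(scrapedHtml, ...)` with one of my XPaths returns 0 while `RawContains(scrapedHtml, "philips")` is true, the results are probably only present as data inside scripts, and the browser builds the visible HTML from them with JavaScript. HtmlAgilityPack only parses the markup it is given; it does not run JavaScript.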

I know my scraper works in general: I used it on another website where the "server-HTML" and the "browser-HTML" were the same, and it worked there.

What can I do to turn the "server-HTML" into the "browser-HTML"? How does this work? Is there something in HtmlAgilityPack I could use? I couldn't find anything online, probably because "server-HTML" and "browser-HTML" aren't the correct terms.

I'd be grateful for any help.