For a deep learning project, I need to synthesize plots for each item in my dataset. This means generating 2.5 million plots, each 224x224 pixels.

So far the best I've been able to do is this, which takes 2.7 seconds to generate 100 plots on my PC:

    from matplotlib.backends.backend_agg import FigureCanvasAgg as FigureCanvas
    import matplotlib.pyplot as plt

    for i in range(100):
        # 4 x 4 inches at dpi=56 gives a 224x224 pixel image
        fig = plt.Figure(frameon=False, facecolor="white", figsize=(4, 4))
        ax = fig.add_subplot(111)
        ax.axis('off')
        ax.plot([1, 2, 3, 4, 5, 6, 7, 8], [2, 4, 6, 8, 8, 6, 4, 3])

        canvas = FigureCanvas(fig)
        canvas.print_figure(str(i), dpi=56)

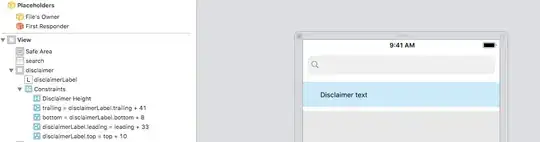

A resulting image (from this reproducible example) looks like this:

The real images use a bit more data (200 rows) but that makes little difference to speed.

At the speed above it will take me around 18 hours to generate all my plots! Are there any clever ways to speed this up?
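
For reference, the time estimate is a straight extrapolation of the 100-plot timing to the full dataset. A quick sketch of the arithmetic, assuming the per-plot cost stays constant at scale (the variable names are just for illustration):

```python
# Extrapolate the 100-plot benchmark to the full dataset.
# Assumption: per-plot cost stays constant (no caching or disk I/O effects).
seconds_per_batch = 2.7    # measured time for the 100-plot loop
plots_per_batch = 100
total_plots = 2_500_000

seconds_per_plot = seconds_per_batch / plots_per_batch
total_hours = total_plots * seconds_per_plot / 3600

print(f"{seconds_per_plot * 1000:.0f} ms per plot, "
      f"roughly {total_hours:.1f} hours in total")
```

So any speed-up needs to bring the per-plot cost well below the current 27 ms or so to make a real difference.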