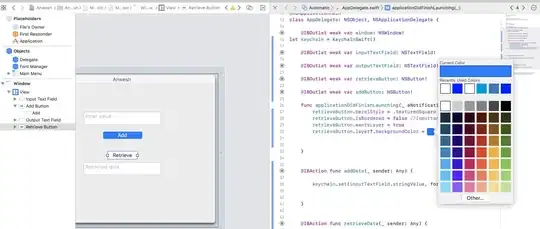

I have a Parquet file on my disk, and I am reading it with Spark. All I want is for the data to be displayed in a readable, understandable form. But because there are so many columns, the columns wrap onto the following lines, so the data looks shuffled and is hard to read. This is how I expect the data to be displayed:

But in my case I am using:

df2=spark.read.parquet("D:\\source\\202204121921-seller_central_opportunity_explorer_niche_search_term_metrics.parquet")

df2.show()

When I use the show() function, the output appears unstructured. I want it laid out in rows and columns so that it is readable.

My Spark version is 2.4.4

IDE: Jupyter Notebook

How can I structure the output so that the data is displayed in a readable form?
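For reference, here is a minimal sketch of two display approaches that might help with a wide DataFrame such as `df2`. The helper names `show_vertical` and `preview` are hypothetical, introduced only for illustration. The sketch assumes Spark 2.3 or later for the `vertical` argument of `show()`, and that pandas is installed for `toPandas()`.

```python
def show_vertical(df, n=5):
    """Print the first n rows with one column per line instead of one wide table.

    Assumes a Spark DataFrame; the `vertical` argument needs Spark 2.3 or later.
    """
    df.show(n=n, truncate=False, vertical=True)


def preview(df, n=20):
    """Return the first n rows as a pandas DataFrame.

    Jupyter renders a pandas DataFrame as an HTML table when it is the last
    expression in a cell. limit() keeps the amount of data pulled to the
    driver small. Assumes pandas is installed.
    """
    return df.limit(n).toPandas()
```

In a notebook cell this could be used as `show_vertical(df2)` or, as the last line of the cell, `preview(df2)`. pandas may still elide middle columns of a very wide table; `pd.set_option("display.max_columns", None)` lifts that limit.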