I have been looking for an answer without success (1,2,3) and a lot of the questions I have found about string aggregation involves only string aggregation when all the columns are strings. This is a mixed aggregation with some specific details.

The df:

df = pd.DataFrame({

'Group': ['Group_1', 'Group_1','Group_1', 'Group_1', 'Group_2', 'Group_2', 'Group_2', 'Group_2', 'Group_2', 'Group_2'],

'Col1': ['A','A','B',np.nan,'B','B','C','C','C','C'],

'Col2': [1,2,3,3,5,5,5,7,np.nan,7],

'Col3': [np.nan, np.nan, np.nan,np.nan,3,2,3,4,5,5],

'Col4_to_Col99': ['some value','some value','some value','some value','some value','some value','some value','some value','some value','some value']

})

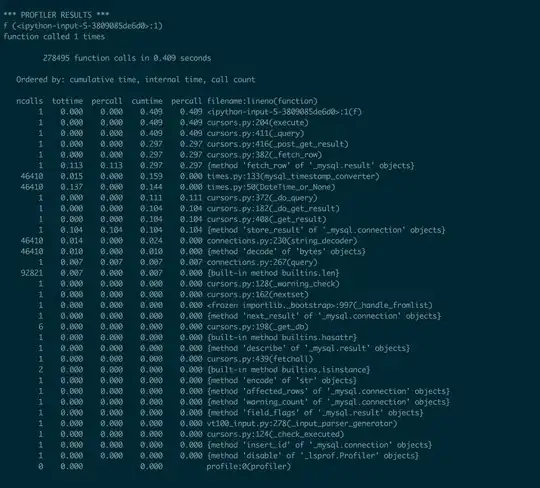

The function used for the output (source):

def join_non_nan_values(elements):

return ";".join([elem for elem in elements if elem == elem]) # elem == elem will fail for Nan values

The output:

df.groupby('Group')[['Col1', 'Col2', 'Col3']].agg({'Col1': join_non_nan_values, 'Col2': 'count', 'Col3':'mean'})

The output expected:

The output for Col1 and Col2 is a counting. The left side is the value, the right side is the count.

PD: If you know a more efficient way to implement join_non_nan_values function, you are welcome! (As it takes a while for it to run actually..) Just remember that it needs to skips missing values