I'm developing some Python code that will be used as entry points for various wheel-based workflows on Databricks. Since it's still under development, every time I change the code I need to build a wheel and deploy it to a Databricks cluster to test it (I use some functionality that's only available in the Databricks runtime, so I cannot run it locally).

Here is what I do:

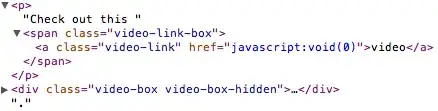

    REMOTE_ROOT='dbfs:/user/kash@company.com/wheels'
    cd /home/kash/workspaces/project
    rm -rf dist
    poetry build

    whl_file=$(ls -1tr dist/project-*-py3-none-any.whl | tail -1 | xargs basename)

    echo 'copying..' && databricks fs cp --overwrite dist/$whl_file $REMOTE_ROOT

    echo 'installing..' && databricks libraries install --cluster-id 111-222-abcd \
        --whl $REMOTE_ROOT/$whl_file

    # ---- I WANT TO AVOID THIS as it takes time ----
    echo 'restarting' && databricks clusters restart --cluster-id 111-222-abcd

    # Run the job that uses some modules from the wheel we deployed
    echo 'running job..' && dbk jobs run-now --job-id 1234567

The problem is that every time I change even one line, I need to restart the cluster, which takes 3-4 minutes. Unless I restart the cluster, `databricks libraries install` does not reinstall the wheel.

I've also tried bumping the wheel's version number. The GUI (Compute -> Select-cluster -> Libraries tab) then shows two versions of the same wheel installed on the cluster, but on the cluster itself the newer version is not actually installed (verified using `ls -l .../site-packages/`).
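
For reference, here is a minimal sketch of how the same check could be done from inside a job or notebook instead of with `ls`. It assumes the distribution is named `project` (guessed from the wheel file name pattern above), and `installed_version` is a hypothetical helper name, not part of any Databricks API. It only reports what the Python interpreter running the code can see.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the version of an installed distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "project" is an assumption based on the wheel name project-*-py3-none-any.whl.
    print("project version seen by this interpreter:", installed_version("project"))
```

Running this at the start of the job would show whether the interpreter sees the old version, the new one, or none at all, which may be easier to compare against what the Libraries tab reports.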