I have an input dataset of 80 days, where each day has 4 time series with 288 values each. So the actual shape is (80,4,288). I would like to cluster the different days. The 4 time series for every day are: outside temperature, solar radiation, electrical demand, and electricity prices. What I want is to group similar days into clusters based on these 4 time series combined. Days belonging to the same cluster should have similar time series.

Before clustering the days using k-means or Ward's method, I would like to scale them using scikit-learn. For this I have to transform the data into a 2-dimensional array with the shape (80, 4*288) = (80, 1152), as scikit-learn's StandardScaler does not accept 3-dimensional input. The StandardScaler standardizes features by removing the mean and scaling to unit variance.
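To make the reshaping step concrete, here is a minimal sketch. It assumes the data sits in a NumPy array that I call `data_3d` here (a hypothetical name), and it uses random values as a stand-in for my real measurements:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Placeholder data: 80 days, 4 time series, 288 values each (random, not my real data)
rng = np.random.default_rng(0)
data_3d = rng.normal(size=(80, 4, 288))

# Flatten each day into one row: the 4 series are laid out one after another
data_2d = data_3d.reshape(80, 4 * 288)

# Series k of a day occupies columns k*288 up to (k+1)*288 (0-based, end exclusive)
assert np.array_equal(data_2d[0, 3 * 288:4 * 288], data_3d[0, 3])

# StandardScaler expects a 2D array of shape (n_samples, n_features)
scaled = StandardScaler().fit_transform(data_2d)

# Mean and standard deviation of every column, taken across the 80 days
col_means = scaled.mean(axis=0)
col_stds = scaled.std(axis=0)
```

With this layout StandardScaler treats each of the 1152 columns as its own feature, so the mean and variance are computed per column across the 80 days, not per day.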

Now I scale this data using scikit-learn's StandardScaler:

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Read the unscaled data with shape (80, 1152)
data_Unscaled = pd.read_csv("C:/Users/User1/Desktop/data_Unscaled.csv", sep=";")

# Standardize every column to zero mean and unit variance
scaler = StandardScaler()
data_Scaled = scaler.fit_transform(data_Unscaled)

# Write the scaled data back to a csv file
np.savetxt("C:/Users/User1/Desktop/data_Scaled.csv", data_Scaled, delimiter=";")
```

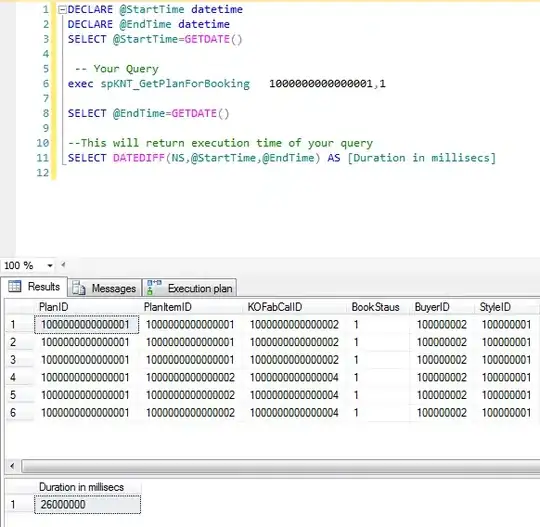

When I now compare the unscaled and scaled data e.g. for the first day (1 row) and the 4th time series (columns 864 - 1152 in the csv file), the results look quite strange as you can see in the following figure:

As far as I can see, the two curves are not in line with each other. For example, in the timeslots between 111 and 201 the unscaled data does not change at all, whereas the scaled data fluctuates. I can't explain that. Do you have any idea why this is happening and why they don't seem to be in line?
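For reference, this is roughly how the two curves in the comparison can be extracted. It is only a sketch: the arrays `unscaled` and `scaled` are hypothetical names and hold random placeholder values, not the contents of my csv files.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Placeholder for the (80, 1152) input (random values, not my real data)
rng = np.random.default_rng(1)
unscaled = rng.normal(size=(80, 1152))
scaled = StandardScaler().fit_transform(unscaled)

day = 0      # first day (first row)
series = 3   # 4th time series (0-based index 3)

# Column range of the 4th series: 864 up to 1152 (0-based, end exclusive)
start, stop = series * 288, (series + 1) * 288

unscaled_day = unscaled[day, start:stop]
scaled_day = scaled[day, start:stop]

# The timeslots 111 to 201 that I am looking at in the figure
print(unscaled_day[111:202])
print(scaled_day[111:202])
```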

Here is the unscaled input data with shape (80,1152): https://filetransfer.io/data-package/CfbGV9Uk#link

and here is the scaled output with shape (80,1152): https://filetransfer.io/data-package/23dmFFCb#link