tl;dr: when I calculate and visualize an FFT for any audio sample, the visualization is full of background noise to the point of swallowing the signal. Why?

Full question/details: I'm (long-term) attempting to make an audio fingerprinter following the blog post here. The code given is incomplete and this is my first time doing this kind of audio processing, so I'm filling in blanks in both the code and my knowledge as I go.

The post first explains running the audio sample through a windowed FFT. I'm using the Apache Commons FastFourierTransform class for this, and I've sanity checked some very simple bit patterns against their computed FFTs with good results.

The post then detours into making a basic spectrum analyzer to confirm that the FFT is working as intended, and here's where I see my issue.

The post's spectrum analyzer is very simple code. results is a Complex[][] containing the raw results of the FFT.

for(int i = 0; i < results.length; i++) {

int freq = 1;

for(int line = 1; line < size; line++) {

// To get the magnitude of the sound at a given frequency slice

// get the abs() from the complex number.

// In this case I use Math.log to get a more managable number (used for color)

double magnitude = Math.log(results[i][freq].abs()+1);

// The more blue in the color the more intensity for a given frequency point:

g2d.setColor(new Color(0,(int)magnitude*10,(int)magnitude*20));

// Fill:

g2d.fillRect(i*blockSizeX, (size-line)*blockSizeY,blockSizeX,blockSizeY);

// I used a improviced logarithmic scale and normal scale:

if (logModeEnabled && (Math.log10(line) * Math.log10(line)) > 1) {

freq += (int) (Math.log10(line) * Math.log10(line));

} else {

freq++;

}

}

}

The post's visualization results as shown are good quality. This is a picture of a sample from Aphex Twin's song "Equation", which has an image of the artist's face encoded into it:

Indeed, when I take a short sample from the song (starting around 5:25) and run it through the online spectrum analyzer here for a sanity check, I get a pretty legible rendition of the face:

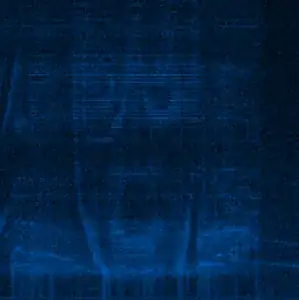

But my own results on the exact same audio file are a lot noisier, to the point that I have to mess with the spectrum analyzer's colors just to get something to show at all, and I never get to see the full face:

I get this kind of heavy background noise with any audio sample I try, across a variety of factors - MP3 or WAV, mono or stereo, short sample or long sample, a simple audio pattern or a complex song.

I've experimented with different FFT window sizes, conversion from raw FFT frequency output to power or dB, and different ways of visualizing the FFT output just in case the issue is with the visualization. None of that has helped.

I looked up the WebAudio implementation behind the Academo online spectrum analyzer, and it looks like there's a lot going on there: a Blackman window instead of my simple rectangular window to smooth the audio sampling; an interesting FFT with a built-in multiplication by 1/N, which seems to match the Unitary normalization provided by Apache Commons' FFT class; a smoothing function on the frequency data; and conversion from frequency values to dB to top it all off. Just for fun, I tried mimicking the WebAudio setup, but with about the same or even worse noise in the results, which suggests the issue is in the FFT step rather than any of the pre or post processing. I'm not sure how this can be the case when the FFT passes my basic calculation checks. I suppose the issue could be in the audio reading step, that I'm passing garbage into the FFT and getting garbage back, but I've experimented with reading the audio file and immediately writing a copy back to disk, and the new copy sounds just fine.

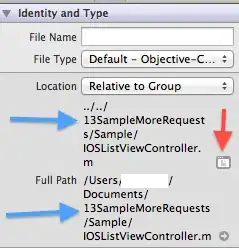

Here's a simplified version of my code that demonstrates the issue:

//Application.java

import java.io.File;

import java.io.IOException;

import javax.sound.sampled.AudioFormat;

import javax.sound.sampled.AudioInputStream;

import javax.sound.sampled.AudioSystem;

import javax.sound.sampled.UnsupportedAudioFileException;

public class Application {

public static void main(String[] args) {

sanityCheckFft();

File inputFile = new File("C:\\Aphex Twin face.mp3");

AudioProcessor audioProcessor = new AudioProcessor();

SpectrumAnalyzer debugSpectrumAnalyzer = new SpectrumAnalyzer();

try {

AudioInputStream audioStream = readAudioFile(inputFile);

byte[] bytes = audioStream.readAllBytes();

AudioFormat audioFormat = audioStream.getFormat();

FftChunk[] fft = audioProcessor.calculateFft(bytes, audioFormat, 4096);

debugSpectrumAnalyzer.debugFftSpectrum(fft);

}

catch (Exception e) {

e.printStackTrace();

}

}

//https://github.com/hendriks73/ffsampledsp#usage

private static AudioInputStream readAudioFile(File file) throws IOException, UnsupportedAudioFileException {

// compressed stream

AudioInputStream mp3InputStream = AudioSystem.getAudioInputStream(file);

// AudioFormat describing the compressed stream

AudioFormat mp3Format = mp3InputStream.getFormat();

// AudioFormat describing the desired decompressed stream

int sampleSizeInBits = 16;

int frameSize = 16 * mp3Format.getChannels() / 8;

AudioFormat pcmFormat = new AudioFormat(AudioFormat.Encoding.PCM_SIGNED,

mp3Format.getSampleRate(),

sampleSizeInBits,

mp3Format.getChannels(),

frameSize,

mp3Format.getSampleRate(),

mp3Format.isBigEndian());

// actually decompressed stream (signed PCM)

final AudioInputStream pcmInputStream = AudioSystem.getAudioInputStream(pcmFormat, mp3InputStream);

return pcmInputStream;

}

private static void sanityCheckFft() {

AudioProcessor audioProcessor = new AudioProcessor();

//pattern 1: one block

byte[] bytePattern1 = new byte[] {2, 1, -1, 5, 0, 3, 0, -4};

FftChunk[] fftResults1 = audioProcessor.calculateFft(bytePattern1, null, 8);

//expected results: [6 + 0J, -5.778 - 3.95J, 3 + -3J, 9.778 - 5.95J, -4 + 0J, 9.778 + 5.95J, 3 + 3J, -5.778 + 3.95J]

//expected results verified with https://engineering.icalculator.info/discrete-fourier-transform-calculator.html

//pattern 2: two blocks

byte[] bytePattern2 = new byte[] {2, 1, -1, 5, 0, 3, 0, -4};

FftChunk[] fftResults2 = audioProcessor.calculateFft(bytePattern1, null, 4);

//expected results: [7 + 0J, 3 + 4J, -5 + 0J, 3 - 4J], [-1 + 0J, 0 - 7J, 1 + 0J, 0 + 7J]

//expected results verified with https://engineering.icalculator.info/discrete-fourier-transform-calculator.html

/* pattern 3

* "Try a signal of alternate ones and negative ones with zeros between each. (i.e. 1,0,-1,0, 1,0,-1,0, ...) For a real FFT of length 1024, this should give you a single peak at out[255] ( the 256th frequency bin)"

* - https://stackoverflow.com/questions/8887896/why-does-my-kiss-fft-plot-show-duplicate-peaks-mirrored-on-the-y-axis#comment11127476_8887896

*/

byte[] bytePattern3 = new byte[1024];

byte[] pattern3Phases = new byte[] {1, 0, -1, 0};

for (int pattern3Index = 0; pattern3Index < bytePattern3.length; pattern3Index++) {

int pattern3PhaseIndex = pattern3Index % pattern3Phases.length;

byte pattern3Phase = pattern3Phases[pattern3PhaseIndex];

bytePattern3[pattern3Index] = pattern3Phase;

}

FftChunk[] fftResults3 = audioProcessor.calculateFft(bytePattern3, null, 1024);

//expected results: 0s except for fftResults[256]

}

}

//AudioProcessor.java

import javax.sound.sampled.AudioFormat;

import org.apache.commons.math3.complex.Complex;

import org.apache.commons.math3.transform.DftNormalization;

import org.apache.commons.math3.transform.FastFourierTransformer;

import org.apache.commons.math3.transform.TransformType;

public class AudioProcessor {

public FftChunk[] calculateFft(byte[] bytes, AudioFormat audioFormat, int debugActualChunkSize) {

//final int BITS_PER_BYTE = 8;

//final int PREFERRED_CHUNKS_PER_SECOND = 60;

/* turn the audio bytes into chunks. Each chunk represents the audio played during a certain window of time, defined by the audio's play rate (frame rate * frame size = the number of bytes processed per second)

* and the number of chunks we want to cut each second of audio into.

* frame rate * frame size = 1 second worth of bytes

* if we divide each second worth of data into chunksPerSecond chunks, that gives us:

* 1 chunk in bytes = 1 second in bytes / chunksPerSecond

* 1 chunk in bytes = frame rate * frame size / chunksPerSecond

*/

//float oneSecondByteLength = audioFormat.getChannels() * audioFormat.getSampleRate() * (audioFormat.getSampleSizeInBits() / BITS_PER_BYTE);

//int preferredChunkSize = (int)(oneSecondByteLength / PREFERRED_CHUNKS_PER_SECOND);

//int actualChunkSize = getPreviousPowerOfTwo(preferredChunkSize);

int chunkCount = bytes.length / debugActualChunkSize;

FastFourierTransformer fastFourierTransformer = new FastFourierTransformer(DftNormalization.STANDARD);

FftChunk[] fftResults = new FftChunk[chunkCount];

//set up each chunk individually for FFT processing

for (int timeIndex = 0; timeIndex < chunkCount; timeIndex++) {

//to map the input into the frequency domain, we need complex numbers (we only use the normal half of the Complex, but we need to provide & receive the entire Complex value)

Complex[] currentChunkComplexRepresentation = new Complex[debugActualChunkSize];

for (int currentChunkIndex = 0; currentChunkIndex < debugActualChunkSize; currentChunkIndex++) {

//get the next byte in the current audio chunk

int currentChunkCurrentByteIndex = (timeIndex * debugActualChunkSize) + currentChunkIndex;

byte currentChunkCurrentByte = bytes[currentChunkCurrentByteIndex];

//put the time domain data into a complex number with imaginary part as 0

currentChunkComplexRepresentation[currentChunkIndex] = new Complex(currentChunkCurrentByte, 0);

}

//perform FFT analysis on the chunk

Complex[] currentChunkFftResults = fastFourierTransformer.transform(currentChunkComplexRepresentation, TransformType.FORWARD);

FftChunk fftResult = new FftChunk(currentChunkFftResults);

fftResults[timeIndex] = fftResult;

}

return fftResults;

}

}

//FftChunk.java

import org.apache.commons.math3.complex.Complex;

import lombok.Data;

import lombok.RequiredArgsConstructor;

@Data

@RequiredArgsConstructor

public class FftChunk {

private final Complex[] fftResults;

}

//SpectrumAnalyzer.java

import java.awt.Color;

import java.awt.Dimension;

import java.awt.Graphics;

import java.awt.Graphics2D;

import java.awt.image.BufferedImage;

import javax.swing.JComponent;

import javax.swing.JFrame;

import javax.swing.JScrollPane;

import javax.swing.ScrollPaneConstants;

import javax.swing.WindowConstants;

import org.apache.commons.math3.complex.Complex;

public class SpectrumAnalyzer {

private JFrame frame;

private SpectrumAnalyzerComponent spectrumAnalyzerComponent;

public void debugFftSpectrum(FftChunk[] spectrum) {

Dimension windowSize = new Dimension(1000, 600);

spectrumAnalyzerComponent = new SpectrumAnalyzerComponent();

JScrollPane scrollPanel = new JScrollPane(spectrumAnalyzerComponent);

scrollPanel.setHorizontalScrollBarPolicy(ScrollPaneConstants.HORIZONTAL_SCROLLBAR_ALWAYS);

scrollPanel.setPreferredSize(windowSize);

frame = new JFrame();

frame.add(scrollPanel);

frame.setSize(windowSize);

frame.setVisible(true);

frame.setDefaultCloseOperation(WindowConstants.EXIT_ON_CLOSE);

spectrumAnalyzerComponent.analyze(spectrum);

}

}

@SuppressWarnings("serial")

class SpectrumAnalyzerComponent extends JComponent {

private FftChunk[] spectrum;

private boolean useLogScale = true;

private int blockSizeX = 1;

private int blockSizeY = 1;

private BufferedImage cachedImage;

public void analyze(FftChunk[] spectrum) {

this.spectrum = spectrum;

if (spectrum == null) {

cachedImage = null;

}

else {

int newWidth = (spectrum.length * blockSizeX) + blockSizeX;

int newHeight = 0;

for (FftChunk audioChunk : spectrum) {

Complex[] chunkFftResults = audioChunk.getFftResults();

int chunkHeight = calculatePixelHeight(chunkFftResults);

if (chunkHeight > newHeight) {

newHeight = chunkHeight;

}

}

Dimension newSize = new Dimension(newWidth, newHeight);

this.setPreferredSize(newSize);

this.setSize(newSize);

this.revalidate();

cachedImage = new BufferedImage(newWidth, newHeight, BufferedImage.TYPE_INT_RGB);

drawSpectrum(cachedImage.createGraphics());

}

this.repaint(); //force an immediate redraw

}

@Override

public void paint(Graphics graphics) {

if (cachedImage != null) {

graphics.drawImage(cachedImage, 0, 0, null);

}

}

//based on the spectrum analyzer from https://www.royvanrijn.com/blog/2010/06/creating-shazam-in-java/

private void drawSpectrum(Graphics2D graphics) {

if (this.spectrum == null) {

return;

}

int windowHeight = this.getSize().height;

for (int timeIndex = 0; timeIndex < spectrum.length; timeIndex++) {

System.out.println(String.format("Drawing time chunk %d/%d", timeIndex + 1, spectrum.length));

FftChunk currentChunk = spectrum[timeIndex];

Complex[] currentChunkFftResults = currentChunk.getFftResults();

int fftIndex = 0;

int yIndex = 1;

/* each chunk contains N elements, where N is the size of the FFT window. The first N/2 elements are positive and the last N/2 elements are negative, but they're otherwise mirrors

* of each other. We only want the positive half.

* Additionally, because we're working with audio samples, our FFT is a "real" FFT (FFT on real numbers -

* https://stackoverflow.com/questions/8887896/why-does-my-kiss-fft-plot-show-duplicate-peaks-mirrored-on-the-y-axis/10744384#10744384 ), which produces a mirror of its own inside

* the positive elements. We need to further divide the positive elements in half. This leaves us with the first N/4 elements after all is said and done.

*/

while (fftIndex < currentChunkFftResults.length / 4) {

Complex currentChunkFftResult = currentChunkFftResults[fftIndex];

// To get the magnitude of the sound at a given frequency slice

// get the abs() from the complex number.

// In this case I use Math.log to get a more managable number (used for color)

double magnitude = Math.log10(currentChunkFftResult.abs() + 1);

// The more blue in the color the more intensity for a given frequency point:

/*int red = 0;

int green = (int) magnitude * 10;

int blue = (int) magnitude * 20;

graphics.setColor(new Color(red, green, blue));*/

float hue = (float)(magnitude / 255 * 100);

int colorValue = Color.HSBtoRGB(hue, 100, 50);

graphics.setColor(new Color(colorValue));

// Fill:

graphics.fillRect(timeIndex * blockSizeX, (windowHeight - yIndex) * blockSizeY, blockSizeX, blockSizeY);

// I used an improvised logarithmic scale and normal scale:

int normalScaleFrequencyDelta = 1;

int logScaleFrequencyDelta = (int)(Math.log10(yIndex) * Math.log10(yIndex));

if (logScaleFrequencyDelta < 1) {

logScaleFrequencyDelta = 1;

}

if (useLogScale) {

fftIndex = fftIndex + logScaleFrequencyDelta;

}

else {

fftIndex = fftIndex + normalScaleFrequencyDelta;

}

yIndex = yIndex + 1;

}

}

}

private int calculatePixelHeight(Complex[] fftResults) {

int fftIndex = 1;

int tempPixelCount = 1;

int pixelCount = 1;

while (fftIndex < fftResults.length / 4) {

pixelCount = tempPixelCount;

int normalScaleFrequencyDelta = 1;

int logScaleFrequencyDelta = (int)(Math.log10(tempPixelCount) * Math.log10(tempPixelCount));

if (logScaleFrequencyDelta < 1) {

logScaleFrequencyDelta = 1;

}

if (useLogScale) {

fftIndex = fftIndex + logScaleFrequencyDelta;

}

else {

fftIndex = fftIndex + normalScaleFrequencyDelta;

}

tempPixelCount = tempPixelCount + 1;

}

return pixelCount;

}

}

//build.gradle

plugins {

//Java application plugin

id 'application'

//Project Lombok plugin

id 'io.freefair.lombok' version '6.5.0.2'

}

repositories {

// Use Maven Central for resolving dependencies.

mavenCentral()

}

dependencies {

implementation 'com.tagtraum:ffsampledsp-complete:0.9.46'

implementation 'org.apache.commons:commons-math3:3.6.1'

}