I have Selenium opening many PDFs for me from Google Search (using f"https://www.google.com/search?q=filetype:pdf {search_term}" and then clicking the first link).

I want to know which pages contain my keyword WITHOUT downloading the pdf first. I believe I can use

Ctrl+F --> keyword --> {scrape page number} --> Tab (next keyword) --> {scrape page number} --> ... --> switch to next PDF

How can I accomplish the {scrape page number} part?

Context

For each PDF I need to grab these page numbers as a list, a Pandas DataFrame, or anything else I can later feed into camelot.read_pdf().
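To make the target format concrete: as far as I know, camelot.read_pdf() takes its pages argument as a comma-separated string such as '1,3,4' or '1,4-10'. Here is a minimal sketch of turning a list of scraped page numbers into that string. The helper name pages_to_camelot_arg is my own and not part of camelot.

```python
def pages_to_camelot_arg(pages):
    """Collapse page numbers into a string such as '3,7-9,151'.

    Hypothetical helper (not part of camelot): duplicates are dropped,
    the numbers are sorted, and consecutive runs become 'start-end'.
    """
    unique = sorted(set(pages))
    if not unique:
        return ""

    ranges = []
    start = prev = unique[0]
    for page in unique[1:]:
        if page == prev + 1:
            # Still inside a consecutive run, so extend it.
            prev = page
            continue
        ranges.append((start, prev))
        start = prev = page
    ranges.append((start, prev))

    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


print(pages_to_camelot_arg([151, 7, 8, 9, 3, 7]))
```

The resulting string would then be passed along the lines of camelot.read_pdf(path, pages=pages_to_camelot_arg(found_pages)), where path and found_pages are placeholders for the PDF location and the scraped numbers.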

The idea is also that, once I have these page numbers, I can selectively download only those pages and save on storage, memory, and network bandwidth rather than downloading and parsing the entire PDF.

Using BeautifulSoup

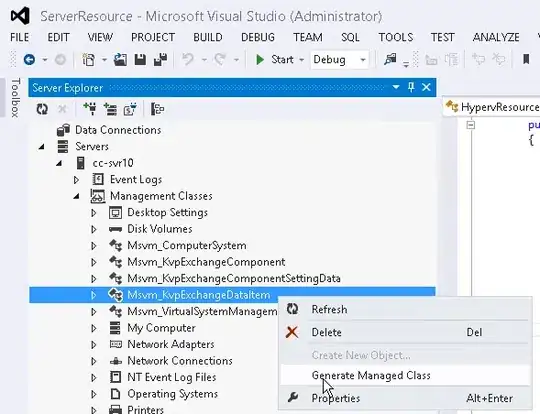

The browser's PDF viewer shows a small gray box at the top with the current page number and the total page count, with the option to jump around the PDF.

<input data-element-focusable="true" id="pageselector" class="c0191 c0189" type="text" value="151" title="Page number (Ctrl+Alt+G)" aria-label="Go to any page between 1 and 216">

The value in this input tag contains the number I am looking for.
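To show what I mean by reading that value, here is a rough sketch that pulls the current page and the total page count out of the tag above. It assumes the viewer's HTML can be obtained as a string in the first place, which I have not confirmed. I use the standard library's html.parser instead of BeautifulSoup so it runs without extra installs, and the names PageSelectorParser and read_page_selector are made up for illustration.

```python
import re
from html.parser import HTMLParser


class PageSelectorParser(HTMLParser):
    """Collects the page info from <input id="pageselector" ...>."""

    def __init__(self):
        super().__init__()
        self.current_page = None
        self.total_pages = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag != "input" or attrs.get("id") != "pageselector":
            return
        # The value attribute holds the page currently shown.
        value = attrs.get("value") or ""
        if value.isdigit():
            self.current_page = int(value)
        # The aria-label reads "Go to any page between 1 and 216".
        label = attrs.get("aria-label") or ""
        match = re.search(r"between\s+\d+\s+and\s+(\d+)", label)
        if match:
            self.total_pages = int(match.group(1))


def read_page_selector(html):
    """Return (current_page, total_pages); either may be None."""
    parser = PageSelectorParser()
    parser.feed(html)
    return parser.current_page, parser.total_pages


SAMPLE = (
    '<input data-element-focusable="true" id="pageselector" '
    'class="c0191 c0189" type="text" value="151" '
    'title="Page number (Ctrl+Alt+G)" '
    'aria-label="Go to any page between 1 and 216">'
)

print(read_page_selector(SAMPLE))  # (151, 216)
```

One caveat I am aware of: in a live browser the value attribute in the markup may lag behind what the box currently displays, so reading the element's live value through Selenium may be more reliable than parsing a snapshot of the HTML.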

Other SO answers

I'm aware that reading PDFs programmatically is challenging. I'm currently using this function (finding on which page a search string is located in a pdf document using python) to search the PDF pages, but only after downloading the whole PDF first. Chrome searches PDFs pretty quickly with Ctrl+F, which makes me think I can use browser functionality to collect this data, and I've already seen the page number in the box at the top.

How can I collect the page numbers of a PDF on which a keyword appears?