I have read NumPy's tutorial and C-API guide, and from that helpful documentation I learned how to extend NumPy with my own C code and how to call NumPy functions from C.

However, what I really want to know is how to trace the call chain from Python code down to the C implementation. In other words, how can I tell which part of NumPy's C code implements this simple array addition?

```python
import numpy as np

x = np.array([1, 2, 3])
y = np.array([1, 2, 3])

print(x + y)
```
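A quick Python-level check can narrow the search before reaching for a debugger. This is a minimal sketch, assuming plain ndarrays (no subclass or `__array_ufunc__` override): `x + y` goes through `ndarray.__add__`, which hands the work to the `np.add` ufunc, and the ufunc's `types` list shows which typed inner loop gets picked for these dtypes. `loop_signature` is a hypothetical helper written for this example, not a NumPy API.

```python
import numpy as np

def loop_signature(ufunc, *arrays):
    """Return the ufunc type signature (such as 'll->l') whose input type
    characters exactly match the given arrays' dtypes, or None."""
    wanted = "".join(a.dtype.char for a in arrays)
    for sig in ufunc.types:
        inputs, _, _ = sig.partition("->")
        if inputs == wanted:
            return sig
    return None

x = np.array([1, 2, 3])
y = np.array([1, 2, 3])

# For plain ndarrays, x + y is dispatched to the np.add ufunc,
# so both spellings produce the same result.
assert isinstance(np.add, np.ufunc)
assert np.array_equal(x + y, np.add(x, y))

# The matching signature tells you which typed C inner loop ran.
print(x.dtype, loop_signature(np.add, x, y))
```

The typed inner loops live in NumPy's umath sources (`numpy/core/src/umath` before NumPy 2.0, `numpy/_core/src/umath` after); file and loop names change between versions, so treat the signature as a hint for what to grep for rather than an exact symbol name.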

Can I use a tool like gdb to step through the stack frames one by one?

Or can I find the corresponding code directly from the naming conventions? (For example, if I want the addition code, could I search for a function named something like PyNumpyArrayAdd(...)?)
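Searching by name does work reasonably well once you know roughly what to look for. The sketch below is a plain-text heuristic, not a C parser: it assumes NumPy follows the CPython-style layout where the return type sits on its own line and the function name starts the next line (for example `static PyObject *` followed by `array_add(...)`). `find_definitions` is a hypothetical helper for illustration.

```python
import re
from pathlib import Path

def find_definitions(src_root, name_pattern):
    """Yield (path, line_number, line) for lines in C/C++ sources and .c.src
    templates under src_root that look like the start of a function
    definition whose name matches the regex name_pattern."""
    # Name at column 0 followed by "(": the usual definition layout.
    # Calls and declarations are normally indented, so they don't match.
    regex = re.compile(r"^(" + name_pattern + r")\s*\(")
    for path in sorted(Path(src_root).rglob("*")):
        if not path.is_file() or path.suffix not in {".c", ".h", ".cpp", ".src"}:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if regex.match(line):
                yield path, lineno, line.rstrip()
```

Usage, assuming you are in the root of a NumPy checkout: `for path, n, line in find_definitions("numpy/core/src", r"array_add"): print(f"{path}:{n}: {line}")`. A regex such as `r"\w*_add"` casts a wider net when you don't know the exact name.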

[EDIT] I found a very useful video that shows how to locate the C implementation of basic C-implemented functions and operator overloads such as "+" and "-":

https://www.youtube.com/watch?v=mTWpBf1zewc

I got this from Andras Deak via the NumPy mailing list.

[EDIT2] There is another way to track every function NumPy calls, using gdb. It is very heavy, because it displays every NumPy function that gets called, including trivial ones, and it can take a while to run.

First, download or clone the NumPy repository into your own workspace and build it with the -g compiler option, which attaches debug information for debugging.
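A minimal sketch of such a debug build, assuming a NumPy checkout old enough to still build through `setup.py` and a build that honours the standard `CFLAGS` environment variable (recent NumPy releases build with Meson instead, so this may not apply to yours). `debug_build_command` is a hypothetical helper; it only assembles the command and does not run it.

```python
import os
import sys

def debug_build_command(source_dir):
    """Return (argv, env, cwd) for an in-place debug build of a NumPy checkout."""
    env = dict(os.environ)  # copy, so the current process env is untouched
    # -g keeps debug symbols; -O0 stops the optimiser from inlining or
    # eliding small functions, so more of them appear in gdb backtraces.
    env["CFLAGS"] = "-O0 -g"
    argv = [sys.executable, "setup.py", "build_ext", "--inplace"]
    return argv, env, source_dir
```

You would then run it with `subprocess.run(argv, env=env, cwd=cwd, check=True)`.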

Then open a terminal in the "path/to/numpy-main" directory, where NumPy's setup.py lies, and start gdb on the Python interpreter (for example with `gdb --args python`).
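For a single statement, it can be quicker to let gdb run Python non-interactively and stop at one function. This sketch only builds the gdb command line; the breakpoint name `array_add` is an assumption (in the NumPy sources I know, it implements the `nb_add` slot in `multiarray/number.c`). The breakpoint is made pending because NumPy's extension modules are only loaded once the snippet runs `import numpy`. `gdb_command` is a hypothetical helper.

```python
import shlex
import sys

def gdb_command(snippet, breakpoint):
    """Build a gdb command line that runs `python -c snippet`, stops at
    `breakpoint`, and prints a backtrace when it is hit."""
    return [
        "gdb",
        "-ex", "set breakpoint pending on",  # symbol isn't loaded yet
        "-ex", f"break {breakpoint}",
        "-ex", "run",
        "-ex", "bt",
        "--args", sys.executable, "-c", snippet,  # --args must come last
    ]

cmd = gdb_command("import numpy as np; x = np.array([1, 2, 3]); print(x + x)",
                  "array_add")
print(shlex.join(cmd))
```

Run the printed command from the checkout directory so that `import numpy` picks up your debug build rather than an installed copy.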

If you want to know which functions in NumPy's C implementation are called by this single Python statement:

```python
y = np.exp(x)
```

you can set breakpoints on every function implemented by NumPy using the gdb Python script provided by the first answer to the question "Can gdb set break at every function inside a directory?".

Once you have loaded that script with `source somename.py`, run this command in gdb: `rbreak-dir numpy/core/src`
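If you can't use that script, a different technique gets a similar effect: gdb's built-in `rbreak FILE:REGEX` form, where a regex of `.` matches every function in that file. This sketch generates one such command per C source under a directory. It assumes `.c.src` templates are compiled as a generated file with the same name minus `.src`, since gdb only sees the generated file. `rbreak_commands` is a hypothetical helper.

```python
from pathlib import Path

def rbreak_commands(src_dir):
    """Return one `rbreak FILE:.` gdb command per C source file under src_dir."""
    names = set()  # a set, so duplicate basenames yield one command
    for path in Path(src_dir).rglob("*"):
        if path.name.endswith(".c"):
            names.add(path.name)
        elif path.name.endswith(".c.src"):
            names.add(path.name[: -len(".src")])
    # "." as the regex matches every function defined in that file.
    return [f"rbreak {name}:." for name in sorted(names)]
```

Write the result to a file, for example `Path("breaks.gdb").write_text("\n".join(rbreak_commands("numpy/core/src")) + "\n")`, and load it in gdb with `source breaks.gdb`. Expect this to be slow with thousands of functions.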

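Then attach a command list to those breakpoints:

```
commands 1-5004
silent
bt 1
c
end
```

(gdb shows a `>` prompt while you type each line of the list. Here 1-5004 is the range of breakpoint numbers the commands apply to; adjust it to the breakpoints you actually have, which `info breakpoints` lists.)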
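To avoid retyping this each session, the same command list can live in a file that gdb loads with `source`. This sketch also adds `set pagination off`, a real gdb setting, so that thousands of trace lines don't stall at gdb's "press return" prompt. `trace_commands` is a hypothetical helper, and the breakpoint range is whatever your session reports.

```python
def trace_commands(first_bp, last_bp, frames=1):
    """Return gdb commands that make breakpoints first_bp..last_bp print
    `frames` backtrace frame(s) and then continue instead of stopping."""
    return "\n".join([
        "set pagination off",   # don't pause when output fills the screen
        f"commands {first_bp}-{last_bp}",
        "silent",               # suppress the usual "Breakpoint N, ..." banner
        f"bt {frames}",         # print only the innermost frame(s)
        "continue",
        "end",
    ]) + "\n"
```

For example, write `trace_commands(1, 5004)` to `trace.gdb` and run `source trace.gdb` after the breakpoints exist.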

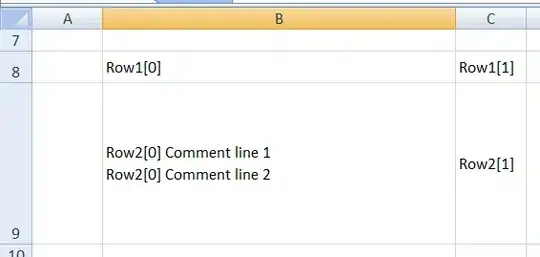

Once a breakpoint is activated, this command will run and print the first layer of backtrace (which is the info of the current function you are in) and then continue. In this way, you can track all the functions in Numpy, and this is a pic from my own working environment (I took a snapshot since there are rules preventing copying any byte from working computer):
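Because the trace is long and full of trivial calls, it helps to post-process it. This sketch assumes you saved gdb's output to a text file (for example with gdb's `set logging` commands or by redirecting the terminal) and that the frames use gdb's default format, where `bt 1` prints a `#0` line with the function name, sometimes preceded by an address and `in`. `called_functions` is a hypothetical helper.

```python
import re
from collections import Counter

# Matches the "#0" line printed by `bt 1`, with or without a leading
# program-counter address ("#0  0x... in func (...)").
FRAME_RE = re.compile(r"^#0\s+(?:0x[0-9a-fA-F]+\s+in\s+)?([\w.$]+)\s*\(")

def called_functions(log_text):
    """Return (calls in order, Counter of call counts) from gdb output."""
    calls = [m.group(1) for line in log_text.splitlines()
             if (m := FRAME_RE.match(line))]
    return calls, Counter(calls)
```

For example, `calls, counts = called_functions(Path("gdb.txt").read_text())` followed by `print(counts.most_common(20))` shows the most frequently hit functions, which are usually the trivial helpers you may want to filter out; `dict.fromkeys(calls)` keeps the first-call order without duplicates.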

I hope my experiments help future readers.