I would like to scrape data from this page: https://www.investing.com/equities/nvidia-corp-financial-summary.

There are two buttons that I'd like to click:

- Accept button: checking the element, its XPath is //*[@id="onetrust-accept-btn-handler"]

I replicated the steps from this question, "Clicking a button with selenium using Xpath doesn't work":

    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait

    wait = WebDriverWait(driver, 5)
    link = wait.until(EC.element_to_be_clickable((By.XPATH, "//*[@id="onetrust-accept-btn-handler")))

I got the error: SyntaxError: invalid syntax
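One likely cause of the SyntaxError is the quoting: the XPath contains double quotes around the id value, and the Python string around it is also delimited with double quotes, so the string ends right after `@id=`. The following is only a minimal sketch that checks this with the standard library and no browser. `is_valid_python` is a hypothetical helper, not part of Selenium, and the `broken` line is a simplified variant of the statement above.

```python
import ast

# Single quotes outside, double quotes inside: the id value stays in the string.
ACCEPT_XPATH = '//*[@id="onetrust-accept-btn-handler"]'


def is_valid_python(source: str) -> bool:
    """Return True if `source` parses as Python, False if it raises SyntaxError."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


# Double quotes nested in double quotes: the literal ends after `@id=`.
broken = 'xpath = "//*[@id="onetrust-accept-btn-handler"]"'
# Same statement with single quotes as the outer delimiters.
fixed = "xpath = '//*[@id=\"onetrust-accept-btn-handler\"]'"

print(is_valid_python(broken))
print(is_valid_python(fixed))
```

If this is indeed the cause, writing the locator as `(By.XPATH, ACCEPT_XPATH)` should get past the parser. Whether the element is then found is a separate question.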

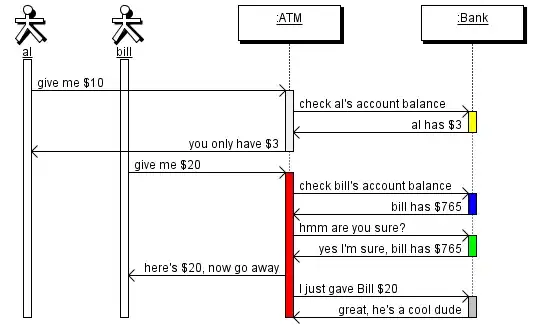

- Annual button: there is a toggle between Annual and Quarterly (the default is Quarterly).

XPath is //*[@id="leftColumn"]/div[9]/a[1]

wait.until(EC.element_to_be_clickable((By.XPATH, "//*[@id="leftColumn"]/div[9]/a[1]")))

This also returned SyntaxError: invalid syntax.

Updated Code

    import time

    from selenium import webdriver
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait

    company = 'nvidia-corp'
    # path is the location of the chromedriver executable, defined elsewhere
    driver = webdriver.Chrome(path)
    driver.get(f"https://www.investing.com/equities/{company}-financial-summary")

    # accept the consent banner
    wait = WebDriverWait(driver, 2)
    accept_link = wait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="onetrust-accept-btn-handler"]')))
    accept_link.click()

    scrollDown = "window.scrollBy(0,500);"
    driver.execute_script(scrollDown)
    #scroll down to get the page data below the first scroll
    driver.maximize_window()
    time.sleep(10)

    wait = WebDriverWait(driver, 2)
    scrollDown = "window.scrollBy(0,4000);"
    driver.execute_script(scrollDown)
    #scroll down to get the page data below the first scroll

    # close the sign-up popup if it appears
    try:
        close_popup_link = wait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="PromoteSignUpPopUp"]/div[2]/i')))
        close_popup_link.click()
    except NoSuchElementException:
        print('No such element')

    # switch the toggle from Quarterly to Annual
    wait = WebDriverWait(driver, 3)
    try:
        annual_link = wait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="leftColumn"]/div[9]/a[1]')))
        annual_link.click()
        # break
    except NoSuchElementException:
        print('No element of that id present!')

The first button (Accept) was clicked successfully, but waiting for the Annual button to become clickable raises a TimeoutException.

The button is labeled "Annual" and its XPath is: //*[@id="leftColumn"]/div[9]/a[1]
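A positional path such as `div[9]/a[1]` depends on the exact layout of the page when it loads, which may be one reason the wait times out. As a rough sketch, the locator could be built from the visible label instead. This assumes the toggle is an `<a>` element inside `leftColumn` whose text is exactly "Annual", which has not been checked against the live page. `xpath_literal` and `xpath_for_link_text` are hypothetical helpers, not Selenium functions.

```python
def xpath_literal(text: str) -> str:
    """Quote `text` as an XPath 1.0 string literal."""
    if '"' not in text:
        return f'"{text}"'
    if "'" not in text:
        return f"'{text}'"
    # Both quote characters present: stitch the pieces together with concat().
    parts = text.split('"')
    return "concat(" + ", '\"', ".join(f'"{p}"' for p in parts) + ")"


def xpath_for_link_text(text: str, container_id: str = "leftColumn") -> str:
    """Build an XPath for an <a> inside the container whose trimmed text equals `text`."""
    return f'//*[@id="{container_id}"]//a[normalize-space()={xpath_literal(text)}]'


# Assumed label, taken from the button text described in the question.
ANNUAL_XPATH = xpath_for_link_text("Annual")
print(ANNUAL_XPATH)
```

The resulting string could be passed as `(By.XPATH, ANNUAL_XPATH)` in place of the positional path in the `wait.until(...)` call.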

I have added a new image to make it clearer. Can you see it? – Luc Sep 03 '22 at 18:31