I'm running into an interesting problem with LightningChart where it seems to be corrupting or otherwise decimating my data depending on how far it is from the DateOrigin of the chart. My data is sampled at 1000 samples per second, and I am trying to display 1-2 weeks of data at a time. I am using the ChartXY class with the x-axis type set to "linear-highPrecision", which should have 1 ms accuracy; that is all I need. I am creating a LineSeries whose data pattern is 'ProgressiveX' with regularProgressiveStep: true.

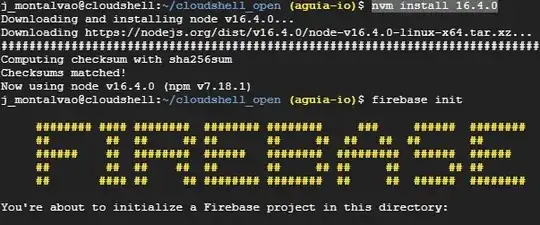

Here's what it looks like when the data is plotted near the DateOrigin.

Here's what it looks like zoomed in on the data near the DateOrigin.

That looks fantastic! LightningChart is doing exactly what I want!

However, I would like this data to be offset correctly to show its true absolute time.

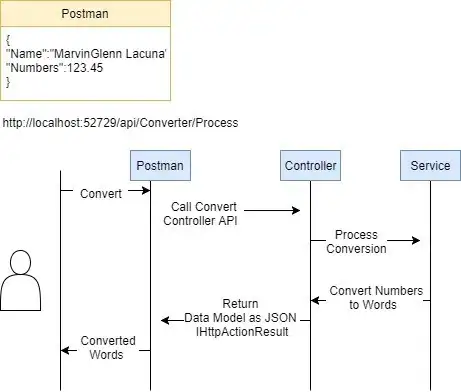

Here's what it looks like when I offset this data by 14 days. My code to set the relative offset looks like this:

    // step = 1 ms between samples, start = 14 days in milliseconds
    ds.addArrayY(curve.data, 1, 14 * 24 * 60 * 60 * 1000)
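In my real code the offset comes from timestamps rather than a hard-coded 14 days. A minimal sketch of that calculation follows; `offsetFromOriginMs` and the two example dates are hypothetical names and values used only for illustration, not part of LightningChart or of my actual data.

```javascript
// Hypothetical helper (not a LightningChart API): milliseconds between
// the chart's date origin and the first sample of a curve.
function offsetFromOriginMs(dateOrigin, firstSampleTime) {
  return firstSampleTime.getTime() - dateOrigin.getTime();
}

// Made-up example dates, 14 days apart in UTC.
const exampleOrigin = new Date(Date.UTC(2021, 0, 1));
const exampleStart = new Date(Date.UTC(2021, 0, 15));

const startMs = offsetFromOriginMs(exampleOrigin, exampleStart);
console.log(startMs === 14 * 24 * 60 * 60 * 1000); // true
```

The result is what I pass as the `start` argument, so the X values grow with the distance from the DateOrigin.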

Ok, it looks alright zoomed out, but what if we zoom in?

It's gone haywire! It looks like the X values are being coerced to some larger step along the X axis, and it gets worse the further you go from the DateOrigin. My fear is that this is some built-in behavior of the engine and I am expecting too much. However, it says it has 1 ms resolution, so I expect that to be respected.
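To test my suspicion outside the chart, here is a small sketch that assumes (I have not confirmed this) that X values pass through 32-bit floats somewhere in the rendering path. It uses the standard `Math.fround` to round each timestamp to the nearest 32-bit float and counts how many distinct X values survive for one second of 1 kHz data. `countDistinctFloat32` is a hypothetical helper name.

```javascript
// Assumption: X values are stored as 32-bit floats at some stage.
// Math.fround rounds a number to the nearest 32-bit float value.
function countDistinctFloat32(startMs, sampleCount, stepMs) {
  const seen = new Set();
  for (let i = 0; i < sampleCount; i++) {
    seen.add(Math.fround(startMs + i * stepMs));
  }
  return seen.size;
}

const FOURTEEN_DAYS_MS = 14 * 24 * 60 * 60 * 1000;

// One second of 1 kHz data: at the origin, and 14 days away from it.
const nearOrigin = countDistinctFloat32(0, 1000, 1);
const offset14Days = countDistinctFloat32(FOURTEEN_DAYS_MS, 1000, 1);

console.log({ nearOrigin, offset14Days });
```

Near the origin every sample keeps its own X value, while at the 14-day offset many samples collapse onto the same X, which would look a lot like the stepping in my zoomed-in screenshot. That is only consistent with the symptom; it does not prove this is what the engine does.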

Here's how I create the chart.

    // Create a Line Chart.
    const PumpsChart = lightningChart().ChartXY({
        // Place the chart into the div with id 'PumpsChart'.
        // The chart's size automatically adjusts to the div's size.
        theme: Themes.lightGradient,
        container: 'PumpsChart',
        defaultAxisX: {
            type: 'linear-highPrecision'
        }
    })
        .setTitle('') // Set chart title
        .setTitleFont(new FontSettings({
            family: waveChartFontFamily,
            size: 20
        }))
        .setMouseInteractionWheelZoom(false)

    axisPumpsChartDateTime = PumpsChart.getDefaultAxisX()
        .setTickStrategy(
            AxisTickStrategies.DateTime,
            (tickStrategy) => tickStrategy.setDateOrigin(waveDateOrigin)
        )

    axisPumpsChartPressurePSI = PumpsChart.getDefaultAxisY()
        .setTitle("Pressure (PSI)")
        .setInterval(0, 10000, 0, true)

Here's how I create the LineSeries:

    newDataSeries = targetChart.chart.addLineSeries({
        yAxis: targetChart.axis,
        dataPattern: {
            pattern: 'ProgressiveX',
            regularProgressiveStep: true,
        }
    });

Here's how I add data to the chart:

    // step = 1 ms between samples, start = 14 days in milliseconds
    ds.addArrayY(curve.data, 1, 14 * 24 * 60 * 60 * 1000)

I would prefer not to use AxisTickStrategies.Time over AxisTickStrategies.DateTime for a few reasons: my data spans weeks, and 100 hours is too little; I am fine with millisecond resolution and don't need more than that; and I need to present my data in relative and not absolute time.

Hopefully there's some parameter that I am missing that I can adjust to achieve this.

EDIT

Well, this corruption also happens with the Time tick strategy when the data is offset -636 hours relative to the origin. I tried this with and without ProgressiveX set as DataPattern.pattern.

**** EDIT 2 ****

Well, I even tried downsampling to 20 samples per second and changed the tick strategy back to AxisTickStrategies.DateTime. It's "squishing" all the points to this magic 0.25 second interval for some reason.
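That 0.25 second step is suspiciously close to 256 ms, which is the gap between adjacent 32-bit floats for values between 2^31 and 2^32 (roughly 24.9 to 49.7 days when X is in milliseconds). An offset of 636 hours (26.5 days) falls in that range. I don't know whether the engine really stores X as 32-bit floats, so treat the following as a sketch under that assumption; `float32SpacingAt` is a hypothetical helper.

```javascript
// Gap between adjacent 32-bit floats around x.
// A float32 has a 24-bit significand, so the gap is 2^(exponent - 23).
// Valid for nonzero x in the normal float32 range.
function float32SpacingAt(x) {
  const exponent = Math.floor(Math.log2(Math.abs(x)));
  return 2 ** (exponent - 23);
}

const HOUR_MS = 60 * 60 * 1000;

// Distances from the DateOrigin, in hours (14 days = 336 hours).
const offsetsHours = [1, 24, 14 * 24, 636];
for (const hours of offsetsHours) {
  const spacing = float32SpacingAt(hours * HOUR_MS);
  console.log(`${hours} h from origin: ${spacing} ms between representable X values`);
}
```

If this is the cause, the step should double each time the offset crosses a power of two in milliseconds, which would match the behavior getting worse further from the DateOrigin.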