I have 3 tables Countries, states and cities,cites table have name, and Foreign key for states, i added 3 more fields lat, lang and captial in cites tables. in cites table i have 46,000 around data.

I have a master Table contain around 4.3 m records, which contain all fields which i need in a single table. my code is given

import pandas as pd

import pymysql

import time

from Levenshtein import distance

import csv

from functools import lru_cache

start_time = time.time()

connection=pymysql.connect(

host="localhost",

user="admin",

password="Adxxxxx3",

port=3306,

db="rixxxx")

mycursor = connection.cursor()

mycursor.execute("SET FOREIGN_KEY_CHECKS=0")

mycursor.execute("SELECT ci.name as city_Name, ci.id as city_id, s.name as state_name, s.id as state_id, co.name as country_name, co.id as country_id FROM countrycap_states s inner join countrycap_cities ci on ci.state_id = s.id inner join countrycap_countries co on co.id = s.country_id ORDER BY `country_name` ASC ")

count_val = mycursor.fetchall()

with open('zzzzcities_unfilled.csv', 'w', encoding='UTF8') as f:

for old_data in count_val:

try:

query_main_simp = "SELECT country, state, city,capital, latitude,longitude FROM `countrycap_simplecountry` where country = '{0}' and state = '{1}' and city = '{2}'".format(old_data[4], old_data[2], old_data[0])

mycursor.execute(query_main_simp)

ins_data = mycursor.fetchall()[0]

# print(ins_data[3])

if (ins_data[3] == 'admin') or (ins_data[3] == 'primary'):

in_ss ="UPDATE countrycap_cities w JOIN countrycap_states x on w.state_id=x.id JOIN countrycap_countries co on co.id=x.country_id SET w.latitude = {0}, w.longitude = {1}, w.capital_city_admin = '{2}' WHERE w.name='{3}' and co.name='{4}' and x.name ='{5}'".format(ins_data[4],ins_data[5],ins_data[3],ins_data[2],ins_data[0],ins_data[1])

mycursor.execute(in_ss)

# print(in_ss)

connection.commit()

elif (ins_data[3] == 'minor') or (ins_data[3] == ''):

in_ss ="UPDATE countrycap_cities w JOIN countrycap_states x on w.state_id=x.id JOIN countrycap_countries co on co.id=x.country_id SET w.latitude = {0}, w.longitude = {1} WHERE w.name='{2}' and co.name='{3}' and x.name ='{4}'".format(ins_data[4],ins_data[5],ins_data[2],ins_data[0],ins_data[1])

mycursor.execute(in_ss)

# print(in_ss)

connection.commit()

except Exception as e:

writer = csv.writer(f)

writer.writerow(old_data)

print("--- %s seconds ---" % (time.time() - start_time))

This Script takes hours(5-6) to complete task, i want make it quick in minutes. i have to filled lat and long for 46,000 only, what is best possible strategy to make script fast.

Thanks for your time.

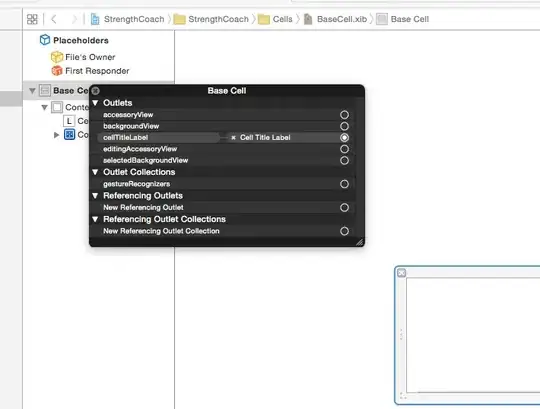

Here i'm attaching schema for related table, country, state and city

and Simple map country from where i have to fetch lat, long.enter image description here