I've written a simple script to understand how AzureML and Azure Storage interact in AzureML CLI v2.

I would like to download the MNIST dataset and store it in a datastore.

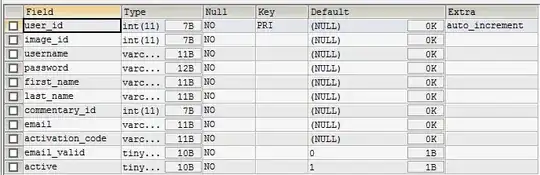

First, I declared my datastore in AzureML.

Then I wrote a very simple script to download the MNIST dataset (via torchvision):

    import os
    import argparse
    import logging

    from torchvision.datasets import MNIST, CIFAR10


    def main():
        """Main function of the script."""
        # input and output arguments
        parser = argparse.ArgumentParser()
        parser.add_argument("--dst_dir", type=str, help="Directory where to write data")
        parser.add_argument('--dataset_name', type=str, choices=['MNIST', 'CIFAR10'])
        args = parser.parse_args()
        print(vars(args))

        root_path = os.path.join(args.dst_dir, args.dataset_name)

        if args.dataset_name == "MNIST":
            print(f"Download {args.dataset_name} => {root_path}")
            data_train = MNIST(root=root_path, train=True, download=True)
            data_test = MNIST(root=root_path, train=False, download=True)
        elif args.dataset_name == "CIFAR10":
            print(f"Download {args.dataset_name} => {root_path}")
            data_train = CIFAR10(root=root_path, train=True, download=True)
            data_test = CIFAR10(root=root_path, train=False, download=True)
        else:
            print("Unknown Dataset......")


    if __name__ == "__main__":
        main()
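
Before submitting to AzureML, the argument handling can be checked locally without downloading anything. The sketch below mirrors the script's two arguments and its `root_path` computation. `parse_args` and `build_root_path` are hypothetical helper names, not part of the original script, and `required=True` is an addition so that a missing argument fails early instead of reaching `os.path.join` as `None`.

```python
import argparse
import os


def parse_args(argv=None):
    """Parse the same two arguments as the download script."""
    parser = argparse.ArgumentParser()
    # required=True is an assumption added here; the original script omits it.
    parser.add_argument("--dst_dir", type=str, required=True,
                        help="Directory where to write data")
    parser.add_argument("--dataset_name", type=str, required=True,
                        choices=["MNIST", "CIFAR10"])
    return parser.parse_args(argv)


def build_root_path(args):
    """Return the folder the dataset would be downloaded into."""
    return os.path.join(args.dst_dir, args.dataset_name)


if __name__ == "__main__":
    # "local_out" is a hypothetical local folder standing in for the mounted output.
    demo = parse_args(["--dst_dir", "local_out", "--dataset_name", "MNIST"])
    print(build_root_path(demo))
```

Running the real script with a local `--dst_dir` first would show whether the files land where expected before any mount is involved.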

To launch the download on the correct datastore, I created:

- An Environment (Working)

- A Compute Cluster (Working)

- An entry script:

      from azure.ai.ml import MLClient
      from azure.ai.ml import command
      from azure.ai.ml import Input, Output
      from azure.ai.ml.entities import Environment
      from azure.identity import DefaultAzureCredential, InteractiveBrowserCredential
      from azure.ai.ml.constants import AssetTypes, InputOutputModes
      from datetime import datetime

      ## => CODE to get Environment
      ## => CODE to get Compute

      component_name = f"DataWrapper-{datetime.now().strftime('%Y%m%d%H%M%S')}"
      print(component_name)

      data_wrapper_component = command(
          name=component_name,
          display_name=component_name,
          description="Download a TorchVision Dataset in AzureStorage...",
          inputs={
              "dataset_name": "MNIST",
          },
          outputs={
              "dst_dir": Output(
                  type=AssetTypes.URI_FOLDER,
                  folder="azureml://datastores/torchvision_data",
                  mode=InputOutputModes.RW_MOUNT),
          },
          # The source folder of the component
          code="./code",  # Upload the whole code folder...
          command="""python components/datawrapper/datawrapper.py \
                  --dst_dir ${{outputs.dst_dir}} \
                  --dataset_name ${{inputs.dataset_name}}
                  """,
          compute=cpu_compute_target,
          experiment_name="datawrapper",
          is_deterministic=False,
          environment=f"{pipeline_job_env.name}:{pipeline_job_env.version}"
      )

      returned_job = ml_client.create_or_update(data_wrapper_component)
      aml_url = returned_job.studio_url
      print("Monitor your job at", aml_url)

The job completes successfully, but the datastore is still empty.
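
One way to narrow this down is to have the job log what it actually wrote under the output path before it exits, so the job logs can be compared with what the datastore shows. This is a minimal sketch using only the standard library. `list_written_files` and `report` are hypothetical helpers, and calling `report(root_path)` at the end of `main()` is an assumption about where it would fit.

```python
import os


def list_written_files(root):
    """Return sorted (relative_path, size_in_bytes) pairs for files under root."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.append((os.path.relpath(full, root), os.path.getsize(full)))
    return sorted(found)


def report(root):
    """Print every file found under root and return the list."""
    files = list_written_files(root)
    print(f"{len(files)} file(s) under {root}")
    for rel, size in files:
        print(f"  {rel} ({size} bytes)")
    return files
```

If the log shows files under the resolved `--dst_dir` while the datastore stays empty, the output is probably being written somewhere other than the intended datastore.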

I tried replacing

    folder="azureml://datastores/torchvision_data"

with

    path="azureml://datastores/torchvision_data"
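
For comparison, the read/write data documentation linked below writes datastore URIs with a `/paths/` segment, i.e. `azureml://datastores/<datastore_name>/paths/<path>`. The sketch below only builds such a string. `datastore_uri` is a hypothetical helper, the `mnist` sub-folder is a made-up example, and whether the missing `/paths/` segment is what causes the empty datastore here is an assumption, not something confirmed.

```python
def datastore_uri(datastore_name, relative_path=""):
    """Build a short-form AzureML datastore URI.

    Format assumed from the AzureML documentation:
    azureml://datastores/<datastore_name>/paths/<path>
    """
    if not datastore_name or "/" in datastore_name:
        raise ValueError("datastore_name must be a non-empty name without '/'")
    # Normalise separators and drop leading/trailing slashes.
    cleaned = relative_path.replace("\\", "/").strip("/")
    return f"azureml://datastores/{datastore_name}/paths/{cleaned}"


if __name__ == "__main__":
    # 'torchvision_data' is the datastore from the question; 'mnist' is hypothetical.
    print(datastore_uri("torchvision_data", "mnist"))
```

The resulting string would then be passed as the `path` argument of `Output`, in place of the bare datastore URI used above.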

The documentation I followed:

- https://github.com/Azure/azureml-examples/blob/main/sdk/python/resources/datastores/datastore.ipynb

- https://learn.microsoft.com/fr-fr/azure/machine-learning/how-to-read-write-data-v2?tabs=cli

- https://learn.microsoft.com/fr-fr/azure/machine-learning/how-to-read-write-data-v2?tabs=python#write-data-in-a-job

Did I do something wrong when mounting the output folder?

Thanks,