You are correct that the loss of precision is due to how floating point numbers are represented under the hood.

If you'd like to do floating point arithmetic without a loss of precision at this granularity, you'll need to use a special library. Example: BigDecimal (https://docs.oracle.com/javase/7/docs/api/java/math/BigDecimal.html)
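As a rough sketch of what that looks like in practice (the values 0.1 and 0.2 and the `exactSum` helper are only illustrative, not taken from your code), note that the inputs should be built from strings rather than from doubles:

```java
import java.math.BigDecimal;

class BigDecimalSketch {
    // Hypothetical helper: adds two decimal values given as strings, exactly.
    static BigDecimal exactSum(String a, String b) {
        // Use the String constructor. new BigDecimal(0.1) would capture the
        // double's binary approximation rather than the decimal value 0.1.
        return new BigDecimal(a).add(new BigDecimal(b));
    }

    public static void main(String[] args) {
        System.out.println(0.1 + 0.2);               // 0.30000000000000004
        System.out.println(exactSum("0.1", "0.2"));  // 0.3
    }
}
```

The trade-off is that BigDecimal arithmetic is slower and more verbose than arithmetic on primitives, so it tends to be used where exact decimal results matter (money, for example).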

If you're still interested in why this is happening, that's next. It's a little bit more complicated than just using a new library.

The first thing to note is that at the end of the day, on computers, it's all just binary and everything needs to have a binary representation. The strings in your program have binary representations. The integers do. The program itself does. And so do floating point values.

You can stop reading for a moment and imagine how you would represent a fractional value in binary... it's a tricky problem. There are actually two main solutions to this problem that I personally am aware of (and I'm not claiming to be aware of all of them).

The first is one that you may not have heard of called fixed-point representation. As its name suggests, in fixed-point representations, the "dot" character is locked in place depending on how you're interpreting the bits. The idea is that in the binary, you encode a constant number of digits after the decimal point. This is not the point of this post, so I'll move on but I wanted to mention it. More info: https://en.wikipedia.org/wiki/Fixed-point_arithmetic

The second method, which is much more common, is called floating-point representation. The widespread standard for floating-point representation is IEEE 754: https://en.wikipedia.org/wiki/IEEE_754 ... this is the standard that is responsible for the loss of precision that you're seeing today! The key idea with floating-point numbers is that they implement what you probably know as scientific notation. In decimal:

5.7893 * 10^4 == 57893

How does this work in binary? More on that next.

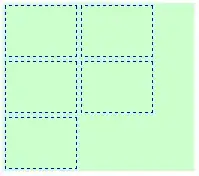

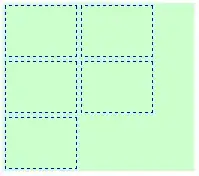

IEEE 754 outlines a variety of formats that represent fractional values using different numbers of bits (e.g. 16 bits, 32 bits, 64 bits, and so on). A Java double is a 64-bit IEEE 754 floating-point value. The 64 bits of a double are divided into three fields: 1 sign bit, 11 exponent bits, and 52 fraction bits.

These bit fields have the following roles:

The "sign" bit signals whether the number is negative: 1 indicates negative and 0 indicates non-negative.

The "exponent" bits serve as the exponent of your scientific notation (the 4 in 5.7893 * 10^4).

The "fraction" bits (aka the "mantissa") represent the fractional part of your scientific notation (the 7893 of 5.7893 * 10^4).
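If you want to look at these fields yourself, here is a minimal sketch that pulls them out of a double with `Double.doubleToLongBits`. The class and method names are made up for illustration. One thing to keep in mind: the stored exponent is not the raw exponent, because IEEE 754 adds an offset to it (1023 for doubles), so 1.0 shows a stored exponent of 1023 rather than 0.

```java
class DoubleFields {
    // Top bit: 1 for negative, 0 for non-negative.
    static long sign(double d) {
        return Double.doubleToLongBits(d) >>> 63;
    }

    // Next 11 bits: the stored exponent (includes the offset of 1023).
    static long exponent(double d) {
        return (Double.doubleToLongBits(d) >>> 52) & 0x7FFL;
    }

    // Low 52 bits: the fraction.
    static long fraction(double d) {
        return Double.doubleToLongBits(d) & ((1L << 52) - 1);
    }

    public static void main(String[] args) {
        double d = -57893.0;
        // Long.toBinaryString drops leading zeros, so the printed fields
        // may be shorter than 11 and 52 characters.
        System.out.println("sign     = " + sign(d));
        System.out.println("exponent = " + Long.toBinaryString(exponent(d)));
        System.out.println("fraction = " + Long.toBinaryString(fraction(d)));
    }
}
```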

This might make you wonder, how do we represent the 5 in 5.7893 * 10^4 if we've already used all the bits? The answer is that since we don't represent these values in decimal (we're using binary), we can imply the first digit of the number. For example, we could write:

(BINARY) 0.111 * 10^11 == (DECIMAL) 0.875 * 2^3 (How did I do this conversion? See footnote.)

But there's actually a clever trick. Instead, since all bits are either a 1 or 0, we can choose to write that same value as:

(BINARY) 1.11 * 10^10 == (DECIMAL) 1.75 * 2^2

Note the single, leading 1 in 1.11. If we choose to write all floating-point values with a single leading 1, then we can just assume either:

- That the value starts with a 1 bit.

OR

- The value is 0.

This is the trick that the IEEE 754 standard uses. It assumes that if your "exponent" bits are not all zero, then you must be encoding a value with a leading 1. This means that bit doesn't have to be stored in the representation, gaining all of us one extra bit of precision. (There is more nuance with the exponent bits than I am sharing here. Look up "IEEE 754 exponent bias" to learn more.)
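To see the implied leading 1 in action, here is a small sketch that rebuilds a double from its three fields. It assumes the exponent bias of 1023 that IEEE 754 uses for 64-bit values and only handles "normal" numbers (it deliberately rejects zero, subnormals, infinities, and NaN). The `decodeNormal` name is my own.

```java
class DecodeDouble {
    // Rebuilds a normal double from its sign, exponent, and fraction fields.
    static double decodeNormal(double d) {
        long bits = Double.doubleToLongBits(d);
        long sign = bits >>> 63;
        int exponent = (int) ((bits >>> 52) & 0x7FFL);
        long fraction = bits & ((1L << 52) - 1);

        // All-zero and all-one exponent fields are special cases, skipped here.
        if (exponent == 0 || exponent == 0x7FF) {
            throw new IllegalArgumentException("not a normal double: " + d);
        }

        // The leading 1 is not stored, so add it back: 1.fraction in binary.
        double significand = 1.0 + fraction / (double) (1L << 52);
        // Remove the bias, then scale by that power of two.
        double value = Math.scalb(significand, exponent - 1023);
        return sign == 0 ? value : -value;
    }

    public static void main(String[] args) {
        System.out.println(decodeNormal(7.0));      // 7.0, i.e. 1.75 * 2^2
        System.out.println(decodeNormal(-57893.0)); // -57893.0
    }
}
```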

So now you know the situation. We have 1 sign bit, 11 exponent bits, and 52 bits to represent the fractional part of your number. So why are we losing precision? The answer is that 52 bits just isn't enough to represent the entire real number line (you'd actually need infinite bits to represent it).

Because certain values can't be computed by adding together a finite number of reciprocals of powers of two (1/2, 1/4, 1/8, 1/16, etc.), it is possible (certain, almost) that you'll try to represent a real number that cannot be expressed in 52 bits of precision. For example, the decimal value 1/10 cannot be expressed exactly in binary. Let's try:

| Binary | Decimal | Note |
|---|---|---|
| 0.1 | 0.5 | too big. |
| 0.01 | 0.25 | too big. |
| 0.001 | 0.125 | ... |
| 0.0001 | 0.0625 | small enough! Let's use this bit first. |
| 0.00011 | 0.09375 | closer... |
| 0.000111 | 0.109375 | too big again! |
| 0.0001101 | 0.1015625 | |
| 0.00011001 | 0.09765625 | |
| 0.000110011 | 0.099609375 | |
| 0.0001100111 | 0.1005859375 | |
| 0.00011001101 | ... | this is gonna take a while. |

As you might be able to see, you end up falling into an infinite loop. Much like base 10 cannot represent 1/3 with a finite number of digits (0.33333333...), binary cannot represent 1/10 with a finite number of digits (0.00011001100110011...).
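If you'd rather not do that by hand, the repeating pattern can be generated with plain integer arithmetic. This is just a sketch of the usual "double the remainder" long-division trick; the method name and signature are made up for this answer, and it assumes 0 <= numerator < denominator.

```java
class BinaryExpansion {
    // Returns the first `digits` binary digits of numerator/denominator.
    static String fractionBits(int numerator, int denominator, int digits) {
        StringBuilder sb = new StringBuilder("0.");
        int remainder = numerator;
        for (int i = 0; i < digits; i++) {
            // Doubling the remainder shifts the next binary digit into place.
            remainder *= 2;
            if (remainder >= denominator) {
                sb.append('1');
                remainder -= denominator;
            } else {
                sb.append('0');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(fractionBits(1, 10, 20)); // 0.00011001100110011001
        System.out.println(fractionBits(1, 2, 4));   // 0.1000
    }
}
```

For 1/10 the remainder keeps cycling through the same values, which is why the 0011 block repeats forever. For 1/2 the remainder hits zero right away, so the expansion terminates.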

This is why when you use Java's double precision floating point value data type, you still lose precision.
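You can also ask Java what value a double really holds. A small sketch, assuming you only want to inspect the stored value: the `BigDecimal(double)` constructor converts the binary value exactly, so it exposes the approximation instead of hiding it. The `exact` helper is just an illustrative name.

```java
import java.math.BigDecimal;

class StoredValue {
    // Exact decimal expansion of the binary value the double actually holds.
    static BigDecimal exact(double d) {
        return new BigDecimal(d);
    }

    public static void main(String[] args) {
        // 0.1 has no finite binary expansion, so this prints a long decimal
        // that is slightly larger than 0.1.
        System.out.println(exact(0.1));
        // 0.5 is a power of two, so it is stored exactly.
        System.out.println(exact(0.5)); // 0.5
    }
}
```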

Footnote: In binary, 1010 == 10 in decimal. We know this because:

(2^3) * 1 + (2^2) * 0 + (2^1) * 1 + (2^0) * 0 == 10

Similarly, with fractional binary:

0.1 == 2^(-1) == 0.5

0.01 == 2^(-2) == 0.25

0.001 == 2^(-3) == 0.125

... and so on.

So 0.111 binary == 0.875 decimal.
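If you want to check conversions like these mechanically, here is a minimal sketch of the same positional rule in code. The `parse` helper is hypothetical, and it assumes the input contains only the characters 0, 1, and at most one dot.

```java
class BinaryFraction {
    // Converts a binary string such as "1010" or "0.111" to its value.
    static double parse(String binary) {
        int dot = binary.indexOf('.');
        String intPart = dot < 0 ? binary : binary.substring(0, dot);
        String fracPart = dot < 0 ? "" : binary.substring(dot + 1);

        double value = 0.0;
        // Integer digits: each step shifts left by one binary place.
        for (char c : intPart.toCharArray()) {
            value = value * 2 + (c - '0');
        }
        // Fractional digits: weights are 1/2, 1/4, 1/8, ...
        double weight = 0.5;
        for (char c : fracPart.toCharArray()) {
            value += (c - '0') * weight;
            weight /= 2;
        }
        return value;
    }

    public static void main(String[] args) {
        System.out.println(parse("1010"));  // 10.0
        System.out.println(parse("0.111")); // 0.875
        System.out.println(parse("1.11"));  // 1.75
    }
}
```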